Of Algo-Read'ems, (Col)Lapses in Time, Moderating Moderation

MisDisMal-Information Edition 42

What is this? MisDisMal-Information (Misinformation, Disinformation and Malinformation) aims to track information disorder and the information ecosystem largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive, etc., who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition 42 of MisDisMal-Information

Curate and Algo-Read’em

Last weekend, I ran a little poll on Twitter to try and find out how people sorted their feeds - algorithmically or reverse-chronologically. This was driven by an unscientific observation that most people around me tended to use the algorithmic or ‘Home’ option instead of the ‘Latest Tweets’ option that I primarily rely on, and massive FOMO over what I could be missing out on.

What, I said it was little, didn’t I?

Needless to say, that ridiculously small sample didn’t really help me in any way. In April, though, Jack Bandy and Nicholas Diakopoulos published a study on how Twitter’s algorithmic curation affects what users see in their timelines (there’s a Medium version too).

Wait, Prateek, Twitter has a very limited and privileged set of users, why do I care?

Fair point. I’m using Twitter as an example, but it is a broader point. As Sinan Aral writes in The Hype Machine:

“the Hype Machine’s content-curation algorithms reduce consumption diversity through a Hype Loop that nudges us toward polarization: Friend-suggestion algorithms connect us with people like ourselves. So the content shared by our contacts is biased toward our own perspectives. Newsfeed algorithms further reduce content diversity by narrowing our reading options to the items that most directly match our preferences. We then choose to read an even narrower subset of this content, feeding biased choices back into the machine intelligence that infers what we want, creating a cycle of polarization that draws us into factionalized information bubbles.”

So there are multiple levels of curation/filtration with varying degrees of human-algorithm interaction at work:

Who platforms recommend we connect with.

Who we ultimately connect with on platforms.

What newsfeeds recommend to us, based on what we do.

What we finally choose to click on/read.

Bandy and Diakopoulos relied on eight “automated puppet accounts”, comparing their chronological and algorithmic feeds. Another post describing their process specifies that they basically cloned/emulated a set of accounts from an identified set of left and right-leaning communities and sampled 50 tweets from their timelines twice a day. Note that the puppets didn’t actually click on links, which Twitter does use as a signal for personalisation, so that’s a potential limitation. Anyway, here’s what they found.

Twitter’s algorithm showed fewer external links:

On average, 51% of tweets in chronological timelines contained an external link, compared to just 18% in the algorithmic timelines

Many Suggested Tweets

On average, “suggested” tweets (from non-followed accounts) made up 55% of the algorithmic timeline.

Increased Source Diversity

the algorithm almost doubled the number of unique accounts in the timeline

…algorithm also reined in accounts that tweeted frequently: on average, the ten most-tweeting accounts made up 52% of tweets in the chronological timeline, but just 24% of tweets in the algorithmic timeline

Shift in topics

They clustered tweets containing political, health, economic information and information about fatalities. Except for the political cluster, other categories had reduced exposure.

‘Slight’ partisan echo chamber effect

Tagging accounts as Influencers on the Left, Niche Left, Bipartisan, Niche Right and Influencers on the Right, here’s what happened:

For left-leaning puppets, 43% of their chronological timelines came from bipartisan accounts (purple in the figure below), decreasing to 22% in their algorithmic timelines

Right-leaning puppets also saw a drop. 20% of their chronological timelines were from bipartisan accounts, but only 14% of their algorithmic timelines

Notably, the representation of Influencers on the Left and Right remained more or less constant across both types of timelines and left/right-leaning accounts.
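For anyone who wants to poke at their own feeds, a few of the measures above (share of tweets with an external link, number of unique accounts, share of tweets from the most-tweeting accounts) are simple to compute once you have two timeline samples. This is a minimal sketch and not the authors' code: the `Tweet` record, the function names and the toy timelines are all hypothetical, and the numbers below are made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Tweet:
    # Hypothetical record: just the two fields these measures need.
    author: str
    has_external_link: bool


def link_share(timeline):
    """Fraction of tweets in the sample that contain an external link."""
    if not timeline:
        return 0.0
    return sum(t.has_external_link for t in timeline) / len(timeline)


def unique_accounts(timeline):
    """Number of distinct accounts appearing in the sample."""
    return len({t.author for t in timeline})


def top_n_share(timeline, n=10):
    """Fraction of tweets that come from the n most-tweeting accounts."""
    if not timeline:
        return 0.0
    counts = Counter(t.author for t in timeline)
    return sum(count for _, count in counts.most_common(n)) / len(timeline)


# Made-up toy samples, NOT data from the study.
chronological = [
    Tweet("a", True), Tweet("a", True), Tweet("a", False), Tweet("b", True),
]
algorithmic = [
    Tweet("a", False), Tweet("b", True), Tweet("c", False), Tweet("d", False),
]

for name, sample in [("chronological", chronological), ("algorithmic", algorithmic)]:
    print(name, link_share(sample), unique_accounts(sample), top_n_share(sample, n=1))
```

Comparing the same three numbers across a ‘Latest Tweets’ sample and a ‘Home’ sample gives a rough, personal version of the comparison, with the same caveat the study carries: a small sample says little on its own.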

Yes, there are limitations to this study, and we need to learn more about these kinds of effects. Disappointingly, for those of us who love a good villain, this neither condemns algorithms nor exonerates them.

Also Read:

Benedict Evans’ 2018 post about ‘The death of the newsfeed’:

according to Facebook, its average user is eligible to see at least 1,500 items per day in their newsfeed. Rather like the wedding with 200 people, this seems absurd. But then, it turns out, that over the course of a few years you do ‘friend’ 200 or 300 people. And if you’ve friended 300 people, and each of them post a couple of pictures, tap like on a few news stories or comment a couple of times, then, by the inexorable law of multiplication, yes, you will have something over a thousand new items in your feed every single day.

…

This overload means it now makes little sense to ask for the ‘chronological feed’ back. If you have 1,500 or 3,000 items a day, then the chronological feed is actually just the items you can be bothered to scroll through before giving up, which can only be 10% or 20% of what’s actually there. This will be sorted by no logical order at all except whether your friends happened to post them within the last hour. It’s not so much chronological in any useful sense as a random sample, where the randomiser is simply whatever time you yourself happen to open the app. ’What did any of the 300 people that I friended in the last 5 years post between 16:32 and 17:03?’ Meanwhile, giving us detailed manual controls and filters makes little more sense - the entire history of the tech industry tells us that actual normal people would never use them, even if they worked. People don't file.

And yet, I’ll be sticking with my combination of Latest Tweets + Lists + Advanced Search on keywords and lists, filtered by engagement.

They will curate, and I’ll go read’em.

(col)Lapses in time

A video made by a 5-year-old complaining about excessive online classes on Sunday, uploaded as a status message on WhatsApp, took on a life of its own, resulting in the district administration announcing a reduction in the duration and number of classes on Tuesday [Naveed Iqbal - Indian Express].

Imagine how long this would have taken through ‘official channels’ and ‘established procedure’.

In a very different example of this ‘time collapse’, Kashmir Hill noted, while writing about Emily Wilder being fired by The Associated Press after old posts were dug up:

Part of the problem is how time itself has been warped by the internet. Everything moves faster than before. Accountability from an individual’s employer or affiliated institutions is expected immediately upon the unearthing of years-old content. Who you were a year ago, or five years ago, or decades ago, is flattened into who you are now. Time has collapsed and everything is in the present because it takes microseconds to pull it up online.

The way I see it, there are 2 distinct ‘time collapses’ to consider here.

The time elapsed between something from the past and now - a lapse in judgement, a problematic position/opinion, etc. Referred to as 1.

Now that this ‘something’ has been brought up, the time to respond/react. Referred to as 2.

We’ve seen this countless times in India too: people pull up old tweets/posts and then (typically) pressurise an employer to take action, and the employer then (again, typically) caves and obliges.

1 (also, typically - not always) centres around an individual and seems to have increased thanks to the internet. 2 (generally) attains significance when enacted by some sort of collective - a community/group of people, an institution, etc. and has significantly shrunk in our modern information ecosystem.

There’s a lot to consider about 1, the calling out it results in and the wide range of outcomes it can have. Indeed, there have been many notable examples over the last few weeks alone [stand-up comics’ past tweets/jokes being brought up].

As someone who thinks about both public discourse and public policy, 2 grabs my interest more. Note that I am considering the pressure to respond/react faster and not the ability to respond/react faster.

What kind of institutional responses does this time-collapse lead to? Do we have the luxury to really think things through? Is the need to be ‘seen’ to be responding quickly eclipsing the need to put together ‘quality’ responses?

The kind of responses communities/institutions craft in 2 can determine how much 1 remains a factor. As Kashmir Hill quotes Krystal Ball:

“The less successful it is, the less that it works,” she said, “the less interest in it people are ultimately going to have.”

Also Read:

Charlie Warzel - The Internet is Flat

Moderating Content Moderation

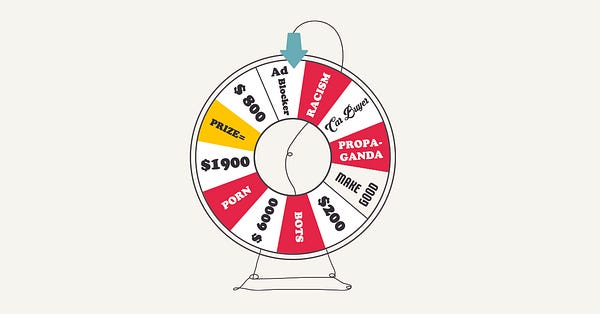

“Content Moderation is the essence of what platforms do” - (heavily paraphrased) Tarleton Gillespie. - Yes, true.

“We need platforms to content moderate the hell out of content on platforms” - Simplistic version of the argument made by many people on the internet - Err, let’s talk about this.

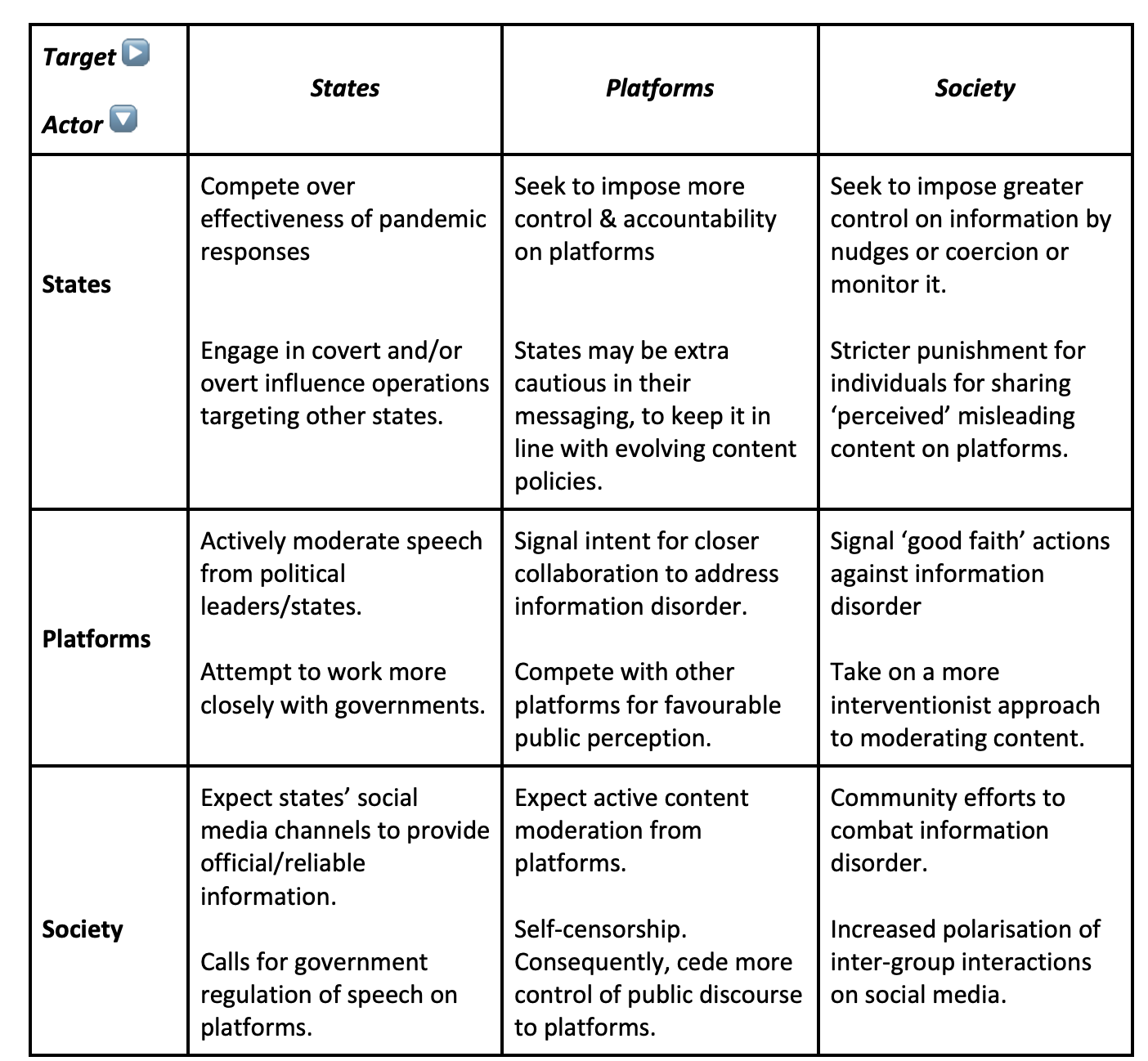

Back when platforms started playing a more active role with COVID-19 information disorder, Rohan Seth and I speculated in a document about their responses.

The bits about calls for more moderation by society and a more interventionist role by platforms seem to have played out.

As Evelyn Douek writes in Wired in an aptly titled piece ‘More Content Moderation Is Not Always Better’

The internet is sitting at a crossroads, and it’s worth being thoughtful about the path we choose for it. More content moderation isn’t always better moderation, and there are trade-offs at every step. Maybe those trade-offs are worth it, but ignoring them doesn’t mean they don’t exist.

…

As companies develop ever more types of technology to find and remove content in different ways, there becomes an expectation they should use it. Can moderate implies ought to moderate. After all, once a tool has been put into use, it’s hard to put it back in the box.

Oh, and look, India finds a mention too:

Authoritarian and repressive governments around the world have pointed to the rhetoric of liberal democracies in justifying their own censorship. This is obviously a specious comparison. Shutting down criticism of the government’s handling of a public health emergency, as the Indian government is doing, is as clear an affront to free speech as it gets. But there is some tension in yelling at platforms to take more down here but stop taking so much down over there. So far, Western governments have refused to address this.

Aside: As I was writing this edition, cartoonist @MANJUtoons tweeted that his account was the subject of a legal request from India.

Anyway, there are 2 big takeaways here.

Content Moderation at scale is impossible to do well, as Mike Masnick says. More moderation almost certainly means more false positives. Just ask Palestinian users who have been facing this for years. Perhaps we need to moderate how much content we moderate, and

Just deleting content is not going to solve the complex politico-socio-economic problems we have to fix. So maybe we need to moderate our expectations about outcomes from simply increasing how much content we moderate.

Also Read:

I realise this is the 2nd Benedict Evans post in this edition - Is content moderation a dead end?

However, it often now seems that content moderation is a Sisyphean task, where we can certainly reduce the problem, but almost by definition cannot solve it. The internet is people: all of society is online now, and so all of society’s problems are expressed, amplified and channeled in new ways by the internet. We can try to control that, but perhaps a certain level of bad behaviour on the internet and on social might just be inevitable, and we have to decide what we want, just as we did for cars or telephones - we require seat belts and safety standards, and speed limits, but don’t demand that cars be unable to exceed the speed limit.

…

Hence, I wonder how far the answers to our problems with social media are not more moderators, just as the answer to PC security was not virus scanners, but to change the model - to remove whole layers of mechanics that enable abuse. So, for example, Instagram doesn’t have links, and Clubhouse doesn’t have replies, quotes or screenshots. Email newsletters don’t seem to have virality. Some people argue that the problem is ads, or algorithmic feeds (both of which ideas I disagree with pretty strongly - I wrote about newsfeeds here), but this gets at the same underlying point: instead of looking for bad stuff, perhaps we should change the paths that bad stuff can abuse.

Also Read #2

Terrorists are hiding where they can’t be moderated [Adam Hadley - Wired]

… Meanwhile in India

▶️ A book with 56 blank pages titled ‘Masterstroke’ made it onto Amazon’s listings [Nivedita Niranjankumar - BoomLive]

Related:

This is not surprising, since we have seen past coverage about conspiracy theory books on Amazon [BuzzfeedNews], or how it puts ‘misinformation at the top of your reading list’ [TheGuardian] and even a study by ISD Global. What was the implication? I’ll go read em… er.. the algorithm.

(This is the last Benedict Evans link in this edition) Does Amazon know what it sells?

There’s an old cliché that ecommerce has infinite shelf space, but that’s not quite true for Amazon. It would be more useful to say that it has one shelf that’s infinitely long. Everything it sells has to fit on the same shelf and be treated in the same way - it has to fit into the same retailing model and the same logistics model. That’s how Amazon can scale indefinitely to new products and new product categories

▶️ Ayushman Kaul investigates India v/s Disinformation and Press Monitor [DFRLab]

This instance is the latest in a series of cases in which PR companies and digital communications firms operate online publishing networks claiming to fight disinformation while simultaneously aligning with individual politicians or governments. The DFRLab has reported on prior examples of so-called “disinformation-as-a-service” providers, including our coverage of Operation Carthage and Archimedes Group.

In a written response to questions posed by the DFRLab, the company confirmed that it had contracts with the Indian government and some of the country’s foreign embassies to conduct media monitoring services but claimed that India Vs. Disinformation was a separate independent initiative not related to these contracts. Press Monitor also told the DFRLab that it created the websites as a means of gaining the favor of and receiving further commercial contracts from the Indian government.

Related:

If you’ve been a subscriber for a while, you may recall that India v/s Disinformation made an appearance in edition 13 > New India, New Twiplomacy.

And, as I said in edition 29 > Eww Disinfo, expect to see more investigations focusing on India.

There’s also a broader point here. Influence operations can be found wherever you look for them (if you look hard enough and are smart enough, obviously) - we just seem to hear a lot more about Russian, Chinese and Iranian ones today because a lot of resources are focused on looking there. In fact, Mahsa Alimardani, who spoke about Iranian influence operations before the EUDisinfo team said (in the context of an overemphasis on Iran) [from ~14:22:30 in the video]

▶️ An intriguing piece: “Covid19 Is the First Global Tragedy in a Post‑Truth Age. Can We Preserve an Authentic Record of What Happened?” [Saumya Kalia - TheSwaddle]

▶️ The Telangana police is ‘closely watching’ social media [Aihik Sur - Medianama]

Around the world in 10 points

▶️ Twitter appears to be gearing up for a wider rollout of Birdwatch, its crowdsourced fact-checking programme. [Lucas Matney - TechCrunch].

Read Poynter’s analysis on issues with Birdwatch

▶️ Advertisers want to audit platform transparency efforts

▶️ The Truth Brigade will counter right-wing disinformation on social networks. [Cat Zakrzewski - Washington Post]

▶️ EU wants to strengthen its Code of Practice on Disinformation.

▶️ Self-regulation 2:0? A critical reflection of the European fight against disinformation - Ethan Shattock

▶️ In Pakistan, pro-government fact-checkers are trolling and targeting journalists. [Ramsha Jahangir - Coda]

▶️ Australia’s drug regulator is considering referring COVID vaccine misinformation to law enforcement. [Paul Karp - TheGuardian]

▶️ Misinformation and the Mainstream Media

Do Not Blame the Media! The Role of Politicians and Parties in Fragmenting Online Political Debate - Raphael Heiberger, Silvia Majó-Vázquez, Laia Castro Herrero, Rasmus K. Nielsen, Frank Esser based on an analysis of almost half a million election-related tweets collected during the 2017 French, German, and U.K. national elections.

“One Big Fake News”: Misinformation at the Intersection of User-Based and Legacy Media - Aya Yadlin, Oranit Klein Shagrir with a case study of Israeli televised content.

▶️ Do You See What I See? Capabilities and Limits of automated multimedia content analysis - Carey Shenkman, Dhanaraj Thakur, Emma Llansó.

▶️ #Scamdemic, #Plandemic, or #Scaredemic: What Parler Social Media Platform Tells Us about COVID-19 Vaccine - Annalise Baines, Muhammad Ittefaq and Mauryne Abwao.