Of Muddied Water, Explosions, The Big QAn(o)on(A) and New Twiplomacy

MisdisMal-Information Edition 13

What is this? This newsletter aims to track information disorder largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive etc who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition 13 of MisDisMal-Information

This edition will not feature Donald Trump's Executive Orders covering Bytedance and Tencent. It is very much a developing story at the time of writing this edition and a lot more is likely to happen by the time this edition reaches your inboxes.

Muddying the waters

It is raining incessantly in various parts of the country, but that's not what this is about. Muddying the waters (metaphorically speaking) is a big part of why Information Pollution succeeds.

Back in January, at a Disinformation Event, someone remarked that "nearly 80%" of the information disorder in India comes from one side of the political spectrum (discussions were under Chatham House rules, so I will not disclose who made the statement).

At the time, it seemed plausible. However, I was also convinced that if it seemed to be serving "one side" so well, it was very unlikely that the status quo would persist. As I wrote in Edition 2 - "Everyone uses information disorder equally, some just seem to be more equal." Here's a fun pie chart from that edition on the ideological (subjective) leaning I attributed to debunked COVID-19 information disorder categorised as “culture”.

August 5th

Given the events on August 5th, it was not surprising that Social Media Platforms in general were a battleground of sorts. The usual suspects were at it, and were being called out.

This thread also links to an older thread from 2018. It seems absurd that this gentleman is still used in the manner he is. It is almost like people don't care about the truth.

And there were also some bizarre posts by opposition party members.

The former was debunked in short order, with the fact-check pointing out that the image bears an inscription in English on the inner part of the band. And I can ascribe no logic to Dr. Tharoor’s tweet (I really tried).

To Mexico

But since we’re on the topic of muddying waters, stay with me as we head to Mexico (this might just be the only way many of us can go there in the near future anyway). A post by @DFRLab details how impartial fact-checking was used to further political objectives. The report studies tweets from @esp_covid19 and @covidmx + accounts that engaged with them (i.e. were mentioned by these orgs or were otherwise at the receiving end of interactions such as RTs, likes and replies).

A former President selectively amplified a fact-check by one of these outfits to show that the current government was not reporting the COVID-19 death toll accurately. However, he did not include a clarification by the government explaining the errors. It is impossible to tell if this was unintentional or deliberate.

It also highlights how the orgs faced some backlash because they were tagged in posts attacking the government. Apparently, they did not reciprocate/amplify this engagement in any way.

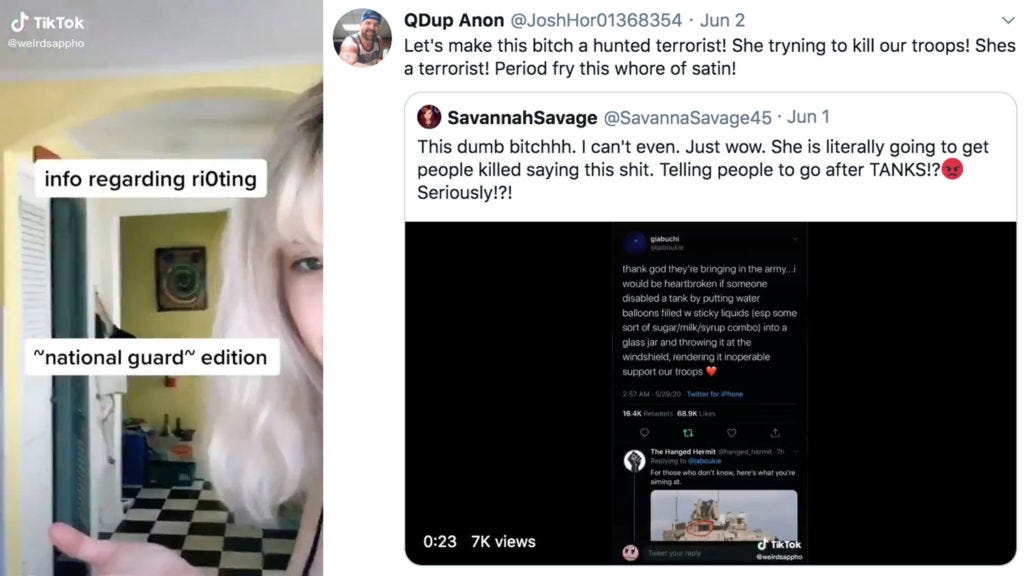

TikTok Terror

An example of “coordinated behaviour” cutting the other way. A TikTok creator’s satirical video was picked up by QAnon accounts with the accusation that she was training terrorists. The Mainer story that reported this (also the source of the image) mentions leaked Department of Homeland Security documents which were being shared with police departments in Maine.

Sciencing_Bi Bye

h/t to @TheNaikMic for flagging this story regarding BethAnn McLaughlin (founder of MeTooSTEM - website now offline) to me.

Twitter has suspended the account of MeTooSTEM founder BethAnn McLaughlin after allegations emerged that the former Vanderbilt University neuroscientist fabricated the Twitter account of an apparently nonexistent female Native American anthropologist at Arizona State University (ASU) who had claimed to be an anonymous victim of sexual harassment by a Harvard professor. McLaughlin announced on 31 July that Alepo, the woman supposedly behind the @Sciencing_Bi account, had died after a COVID-19 infection. The company has also suspended that pseudonymous account.

….

But during the Zoom memorial service, Eisen and several others began to doubt that Alepo was real. After they learned new details about the supposed ASU professor during the service but could not find any evidence of her existence or death—or evidence that anyone on the Zoom call had ever met her—their grief turned to protests of anger and betrayal.

I will admit that I wasn’t fully aware of earlier controversies surrounding MeTooSTEM either. And while platforms (as private entities) are entitled to enforce their rules (more on this later) - what are the criteria that went into determining that an account suspension was the appropriate course of action? There’s another obvious question linked to Edition 11 that I am not asking here.

Misinformation - Nothing official about it

This post on TechDirt highlights a case of misleading information from official sources, in the context of a story by NYPost about internal NYPD communication. The details are largely irrelevant because the same thing is likely to play out in multiple different contexts. In some ways, I am reminded of the Elephant from Kerala story. The key ingredients are:

A story/article that reported on the basis of official information/sources

It then turns out to be incorrect/out-of-context/only partly true - pick one.

A minor correction may or may not be made, but uncorrected versions are already out there.

There were some interesting questions listed here that are worth considering:

Questions for Twitter:

Should it flag potentially misleading tweets when published in major media publications, such as the NY Post?

Should it matter if the information originated at an official government source, such as the NYPD?

How much investigation should be done to determine the accuracy (or not) of the internal police report? How should the NY Post’s framing of the story reflect this investigation?

Does it matter that the NY Post retweeted the story a day after the details were credibly called into question?

Questions and policy implications to consider:

Do different publications require different standards of review?

Does it matter if underlying information is coming from a governmental organization?

If a media report accurately reports on the content of an underlying report that is erroneous or misleading, does that make the report itself misleading?

How much does wider context (protests, accusations of violence, etc.) need to be considered when making determinations regarding moderation?

Election Misinformation

Politico ran a story about Silicon Valley losing the election misinformation battle. Joan Donovan makes a very important intervention though:

This is important. Framing matters: foreign interference is scary, but it is taking up way too much oxygen in this conversation. Domestic information disorder needs more attention. Of course, those of us in India know that already.

Incidentally, the US State Department’s Global Engagement Center released a report on Russia’s Disinformation and Propaganda Ecosystem. There are 2 particularly interesting infographics: one detailing the mechanisms on a spectrum ranging from attributable to non-attributable, and one on a claim that the US created COVID-19.

And its Rewards for Justice Program is.. well.. read the quote:

The U.S. Department of State’s Rewards for Justice (RFJ) program, which is administered by the Diplomatic Security Service, is offering a reward of up to $10 million for information leading to the identification or location of any person who works with or for a foreign government for the purpose of interfering with U.S. elections through certain illegal cyber activities.

I tend to agree with DFRLab’s Zarine Kharazian:

Not Truth, but hyper-truth

And to close this section out, Tommy Shane of First Draft writes about the difference between facts and truth.

Not everyone is interested in accounts of the world based on institutionalized processes and the perspectives of experts.

The post contrasts search results for “coronavirus facts” and “coronavirus truth”:

On Bing, we saw something similar but more pronounced. Where searches for “facts” yielded stories from official sources and fact checkers, one of the top results linked to “truth” is a website called The Dark Truth, which claims the coronavirus is a Chinese bioweapon.

While this seems obvious in retrospect, the stark difference in the results took me by surprise. Maybe we need to rethink the phrase “post-truth”.

Muddied waters indeed.

The Beirut Explosion

The explosion in Beirut once again reminded us that, in addition to the loss of lives and property, accurate information is another casualty during such incidents.

From nuclear bombs to attacks and Donald Trump, Marianna Spring has a round-up that demonstrates this.

And I realise the contradiction with my mini-rant about foreign interference above, but here Marc Owen Jones details activity from Saudi-focused accounts.

And just in case I was able to live that one down, here is Samantha North with a post from @adico that talks about the resurrection of previously de-platformed “South Front”. (Bonus Question: Does de-platforming work? @ me on twitter)

The Big QAn(o)on(A)

Hey, it isn’t my fault QAnon lends itself to some really bad puns.

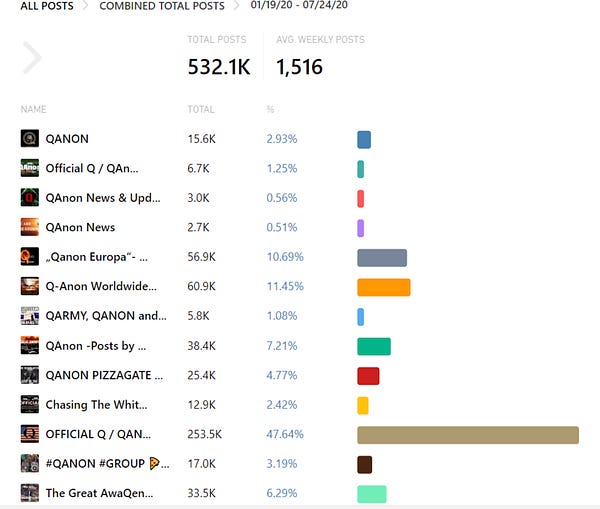

Ok, but QAnon is serious business. Brandy Zadrozny and Ben Collins have done some excellent reporting on the group. They were also on this week’s episode of Lawfare’s Arbiters of Truth series.

In this conversation with Charlie Warzel, Adrian Hon (chief executive of the gaming company Six to Start) likens it to an Alternate Reality Game:

> QAnon was born on forums like 4chan and 8chan, and the way that people interact with it initially is so purely online. But the effects bleed into the real world much like an alternate reality game.

> QAnon uses a phrase like, “I did my research.” I kept hearing that and I couldn’t get it out of my head. This research is, basically, typing things into Google but when they do, they go down the rabbit hole. (remember facts v/s truth)

> Many people feel alienated and left behind by the world. There’s something about QAnon like ARGs that reward and involve people for being who they are. They create a community that lets people show off their “research” skills and those people become incredibly valuable to the community.

> It is good thing that Twitter is trying to ban accounts and viral conversations around QAnon. It helps reduce the spread. But the reason this is so dangerous is that the little rabbit holes that take you deeper into QAnon are everywhere. A YouTube video might lead you to a Wikipedia page that takes you to another video. Each one is maybe harmless but the combined effect might draw you into the world.

And some time early on Friday morning in India, Facebook took down one of the largest QAnon groups.

Aside: During the podcast, Ben Collins made a reference to the fact that groups like ISIS have nowhere near the kind of footprint that QAnon does. It also reminded me of a very comprehensive and insightful thread from Daphne Keller on GIFCT earlier in the week.

Meanwhile in India

Thanks, but no thanks, WhatsApp!

WhatsApp is running a pilot that puts a magnifying glass next to frequently forwarded (forwarded more than 5 times) messages and lets people run a search to verify if those messages have been fact-checked.

The countries included? -

Search the web is being rolled out starting today in Brazil, Italy, Ireland, Mexico, Spain, UK, and US for those on the latest versions of WhatsApp for Android, iOS and WhatsApp Web.

I guess it is a good thing then that their largest market does not have an information disorder problem. It is admittedly a pilot, but I am still extremely curious as to why India was left out.

As Manish Singh, writing for TechCrunch, put it, “there’s only so much a tech firm can do to fight human stupidity.”

Aviv Ovadya brings a different perspective to this - everyone wants to make information disorder someone else’s problem.

And, since I love contradictions, I’ll leave you with this article by Joan Donovan and Claire Wardle on how information disorder is everybody’s problem (emphasis mine).

As researchers, this pandemic has taught us that when information seekers search online for medical guidance, society cannot shoulder the burden wrought by rampant medical misinformation, scams, and hoaxes. In assessing the true cost of misinformation, it doesn’t just cost more financially to undo the damage caused by misinformation, it also costs us socially and politically, which taxes every one of us in different ways.

I’m also going to gloat a little here. I may have said something similar 3 weeks ago.

Is this madness?

In the context of the (forever) impending revision to the Intermediary (Amended) Guidelines, Kazim Rizvi and Ishani Tikku make a case for not shooting the messenger. It does sometimes feel like we live in Sparta.

A survey by Social Media Matters showed that out of ~4000 respondents, 69% claimed to have received “fake news” during the pandemic. 70% reported cross-checking.

An MP from East Tripura who was booked for “spreading false rumours” went after the BJP government for trying to stifle dissent.

“It is a matter of pity that no one, not even Lenin, Karl Marx, even Rabindranath Tagore, Maharaja Bir Bikram, is safe under this regime and those who want to raise any voice are slapped with an FIR or verbal harassment.”

An editorial in Economic Times argues in favour of getting Google and Facebook to pay for news.

Activists in Ahmedgarh have pledged to curb fake news. I guess we’ll just have to take their word for it:

Office-bearers and activists of various social and religious organisations have vowed to deject rumour mongers who might attempt to disrupt peace by posting misleading posts on the social media on the occasion of foundation stone laying of Ram Temple on Wednesday.

Not quite madness, but slightly crazy, this thread by the good people of Tattle Civic Technologies on Deepfakes, Cheap fakes and Information Disorder in India.

New India, New Twiplomacy

The Indian envoy to the UN, in an interview to ANI, singled out Pakistan for creating disharmony through disinformation campaigns. Ok, no surprises there. What was interesting, though, was that the Indian embassy in Egypt decided to tweet this exchange from its official handle.

This isn’t a one-off. A few weeks ago, the Indian embassy in put out an even stranger tweet. It does seem to be a shift in how we’re engaging through this channel. If you’re looking to track this, @IndianDiplomacy maintains Twitter lists. There’s possibly more to this, but let’s leave that for another day.

Religious intolerance and bigotry knows no limits in Pakistan. Priceless heritage being destroyed under the sledgehammer of fanatics. Lord Buddha, please forgive them, for they know not what they are doing! @IndiavsDisinfo @MEAIndia

1800 old Bhudda remains founded in takht bhai & smashed by religious extremists. The real aim of putting extremism in people’s mind is to washout the identity of those who are living in this region as they done before in Afghanistan. Proper action needed to secure such identities. https://t.co/oEsAexE6E3

Ihtesham Afghan @IhteshamAfghan

Big Tech Watch

Remember the Ad ban that was supposed to get Facebook to heal? Well, Sara Fischer’s article in Axios notes that it made little difference revenue-wise. With 9M advertisers, it was always going to be a challenge. That said, she points out that the ban does have signalling value.

Facebook also released its Coordinated Inauthentic Behaviour report for July.

Total number of Facebook accounts removed: 798

Total number of Instagram accounts removed: 259

Total number of Pages removed: 669

Total number of Groups removed: 69

Networks removed (activity originated -> target)

Romania -> US.

US, Canada, Australia, New Zealand, Vietnam, Taiwan, Hong Kong, Indonesia, Germany, the UK, Finland and France -> English and Chinese-speaking audiences globally and Vietnam

Iraq and Switzerland -> Iraq

Canada and Ecuador -> El Salvador, Argentina, Uruguay, Venezuela, Ecuador and Chile

Democratic Republic of Congo -> Domestic

Yemen -> Domestic

Brazil -> Domestic

Ukraine -> Domestic

US -> Domestic

Note how 5 of the 9 networks seem to have been engaged in domestic campaigns.

Ryan Mac writes for Buzzfeed about Instagram seeming to favour Donald Trump over Joe Biden. What happened here is that a feature that shows related hashtags surfaced negative content along with hashtags related to Joe Biden but not Donald Trump. Instagram said it was a technical issue that some hashtags did not show any related content. And it apparently did affect neutral content too, while some lower-engagement Trump-related hashtags did surface negative content. Let’s take them at face value for now.

Another Buzzfeed story (Craig Silverman and Ryan Mac) highlighted instances of Facebook intervening in fact-checks to supposedly favour the right-wing. It seems like just last week that Republicans were asking Big Tech CEOs about biases against the right.

In a first, Facebook took action against content posted by Donald Trump for violating its rules against coronavirus misinformation. Twitter, meanwhile, took action against the handle @TeamTrump, though they did manage to cause some confusion with their communication, which led people to believe that Donald Trump’s account was facing suspension (it wasn’t). Donie O'Sullivan has a thread which (kind of) captures this.

Twitter went a step further and even took action against tweets and accounts that shared the same content while criticising Donald Trump. This included accounts of the DNC and journalists. These tweets were in violation of the same rules that were used to act against Trump’s tweet.

TikTok announced 3 changes to deal with information disorder and election disruption efforts. Aside: I couldn’t access the link directly since TikTok websites appear to be blocked by the ISPs I have access to.

> We're updating our policies on misleading content to provide better clarity on what is and isn't allowed on TikTok.

> We're broadening our fact-checking partnerships to help verify election-related misinformation, and adding an in-app reporting option for election misinformation.

> We're working with experts including the U.S. Department of Homeland Security to protect against foreign influence on our platform.

A report by Reuters indicates that Google took down 2500 China linked YouTube channels but details were scarce.

Google did not identify the specific channels and provided few other details, except to link the videos to similar activity spotted by Twitter and to a disinformation campaign identified in April by social media analytics company Graphika.

And, as we saw with the South Front earlier, it remains to be seen if these networks can be kept off.

Google also recently announced updates to its policies dealing with leaks.

Publishers who accept Google advertising also can’t directly distribute hacked materials, though links to places like Wikileaks or BlueLeaks — a collection of information leaked from police departments — would be allowed.

This sounds like it is going to get very messy.

I said I wouldn't be addressing the Trump EO. It is still important to address the Microsoft acquisition situation, at least tangentially. In a detailed write-up in Gizmodo, Shoshana Wodinsky details how an acquisition may not change much in terms of what data goes to China because of Ad Networks. You are free to disagree due to your own geo-political assessment, but it is still worth a read.

Study Corner

This essay by Mahsa Alimardani and Mona Elswah covers religious misinformation in the MENA Region. Not that I am happy about information disorder in different parts of the world, but it was interesting to read about a new region. Some excerpts below:

new dynamics for religion in the age of the Internet are contributing to a uniquely regional and religious form of misinformation.

the phenomenon of religious misinformation is a defining characteristic of the MENA’s online sphere, becoming even more acute during the COVID-19 pandemic.

religious misinformation is harder to fact-check and requires a deeper knowledge of religion and its sociopolitical context to discern.

(In this context) The use of religious misinformation draws upon a powerful appeal on the role of Islam, which is deeply entrenched in the culture, society, and politics of the region.

The perpetrators of some of these messages are sometimes religious authorities with ties to ruling establishments. In other instances, these manipulations are promoted by content creators who fabricate, misinterpret, and misuse religious scriptures to attract followers for monetary gain.

Social media platforms are defining new parameters for religious dynamics and authority. They are the impetus behind why religious misinformation is contributing to this infodemic.

While mainstream platforms have often fallen short in curbing misinformation in their native English-speaking countries, their responses in non-English speaking countries may have fared worse. MENA-related content moderation issues predate the pandemic and have often been excused as a lack of local linguistic capacity.

Mapping YouTube by Bernhard Rieder, Òscar Coromina and Ariadna Matamoros-Fernández, as the name suggests, attempts to explore YouTube.

First, we investigate stratification and hierarchization in broadly quantitative terms, connecting to well-known tropes on structural hierarchies emerging in networked systems, where a small number of elite actors often dominate visibility.

Second, we inquire into YouTube’s channel categories, their relationships, and their proportions as a means to better understand the topics on offer and their relative importance.

Third, we analyze channels according to country affiliation to gain insights into the dynamics and fault lines that align with country and language.

They defined 3 tiers (1k–10k, 10k–100k, and 100k+), with the largest one being called the elite tier. Unsurprisingly, this tier dominates activity on the platform. India had the 2nd highest number of elite channels. They also determined that India, South Korea and Egypt publish a disproportionate number of videos, while the US does better with fewer videos published considering its subscriber and view count. They conclude elsewhere that this is an indicator of industrialisation in the US. India and Pakistan have low like ratios, which the authors believe are indicators of disapproval or controversy.

Since we’re on YouTube, I do want to include a study from 2018 by Rebecca Lewis on the platform’s “Alternative Influence Network”.

A few things to wrap up:

An excerpt from Rob Brotherton’s Why We Fall for Fake News.

And this rather hilarious “Rage Point” by Charlotte Kupsh