Of Equal disorder, Arabian Knights, What me-me worry and What the FOX.

MisDisMal-Information Edition #2

What is this? This newsletter aims to track information disorder largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive, etc. that already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition #2 of MisDisMal-Information.

Thank you special people!

Before getting into this edition's content, I do want to thank everyone who has subscribed over the last week. It takes a special group of people to ask for more bad news in today's world. Congratulations! You made the list.

Everyone uses information disorder equally, some just seem to be more equal.

Last week, I included some research by Syeda Zainab Akbar, Divyanshu Kukreti, Somya Sagarika and Joyojeet Pal titled Temporal Patterns in COVID-19 misinformation in India. I played around with their dataset (which they built on top of a database of fact-checks maintained by Tattle Civic Technologies) over the week. I specifically looked at the items annotated as "Culture" and tried to determine which side of the political spectrum they were meant to favour. Now, let me admit that this will be subjective. I classified the 39 debunked items based on:

Target: International or Domestic

Political Leaning: R (overtly nationalistic, anti-minority, pro-majority), L (pro-minority, anti-majority), NA (no political leaning could be attributed).

I excluded those targeted at an international audience and removed duplicates.

Here's what it looked like:

Now, do remember that this is a small sample set and that the culture category 'may' just be the most egregious case. If you are going to tweet this out, please share it responsibly, with the context.
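For anyone who wants to repeat the exercise, the tallying step described above can be sketched roughly as follows. This is only an illustration of the filter-then-count logic, not the script I actually used: the rows are hypothetical placeholders rather than entries from the real dataset, and the names `rows` and `tally_leanings` are made up for this example.

```python
from collections import Counter

# Hypothetical placeholder rows, NOT the real dataset.
# Each row is (claim text, target, political leaning).
rows = [
    ("placeholder claim A", "Domestic", "R"),
    ("placeholder claim A", "Domestic", "R"),        # duplicate, dropped below
    ("placeholder claim B", "International", "NA"),  # international, excluded below
    ("placeholder claim C", "Domestic", "L"),
    ("placeholder claim D", "Domestic", "NA"),
]


def tally_leanings(rows):
    """Count leanings for domestic items, keeping the first copy of each claim."""
    seen = set()
    counts = Counter()
    for claim, target, leaning in rows:
        if target != "Domestic":
            continue  # exclude items aimed at an international audience
        if claim in seen:
            continue  # remove duplicates
        seen.add(claim)
        counts[leaning] += 1
    return counts


counts = tally_leanings(rows)
```

The labels themselves still have to be assigned by hand, which is where the subjectivity comes in; the code only does the counting.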

Arabian Knights and Shadows

As it turns out, tweeting hateful content against the religion of the state you are residing in is among the not-too-smart things that a person can do. A number of Twitter accounts belonging to Indian users residing in the Middle East were deleted after sustained efforts to bring these to the attention of local authorities there. Some lost their jobs, some had to apologise. Many prominent Twitter users from the Middle East also began expressing concerns over the treatment of Muslims in India, as well as past statements made by many BJP members. Hashtags like "islamophobia_in_india" and "RSSTerrorists" started doing the rounds. I looked up diffusion charts for both of these on Hoaxy.

An account that featured prominently in the coverage of these instances was @AlGhurair98. I used a tool to look into its activity. The pattern seems odd.

Of course, it is important to point out that nothing has been reported specifically about its authenticity (or the lack thereof), unlike a number of other accounts that were found to be re-purposed and/or inauthentic. @Zoo_bear called some of these out.

ThePrint ran a story documenting many of them. While information operations were certainly a part of this whole story, how Swarajya then attributed 'most' of the activity to bots, trolls and people in Pakistan is not something I know.

Security agencies and independent social media users, after investigation, found that the recent hashtags like “Islamophobia in India” on Twitter, were mostly sourced to bots, trolls and people in Pakistan.

dAR-NA(B) Mana Hai

Another battle that played out was over whether Arnab Goswami was Anti-India or a 'boon for journalism'. Yes, that happened - sort of.

But I misrepresented that (haha!): the hashtag battle was between 'ArrestAntiIndiaArnab' and 'ISupportArnabGoswami'. For some reason, reaching a million tweets was considered a landmark.

Who needs POFMA?

POFMA, or the Protection from Online Falsehoods and Manipulation Act, is Singapore's anti-misinformation law, if I can use that term. The risk with such legislation is that it ends up criminalising information in general and not just misinformation in particular. Incidentally, POFMA was first used against an opposition politician.

So, who needs such laws? Well, not us for sure. The police in J&K booked journalists for 'fake news' and 'anti-national' posts.

What, me-me worry?

That a visual element helps make information operations more appealing has been studied. If you're a regular visitor to the sections of various social media platforms, you'll probably already know what I am talking about. And that's precisely why we should be worried. The Interpreter writes about the risks of these patterns and implications for elections in Asia after seeing how they've been used during the pandemic. Oh, and while you're here, also read this MIT Tech Review article on 'How memes got weaponised'.

Some other articles that touched upon Information Disorder in India:

Ritika Jain's story on the Spiral of Islamophobia

Suhas Borker on PSYOPS in India.

Remember Kanika Kapoor? Well, here's her version courtesy Outlook via Press Trust of India.

Sanna Balsari-Palsule writes in TheWire’s Science edition about How Behavioural Science Can Help India Overcome The Pandemic.

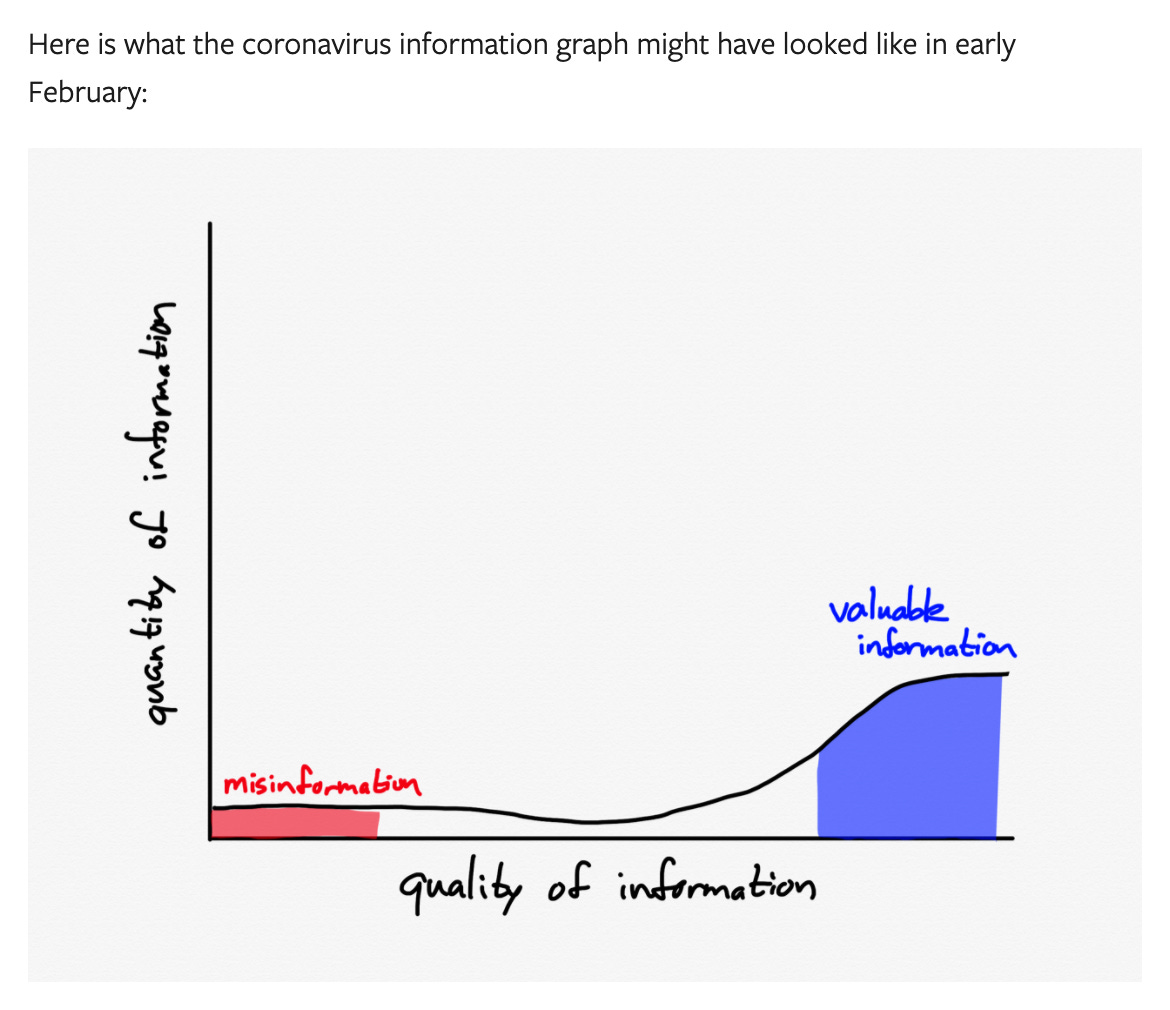

Prasid Banerjee in Livemint on Covid-19's misinformation curve.

"We are all exclusively COVID-19 content creators now"

Peak Information and Peak Disorder

I remember reading that tweet by Pranav Dixit back in mid-March and almost falling off my chair laughing. Because it was one of those funny things that make you laugh (obviously) and make you feel like you've been hit by a truth-brick at the same time (ergo, almost falling off the chair). So if we're all creating COVID-19 information, there is also going to be more COVID-19 related Information Disorder. Ben Thompson (Stratechery) laid this out with a series of illustrations in a post titled 'Defining Information'.

And finally,

In this context, it is also interesting to look at an article that Jeff Jarvis published on Medium about The Open Information Ecosystem. He contends that the Media no longer delivers information and that journalists should rethink how they add value.

What the Fox?

H/T to @pranaykotas for pointing me to this working paper - Misinformation During a Pandemic.

From the abstract

We study the effects of news coverage of the novel coronavirus by the two most widely-viewed cable news shows in the United States — Hannity and Tucker Carlson Tonight, both on Fox News — on viewers’ behavior and downstream health outcomes. Carlson warned viewers about the threat posed by the coronavirus from early February, while Hannity originally dismissed the risks associated with the virus before gradually adjusting his position starting late February.

And from the conclusion

We begin by validating differences in content with independent coding of shows’ transcripts. Consistent with the differences in content, we present new survey evidence that Hannity’s viewers changed behavior in response to the virus later than other Fox News viewers, while Carlson’s viewers changed behavior earlier. Using both OLS regressions with a rich set of controls and an instrumental variable strategy exploiting variation in the timing of TV consumption, we then document that greater exposure to Hannity relative to Tucker Carlson Tonight increased the number of total cases and deaths in the initial stages of the coronavirus pandemic. Moreover, the effects on cases start declining in mid-March, consistent with the convergence in coronavirus coverage between the two shows. Finally, we also provide additional suggestive evidence that misinformation is an important mechanism driving the effects in the data.

Bee-Tee-Dubs

No, no, I haven't discovered my inner millennial voice (or is it a Boomer term?). This is a section called Big Tech Watch. Why?

SaveTheDataSaveTheWorld: A collective of ~75 organisations and researchers wrote an open letter to social media platforms calling on them to store information related to automated content takedowns, so that future research can understand the impact of algorithmic content moderation. They cited the 'emergency powers' that the platforms effectively have. So it is only fair to dedicate some portion of MisDisMal-Information to Big Tech.

Let's start with Facebook.

Not linked to the pandemic (phew) but Reuters carried a story claiming that Facebook agreed to censor posts in Vietnam after its traffic was throttled (wehp - that's me taking back the phew).

An investigation by TheMarkup revealed that it had ‘pseudoscience’ as a targetable category.

It banned listings for anti-lockdown protest events in some states in the United States. David Kaye has a thread on why it isn't as black and white as it appears.

Twitter:

It updated its guidelines to cover 5G and COVID-19 related misinformation.

And while it has acted against tweets by politicians, it still says Donald Trump's Disinfectant Videos do not violate its policy. But it did block the 'InjectDisinfectant' hashtag. Go figure.

Google announced that all advertisers will be required to complete a verification process. It will start with the US, then expand globally. The process is expected to take a few years to complete.

Chinese whispers with the world

In Edition #1, I touched upon the more combative nature of official PRC presence on Social Media. Earlier in the week, Jessica Brandt from SecureDemocracy had an insightful thread. If you make it to the end, there's also a webinar on tracking these state-backed information operations on the coronavirus.

And again, there's a good write-up on this topic by Laura Rosenberger in Foreign Affairs (paywall).

A Confession from the writer's desk.

I had thought of doing this weekly and restricting it to 1500 words. But Information Disorder is such a high-growth industry that I have to push out some of the other research I had planned to cover to the next edition. Well, hopefully that gives you something to look forward to.

Bonus Content:

Cyberpeace post on How the COVID-19 Infodemic Facilitates Cyberattacks and a webinar on How Cyber Operations Transgress International Laws and Norms.

Motherboard's Cyber podcast had an episode with Thomas Rid where he talks about his book "Active Measures". During the episode, Rid makes the excellent point of not becoming a useful idiot for information operations by underestimating or overestimating (especially the latter) their effectiveness.

A great example of a weaponised fact-check.