Of GIFs, eww… disinfo? vac-scenes from around the world and everything is a content moderation problem

MisDisMal-Information 29

What is this? This newsletter aims to track information disorder largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive, etc. who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition 29 of MisDisMal-Information

Again, a number of images/gifs in this edition. So if you are blocking images in your mail client, I recommend viewing this in a browser (or downloading content for this edition).

I am starting off with 2 stories that I believe were important but did not get enough attention over the week.

GIFs and NayaBharatNet

Today, let’s start by talking about GIFs.

No, not that GIF,

Not that either, but the stress on the GIF means we’re getting closer. I am, of course, talking about a Great Indian Firewall - or what I’ve been referring to as NayaBharatNet so far.

But why now?

A lot is going on, so I actually missed this a few days ago:

The article (Surajeet Das Gupta) in the Business Standard led with:

The government has sent notices to Chinese apps including TikTok and others, making the interim ban on these apps now permanent.

And then, some days later, Mint reported (Shreya Nandi, Prasid Banerjee) something similar, this time supported by an anonymous quote:

“The government is not satisfied with the response/explanation given by these companies. Hence, the ban for these 59 apps is permanent now," the person cited above said, adding that the notice was issued last week.

I haven’t come across the actual text of the notices yet (if you have, please be a good samaritan and share it), but this seems to have been followed by TikTok cutting [Manish Singh - TechCrunch] a large number of jobs (possibly over 1,800 [Jai Vardhan - Entrackr]). So, clearly, the notice they received didn’t leave much room for optimism.

Ok, Prateek - where are you going with this?

It is simple, really, but not something I’ve seen called out. If bandemic 1 is now permanent, this means we now have a set of services that cannot be accessed from India on the basis that they were ‘engaged in activities, which is prejudicial to sovereignty and integrity of India, defence of India, security of state and public order’ [PIB notification, 29 June 2020].

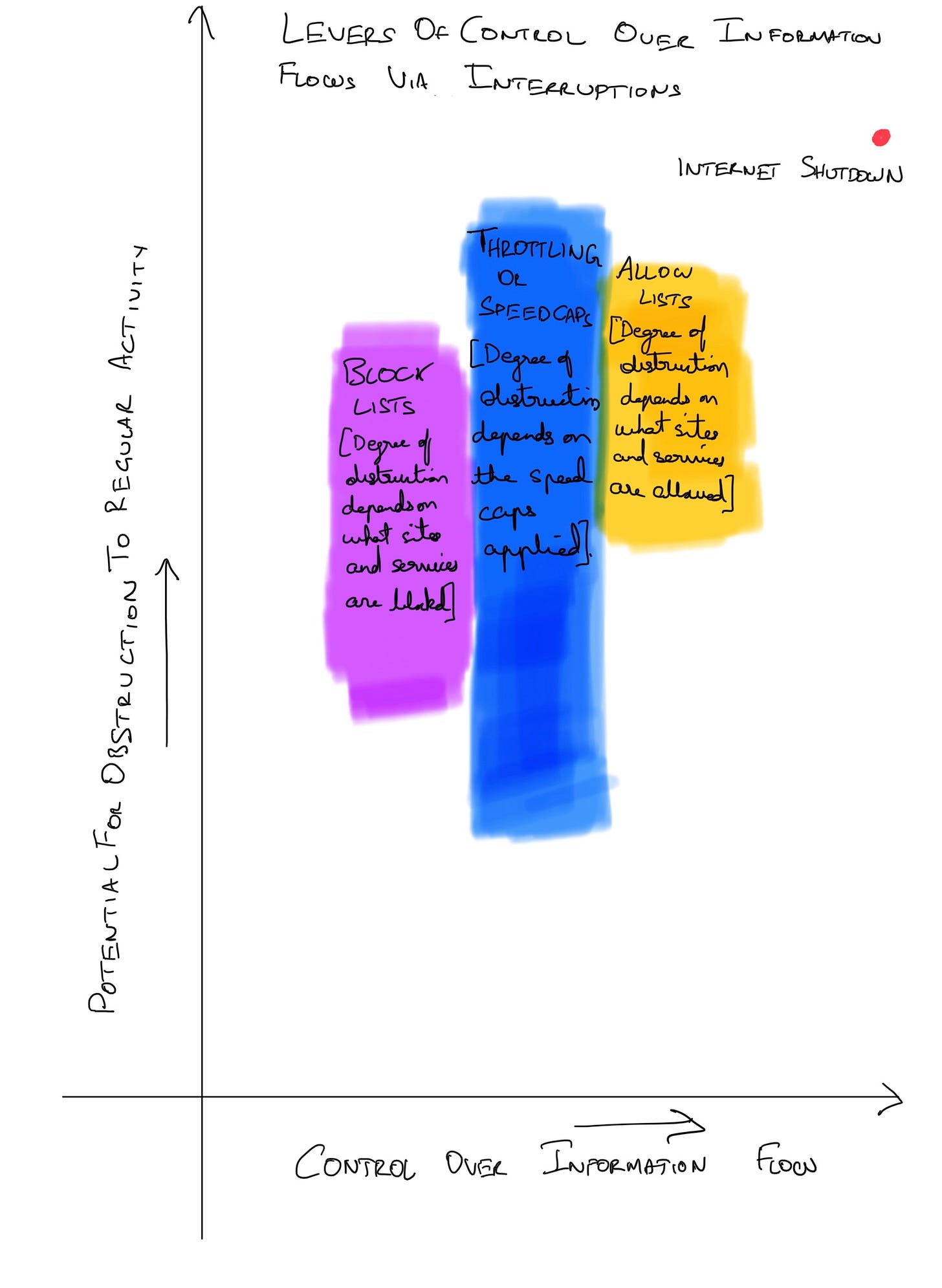

Now, let’s digress for a bit. I was trying to figure out how to represent the different levers available to states to control the flow of information (and, subsequently, narratives) by imposing restrictions on the Internet.

And while this was done in the context of an Internet Shutdown (I’ll come to that later), it can be applied in the context of a sovereign internet too. Note that I have limited this to ‘control’ over ‘flow’ and not ‘interception’, which would take us into encryption-breaking territory.

China’s Great Firewall - can be considered (simplistically) a combination of “block-lists” and throttling (not in the same way we do it; the slowdown is likely a byproduct of attempts to intercept internet traffic for things like keyword filtering). This effect is more pronounced if a user is trying to use a website/service hosted in the U.S. or Europe rather than one that runs its operations in Mainland China.

Russia’s RUNET - would be closer to the allow-list category, since the goal is for it to be able to cut itself off from the global internet, meaning that only specific websites/services would be accessible (without some degree of tinkering).

Iran’s work on a ‘National Intranet Network’ (ongoing since 2013/2014) would also eventually allow it to have a functional ‘domestic internet’. After a prolonged (not by our standards) week-long internet shutdown in November 2019, the Iranian government was believed [Ryan Daws - TelecomsTechNews] to be working with state-run companies to make a list of ‘foreign websites’ they relied on.

Some context: I did spend some time managing Products for China and Russia at a Content Delivery Network.
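To make the block-list vs allow-list distinction concrete, here is a minimal Python sketch of the two default policies. The domain names are made-up placeholders (not any country's actual list), matching is by hostname and parent domain only, and real national filters also operate at the DNS, IP and deep-packet-inspection layers, which this sketch ignores.

```python
from urllib.parse import urlparse

# Hypothetical placeholder domains, not any government's actual list.
BLOCK_LIST = {"blocked-app.example", "another-blocked.example"}
ALLOW_LIST = {"domestic-portal.example", "bank.example"}


def hostname(url: str) -> str:
    """Return the lowercase hostname of a URL ('' if there is none)."""
    return (urlparse(url).hostname or "").lower()


def listed(host: str, domains: set) -> bool:
    """True if host is a listed domain or a subdomain of one."""
    return any(host == d or host.endswith("." + d) for d in domains)


def block_list_permits(url: str) -> bool:
    """Default-open: reachable unless the host is on the block-list."""
    return not listed(hostname(url), BLOCK_LIST)


def allow_list_permits(url: str) -> bool:
    """Default-closed: reachable only if the host is on the allow-list."""
    return listed(hostname(url), ALLOW_LIST)


if __name__ == "__main__":
    for url in ["https://news.example/story", "https://m.blocked-app.example/feed"]:
        print(url, block_list_permits(url), allow_list_permits(url))
```

An unlisted news site passes the block-list check but fails the allow-list check. That asymmetry is arguably why an allow-list regime breaks far more of the internet by default than a block-list one.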

Ok, let’s go back to the 59 apps: we now have a ‘block-list’ of websites and apps that cannot be accessed from India, permanently. This is, in my mind, the formalisation of an Indian Firewall. In the short-ish term, we’re still far away from China, Russia, Iran territory. But that doesn’t mean we won’t get there in the long term. A domestic internet isn’t technically feasible today without breaking a lot of things, but it won’t always be this way. Also, put this in context with the stress on data localisation, fits of data nationalism, bursts of ban-happy hashtags calling for foreign companies to be removed from the country, India’s position on ‘cyber sovereignty’, etc. And I should remind you that we have also experimented with an allow-list in Jammu and Kashmir, which didn’t really work [Rohini Lakshané and, well, me - Medianama].

This hasn’t been challenged in court by the companies themselves (contrast this with how prepared [Mike Isaac, Ana Swanson - NYTimes] TikTok was to go to court in the U.S.) or anyone else - even though there has been no demonstration of how these services in particular were engaged in the activities that are prejudicial to sovereignty and integrity of India. [I should add that in researching this, I found a follow-up feature dated 4th July 2020 attributed to MEITY which referenced a researcher’s use of fitness tracking data from Strava to determine locations of military bases - as an example of threat to national security]

From a piece where Scott Malcomson [Rest Of World] takes a rather bullish view of the splinternet (making the case that it will actually mean less Chinese control of the internet). Needless to say, I don’t share that sentiment.

… leaving aside technical concerns, the U.S. and Europe have been expanding their control over internet content for years, mostly in the name of privacy, anti-terrorism, drug-law enforcement, and taxation. The same has been true in countries such as India, China, and Russia. The movement toward state control, which is inherently a de-universalizing force, could not be clearer.

And in a Livemint article highlighting the differences in contracts available to Indian and European users of WhatsApp, Shweta Taneja ends on this sobering note, which applies here as well:

The global internet, if it was ever alive, is now dead. Having experienced the downfall of the idea of the freedom that internet provides, citizens of the world now want the powers of the tech companies curtailed. However, privacy and power come to select people, as countries scramble for more rights for their own citizens.

As the pandemic brings borders back into fashion and citizens look inwards, the internet’s future, ruled by for-profit companies, will continue to be chopped up by regulators, governments and laws in order to curtail the power tech companies have begun to have on our lives.

Frying pan, fire, and all that.

EWW… Disinfo?

In early December, EU Disinfo Lab published an investigation they called ‘Indian Chronicles’ which, as per them, spanned a 15-year period, resurrected 10+ UNHRC-accredited NGOs and even the late Louis B. Sohn (who died in 2006), and had a network of 750+ fake media outlets spanning 119 countries.

Phew, that’s quite a bit. I won’t go into specifics (because my main point is actually adjacent to this investigation itself) but there are a few points to note:

This was a follow-up to a 2019 investigation which uncovered another fictitious organisation (EP Today).

They attributed both these operations to the Srivastava Group (who also organised the ‘tour’ of Members of the European Parliament to Jammu and Kashmir).

Interventions of these NGOs were mainly pro-India and anti-Pakistan.

Notably, it also implicated ANI in the way it non-transparently passed off some Op-eds as reports - which were further amplified by other mainstream publications (likely as part of their syndication agreements).

Just like the 2019 investigation, this got some coverage in the Indian press for a few days and generated some chatter on social media (mainly due to ANI) before it seemingly faded out of collective memory [this is opinion, I cannot quantify this - I tried date range searches on Google News but since there is no way to filter by a set of publications it wasn’t particularly useful].

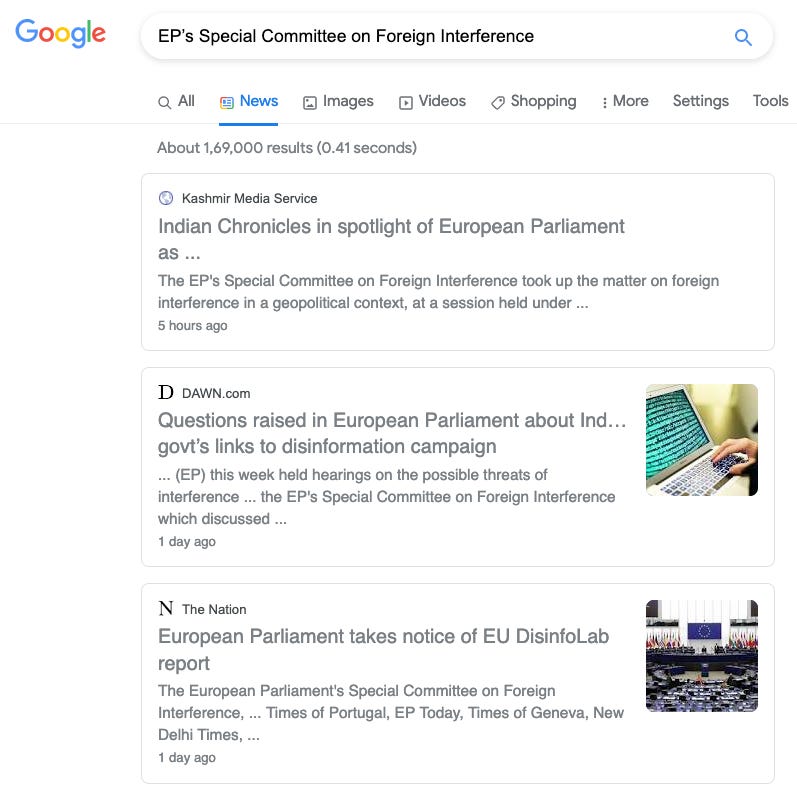

But that’s not what this section is about. On 26th January, a committee of the European Parliament took this item up.

Did any Indian outlets cover it? Doesn’t seem like it. It was mainly covered by outlets that seemed to be from Pakistan (not surprising).

Here’s a Google News search from 28th Jan.

And here’s a link to a date-filtered search from January 29th, going back a week. One would think journalists on the diplomacy beat would consider this worthy of a cursory mention at the very least? I guess no one wants to touch information disorder when we’re implicated. It’s like …

Oh, there’s a video too (the 2nd half pertains to India). 2 MEPs even seemed to intervene on India’s behalf. Surely, someone could have scored some nationalism and propaganda points, no? [I don’t mean that seriously, do not do that!]

There’s also a broader point here. Influence operations can be found wherever you look for them (if you look hard enough and are smart enough, obviously) - we just seem to hear a lot more about Russian, Chinese and Iranian ones today because a lot of resources are focused on looking there. In fact, Mahsa Alimardani, who spoke about Iranian influence operations before the EUDisinfo team, said (in the context of an overemphasis on Iran) [from ~14:22:30 in the video]:

There are a lot of entities within the cyber security space, the disinformation space that have motivations to focus on Iran. There is various geopolitical interest that has oversaturated funding in the space of studying Iran and often times there is more of a focus on Iran than there should be on other countries…

On the one hand, it is positive to note that there is any sort of ‘over saturation’ in funding to focus on influence operations. But I’ll be very surprised if some of these funds aren’t diverted to studying India as we continue a rightward swerve. Playing ostrich with our heads in the sand isn’t going to cut it as a defence against such accusations. Neither is whatever this headline is:

Related: I think I’ve mentioned this before, but I’ll throw it in here again anyway:

In Active Measures, Thomas Rid says that democracies cannot be good at disinformation.

(I don’t think I’ve referenced this earlier) In an episode of The Lawfare Podcast titled ‘Can Democracies Play Offense on Disinformation?’, Alina Polyakova and Daniel Fried recommend (there’s also a paper):

Cyber tools to identify and disrupt foreign disinformation operations.

Sanctions/Financial tools against disinformation actors and their sources of funding

Supporting free press/media.

Vac-scenes from around the world

States and Union Territories have been urged to use the Disaster Management Act and Indian Penal Code to take action against people for spreading misinformation about the COVID-19 vaccines [Yahoo! News].

Related 1: Threats not the best way to fight vaccine hesitancy [Rema Nagarajan - TOI]

Related 2: In an article for Scroll, Sandhya Srinivasan, Amar Jesani & Veena Johari call on the government to disclose details of investigations into 9 deaths since the start of the vaccine rollout.

Related 3: This article about health-workers avoiding vaccination should have done a better job of contextualising with overall numbers rather than relying purely on anecdotes and narrow example sets. Potentially, this one too.

EUvsDisinfo (not the same as EU Disinfo Lab referenced earlier) documents Kremlin media campaigns against the Pfizer vaccine [Telling half truths is also lying]

Pro-Kremlin disinformation outlets are mastering the art of almost not lying. Several outlets in the disinformation ecosystem are carefully reporting on virtually every case of recorded side-effects of the Pfizer vaccine, while just as carefully avoiding any reports on issues with the Russian Sputnik V vaccine.

ASPI’s Ariel Bogle and Albert Zhang highlight examples of China’s and Russia’s influence campaigns that risk lowering trust in COVID-19 vaccination programs. India is name-dropped in the first paragraph, and there is a reference to Covaxin later (emphasis mine).

As the world bets on vaccines as a way out of the Covid-19 pandemic, vaccine candidates have quickly become a vehicle for national influence. China, Russia and India, for example, are each quick to publicly celebrate, through traditional media and official social media accounts, every new (<- link to tweet by Narendra Modi) country that signs up to use their vaccines.

…

Our analysis of 455,940 tweets mentioning vaccine-related terms between 11 January and 19 January found an overall shift towards negative sentiment on vaccines following the Norwegian Medicines Agency report. This suggests that negative portrayals of one vaccine might erode public trust in all vaccination programs. Tweets mentioning ‘pfizer’ had the greatest relative increase in negative sentiment across the eight-day period. Sputnik V and Covaxin both also had highly variable sentiments reflecting polarising debates over each vaccine on Twitter.

An IANS wire story in Business Standard states that 92 countries have approached India for COVID-19 vaccines.

Ninety-two countries have approached India for Covid-19 vaccines, bolstering New Delhi’s credentials as the vaccine hub of the world.

Highly placed sources said that scores of countries are approaching India, as the word spreads that Indian vaccines are showing negligible side-effects, since the immunization drive in the country began on Saturday.

Just ignore this line at the end “(This content is being carried under an arrangement with indianarrative.com)”.

Indonesia and Malaysia are dealing with COVID vaccine hesitancy too [Amy Chew and Vasudevan Sridharan - SCMP].

Indonesia

A Ministry of Health survey in November 2020 found the vaccine acceptance rate was only 45-74 per cent, said Muhammad Habib Abiyan Dzakwan, a researcher from the Centre for Strategic and International Studies’ (CSIS) disaster management research unit. That same month, a Unicef survey found that Aceh had the lowest vaccine acceptance in the country at just 46 per cent, while 30 per cent of the population doubted Covid-19 vaccines.

“It was never fully supported … this might add layers of homework for the government, as they not only [have to] convince people at the grass roots level but also national politicians,” said Habib.

Malaysia

A Ministry of Health online survey in December found 17 per cent of the 212,000 respondents were unsure of the Covid-19 vaccines’ efficacy, while 16 per cent said they would reject the vaccine.

The Philippines too [Alan Robles and Raissa Robles - SCMP]:

In November, a poll conducted by Pulse Asia Research Inc showed that only 32 per cent of respondents in the Philippines were willing to receive a Covid-19 vaccine.

Almost half of the 2,400 people surveyed said they would skip immunisation, while 21 per cent could not say what their decision was. Many of those who didn’t want to get inoculated said the main reason was they were not certain of the vaccine’s safety.

Alice Lu-Culligan and Akiko Iwasaki in NYTimes about vaccine-related rumours leading to hesitancy among women.

Cécile Guerin in WIRED UK about the overlap between yoga instructors, anti-vaxx content and QAnon.

As a yoga teacher in my spare time, I’m part of an online community of wellness enthusiasts. But in my day job, as a researcher at the Institute for Strategic Dialogue, I monitor the spread of online disinformation and conspiracy theories.

…

The same conspiracy theories I was tracking at work began appearing in my personal Facebook and Instagram feeds, shared by accounts I followed, by influencers suggested to me, and sometimes by acquaintances.

The researcher in me became curious – I started digging. Instagram and Facebook’s algorithms took me to new corners of the yoga, wellness and holistic health world. In the early days of lockdown, I saw posts about how juices, miracle cures and turmeric could boost my immunity and ward off the virus. As the pandemic intensified, disinformation became darker, from anti-vaxx content and Covid denialism to calls to ‘question established truths’ and wilder conspiracy theories.

Meanwhile in India

Note: The violence at Singhu is a developing situation, so I will not be covering it, as things are likely to change between the time I write this and when it reaches your inbox.

This is a classic example of how engagement and group-dynamic incentives on social media can get the better of pretty much anyone: after a lot of confusion over whether the President had unveiled a photo of Subhas Chandra Bose or not, the matter was settled by Bose’s grandnephew [Pratik Sinha - AltNews]. “Think before you tweet” says a TOI Editorial.

An FIR was lodged against 3 journalists (Mohit Kashyap, Amit Singh and Yasin Ali) in Kanpur Dehat district for “fake news” about students of a government school shivering in the cold during an event held on January 24 on the occasion of Uttar Pradesh Foundation Day (UP Diwas) [Asad Rehman - The Indian Express].

An FIR has been filed in Uttar Pradesh against Shashi Tharoor and 6 journalists (Rajdeep Sardesai, Mrinal Pande, Zafar Agha, Anant Nath, Paresh Nath, and Vinod K. Jose) for misleading tweets [The Hindu].

In the FIR, registered in Sector 20 police station of Noida, the police have invoked sections 153 A (promoting enmity between different groups), 153 B, 295A (malicious acts to outrage religious feelings), 298 (deliberate intent to wound religious feelings), 504, 506, 124A, 120B (criminal conspiracy) and Section 66 of the IT Act 2000.

In Bihar, after criticism for a letter instructing officers of the Economic Offences Unit to take legal action against people for making ‘offensive’, ‘indecent’ and ‘misleading’ statements against MLAs, MPs, government officials etc. through social media, the state police clarified that “‘constructive criticism was welcome’ and only posts spreading rumours and using ‘insulting language’ would be targeted.” [Manish Kumar - NDTV]

Another copypasta campaign (small and unsuccessful this time), aimed at discrediting the farmer protests. Most of the accounts have been deleted or have gone private/protected (the high-profile ones), but you can see what’s left at this Twitter Search Link. I discovered it through this tweet.

Farm-vil-ification

On 26th Jan, an Internet Shutdown was executed in specific parts of Delhi, and the rest of the NCR region was throttled to 2G (with a maximum prescribed speed of 64 kbps).
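For a rough sense of what a 64 kbps ceiling means in practice, here is back-of-the-envelope arithmetic in Python. The 5 MB video clip is a hypothetical example size, and the calculation ignores protocol overhead, latency and congestion, so real transfers would be slower than this best case.

```python
def transfer_seconds(size_megabytes: float, speed_kbps: float) -> float:
    """Best-case time to move a file at a constant link speed.

    Uses decimal units (1 MB = 1,000,000 bytes; 1 kbps = 1,000 bits/s)
    and ignores protocol overhead, latency and congestion.
    """
    bits = size_megabytes * 1_000_000 * 8
    return bits / (speed_kbps * 1_000)


# Hypothetical 5 MB video clip at the prescribed 64 kbps maximum.
seconds = transfer_seconds(5, 64)
print(f"{seconds:.0f} s (about {seconds / 60:.1f} minutes)")
# prints "625 s (about 10.4 minutes)"
```

More than ten minutes per short clip, at best, makes sharing on-the-ground video from the affected areas impractical, which is one way throttling can tilt who gets to shape the narrative.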

As I referenced earlier, this can result in lop-sided control over narrative. Ajay Joshi of Tribune reports in the context of diaspora support for the movement:

We have lost contact with them in these two days. Farmers back home told us on voice calls that the NRIs were confused about the entire episode. Having received videos and photos of the incident through leading news channels, some NRIs have also condemned the events of the day. They still seem to be divided over the issue.

The Delhi police said that 308 Twitter handles were created in Pakistan from January 13th to 18th to ‘disturb farmers’ tractor rally’ [ThePrint via ANI]. As I mentioned earlier, I don’t doubt we’ll see evidence of an influence operation in the mix somewhere. But such opaque announcements don’t help researchers (or anyone) trying to understand this better.

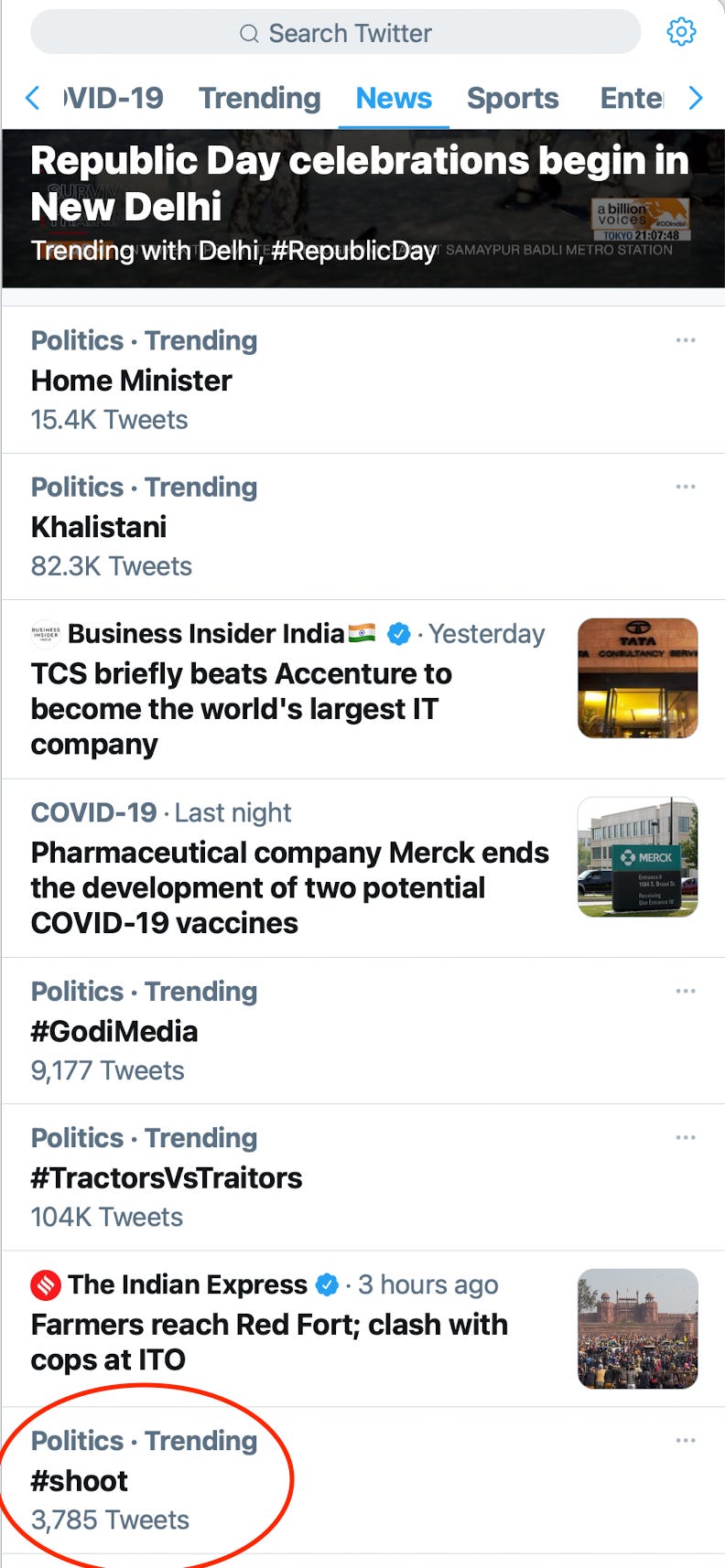

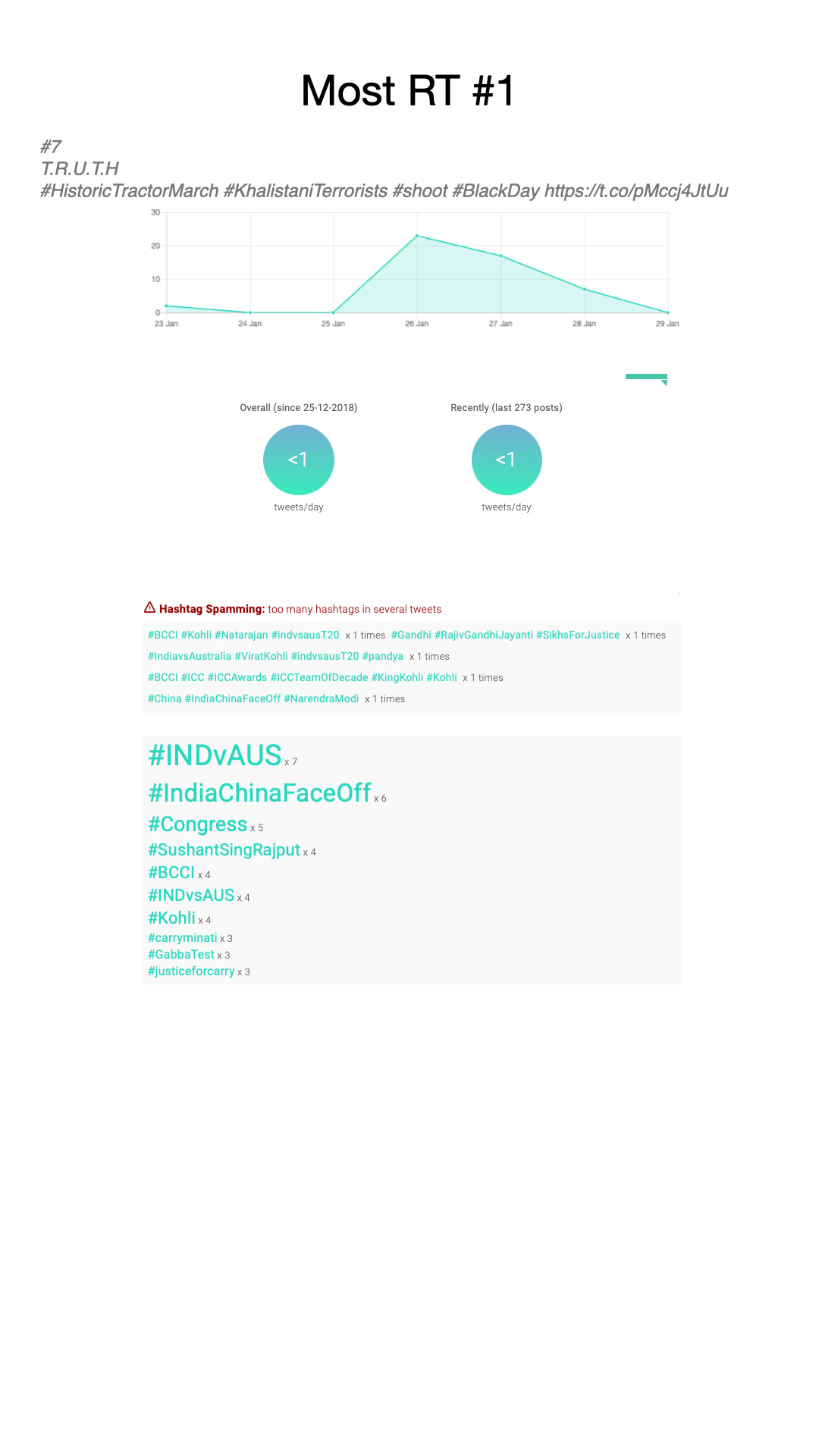

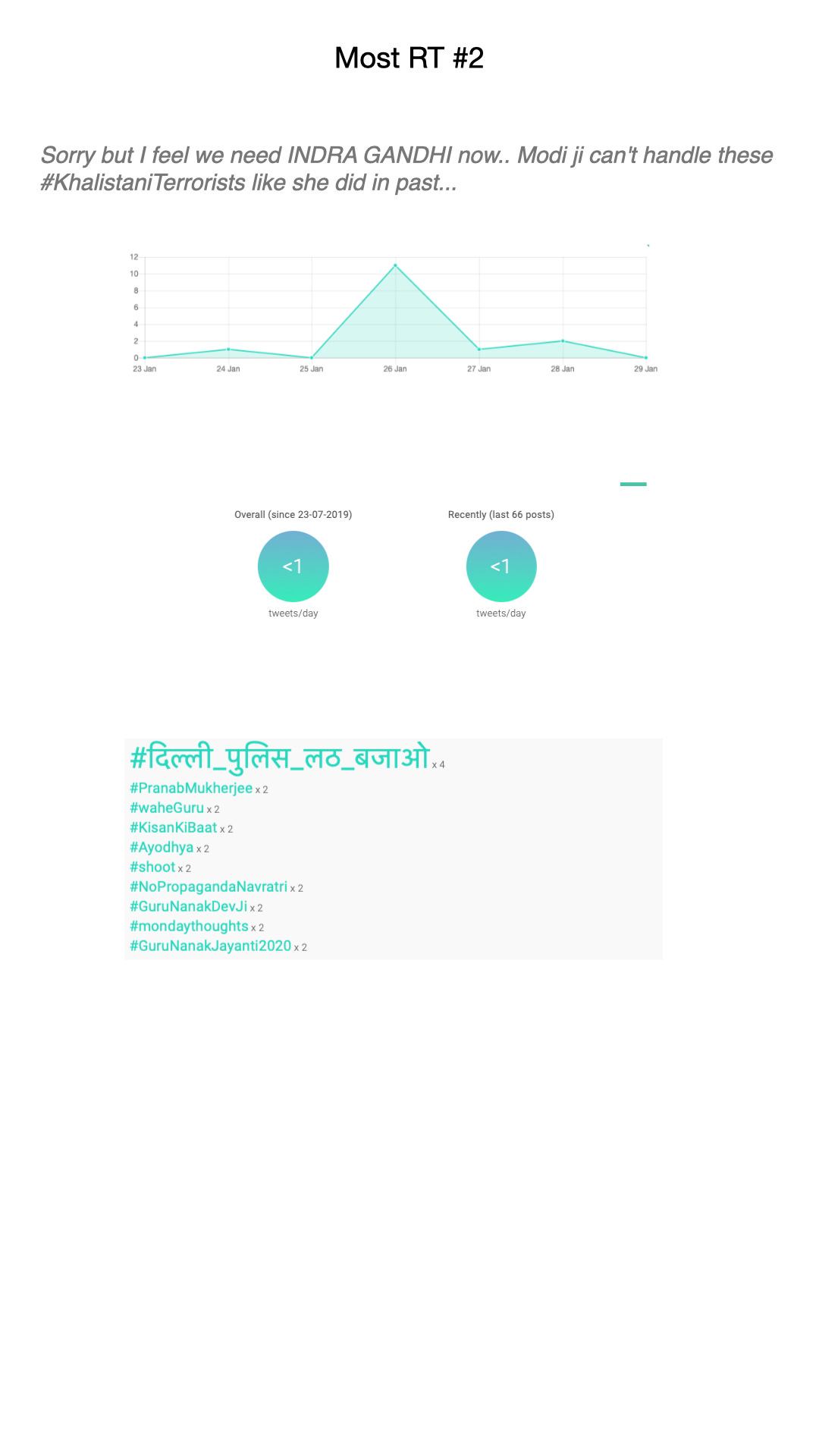

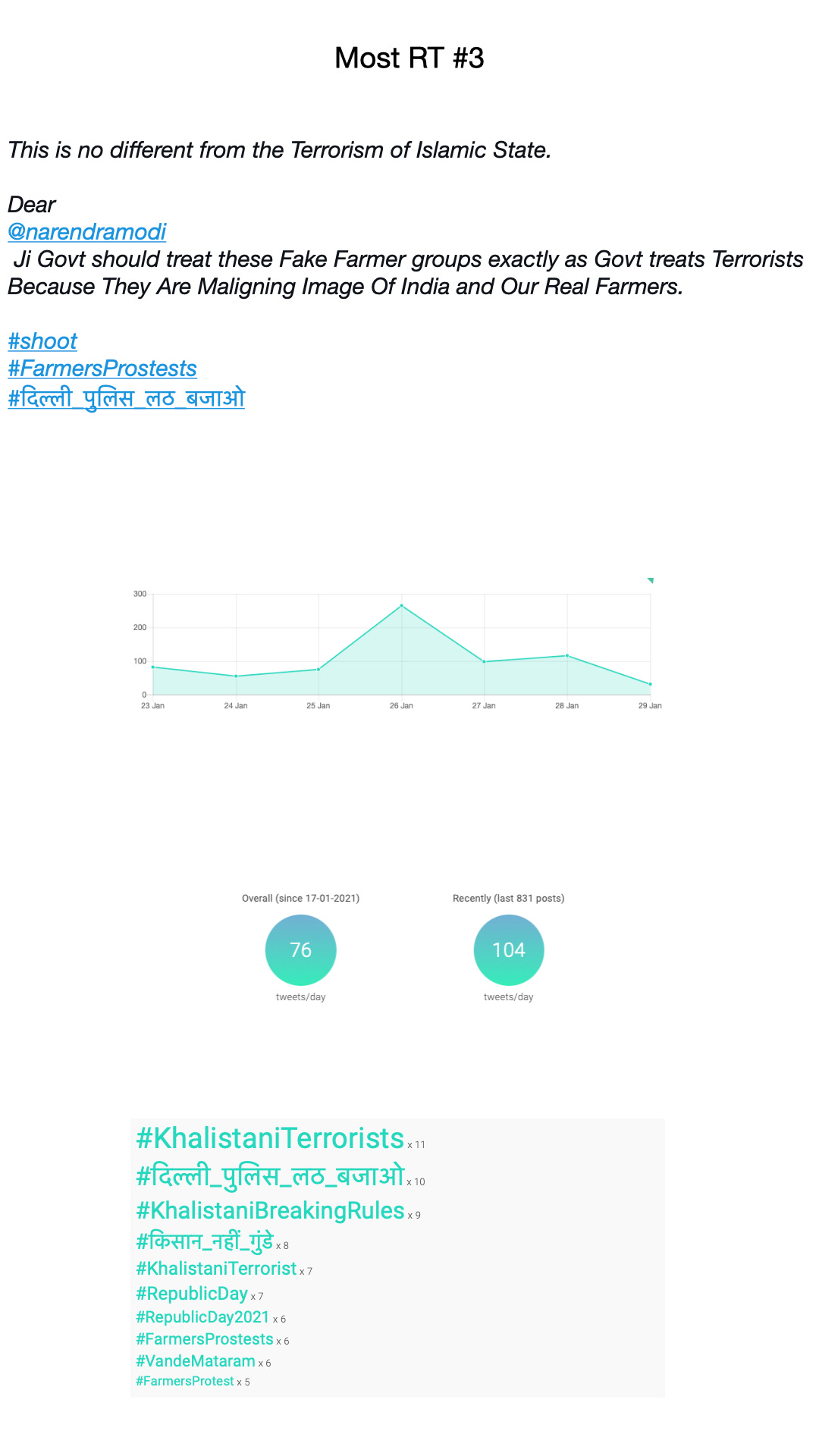

As things turned violent, the term ‘Shoot’ started trending on Twitter. Pranav Dixit, for BuzzFeed News:

On Tuesday morning, “Shoot” was one of the top trending topics on the platform in India, in addition to the Hindi phrase “Dilli Police lath bajao” — which loosely translates to “Delhi Police, hit them with your batons."

…

A day later, Twitter issued a new statement saying that it suspended more than 300 accounts engaged in spam and platform manipulation.

The number of accounts later went up to ‘over 550’ [ANI via New Indian Express]:

"We have taken strong enforcement action to protect the conversation on the service from attempts to incite violence, abuse, and threats that could trigger the risk of offline harm by blocking certain terms that violate our rules for trends," the spokesperson said.

"Using a combination of technology and human review, Twitter worked at scale and took action on hundreds of accounts and Tweets that have been in violation of the Twitter Rules, and suspended more than 550 accounts engaged in spam and platform manipulation," the spokesperson told ANI.

Twitter certainly did flag at least one video citing its manipulated media policy [I won’t link to it here but I have added it to my Labeled Tweet Repository on Notion and Twitter Moment where I am trying to document these].

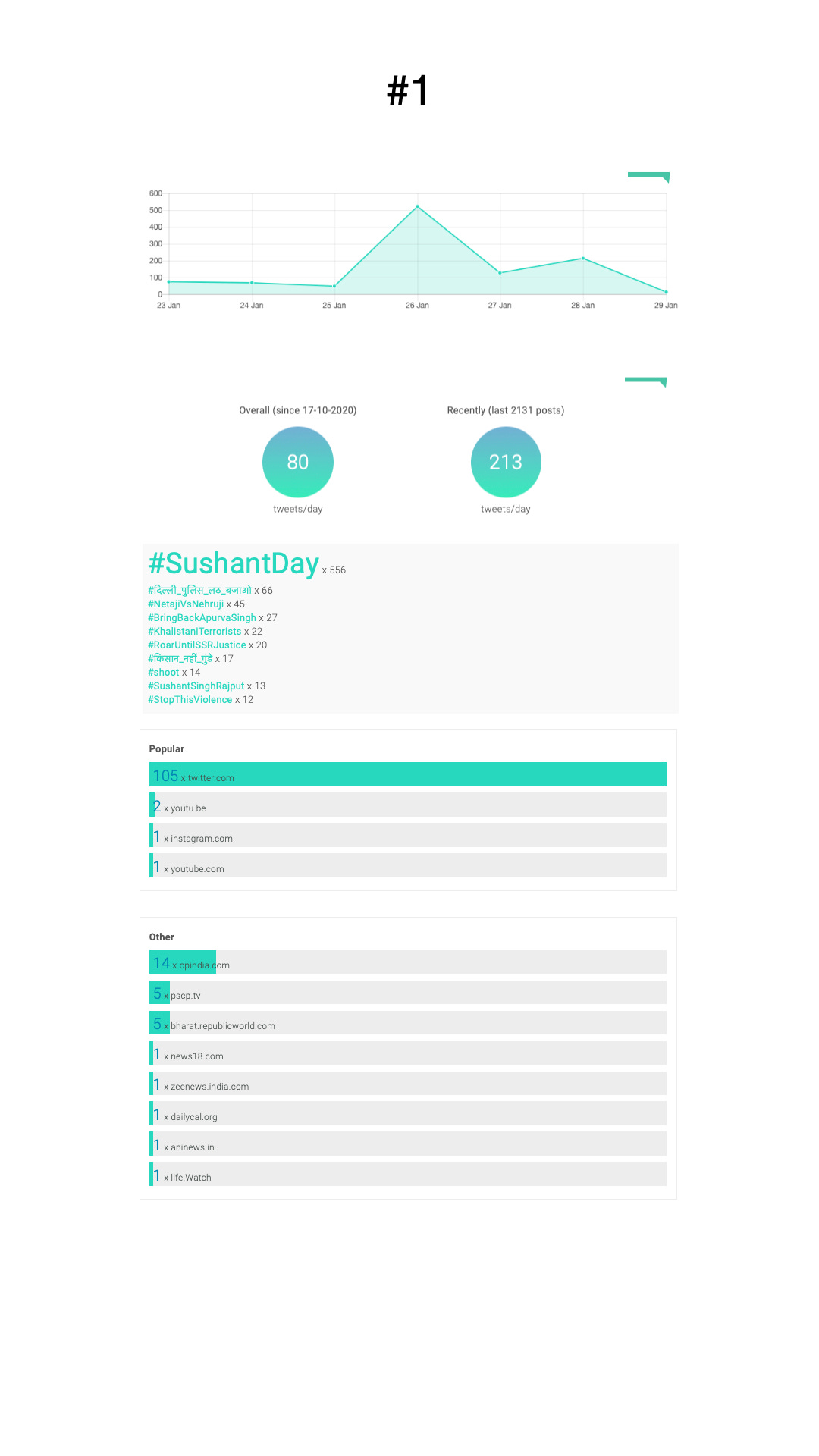

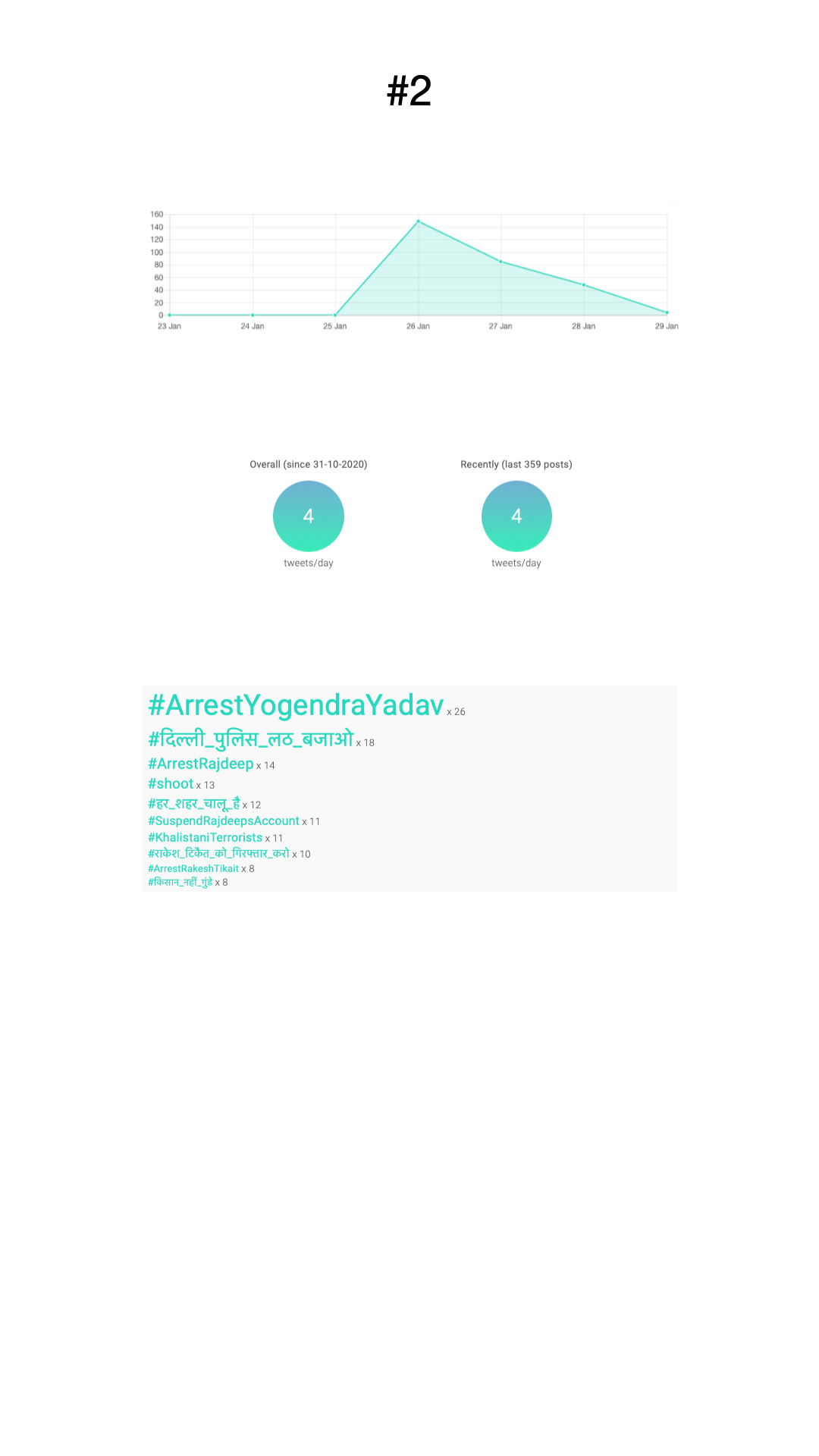

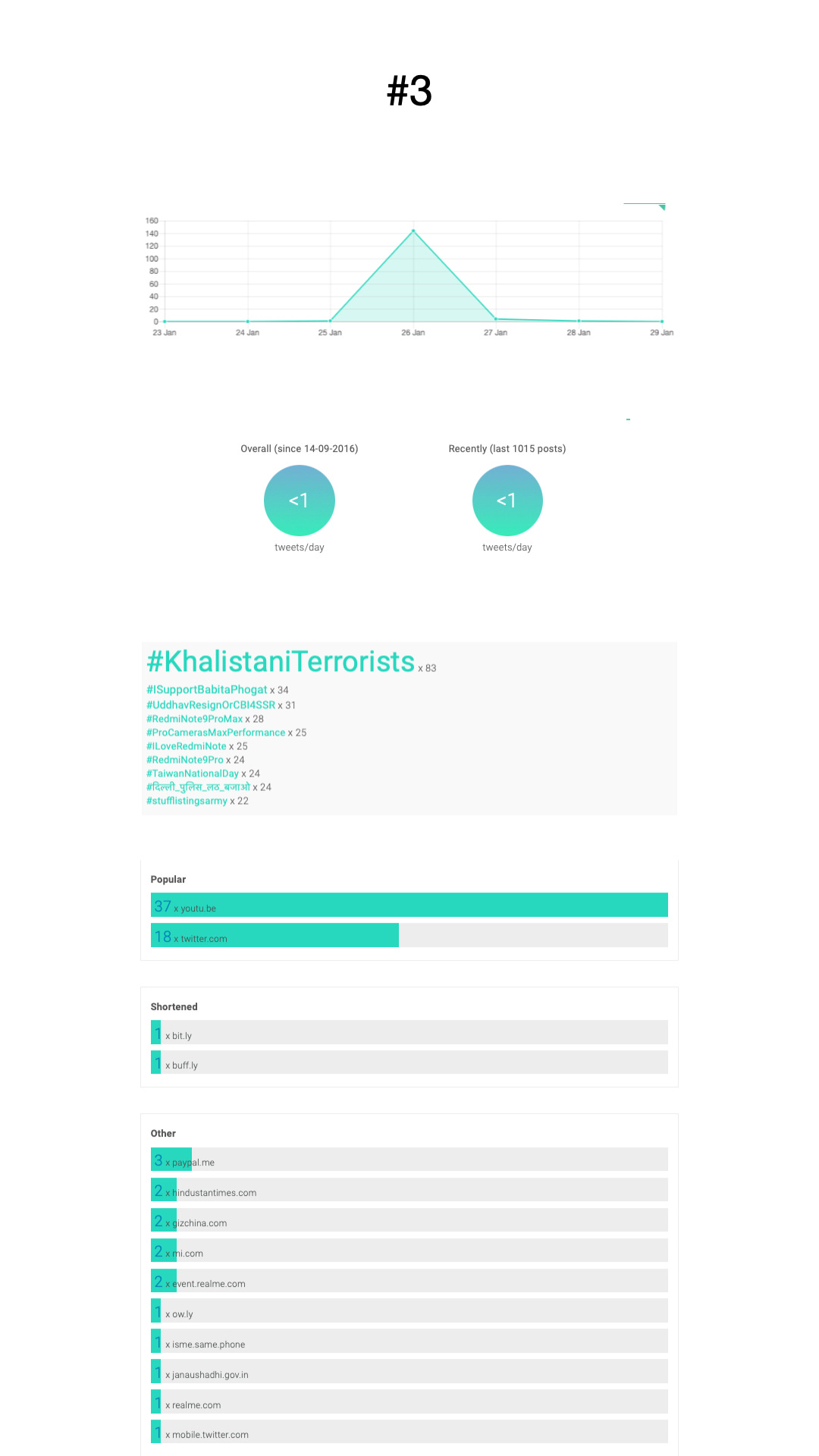

I also happened to extract some tweets just as the hashtag shoot was picking up (yes, it was a hashtag too). This batch consisted of ~3000 tweets. I looked at the date on which most of the accounts were created (26th Jan 2021; my previous caveat about number of accounts vs engagement still applies), the accompanying hashtags (not pretty reading) and whether the accounts with the most tweets and most retweets were still around (they were).
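For anyone wanting to repeat this kind of check, here is a minimal Python sketch of two of those tallies: accounts by creation date (counting each account once, so prolific accounts don't inflate the headcount) and the most active accounts in a batch. The input shape is an assumption, modelled on Twitter API v1.1 tweet objects with a nested `user` carrying `id`, `screen_name` and `created_at`; other collection tools will need different field names or date formats. It is an illustrative sketch, not the script behind the analysis above.

```python
from collections import Counter
from datetime import datetime

# Assumed timestamp format, as in Twitter API v1.1 objects,
# e.g. "Tue Jan 26 10:15:00 +0000 2021". Adjust for other sources.
CREATED_AT_FORMAT = "%a %b %d %H:%M:%S %z %Y"


def accounts_by_creation_date(tweets):
    """Count each account once, keyed by the date (UTC) it was created."""
    created = {}
    for tweet in tweets:
        user = tweet["user"]
        # Deduplicate by account id: this measures accounts, not engagement.
        created[user["id"]] = user["created_at"]
    return Counter(
        datetime.strptime(ts, CREATED_AT_FORMAT).date().isoformat()
        for ts in created.values()
    )


def top_tweeters(tweets, n=5):
    """Accounts with the most tweets in the batch (engagement, not headcount)."""
    return Counter(t["user"]["screen_name"] for t in tweets).most_common(n)
```

Keeping the two counts separate reflects the caveat above: a spike in new accounts on one date and a handful of very active accounts are different signals.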

Possibly unrelated to Twitter’s actions (no way of knowing thanks to a lack of transparency), a number of accounts of Sikh/Punjabi news and analysis sites, activists, etc. were taken down.

And in the lead-up to protests on 26th January, there was confusion after the Ghazipur police ‘allegedly issued notices to petrol pumps asking them to not sell fuel to tractor owners’. [Omar Rashid - The Hindu]

After receiving criticism on social media, Ghazipur police issued a clarification on Sunday saying that no such order or direction was issued in the district. However, referring to a specific police station, Ghazipur police said that a notice had been issued “by mistake” from the Suhwal thana (station) but the order was immediately withdrawn.

On Monday, the Supreme Court issued notice to the Center seeking its views on a regulator for TV News [ANI via BusinessWorld].

Related 1: Claire Wardle on how TV News Failed on Electoral Fraud Claims in the US:

The news media need to stop focusing on or responding to individual false or misleading claims one by one. Instead, there needs to be a shift in attention to the power of the larger narratives that those individual claims construct.

Researchers need to assess—empirically—how the news media amplify rumors and baseless assertions.

The news industry needs to stop tying itself in knots about whether to cut away from someone making false claims. It’s time to talk about new strategies for covering falsehoods, including new reporting styles, graphics, and guidelines.

Related 2: Ariana Pekary, the CJR public editor for CNN, calls for an end to the panel discussion format.

The actual cost of this simple format is one the public cannot afford: the loss of fully informed and civil conversation.

Related 3: An oldie, from a 2012 Caravan Magazine article on English news broadcasting in India:

Investigative reporting is more expensive. “It costs me two to three lakh rupees to produce a two-minute story,” he said. Most broadcasters do not have the resources and patience to send teams on assignment to remote parts of the country; they prefer, instead, to bring in expert panelists to debate the most popular topics of the day. That “costs almost nothing”, the journalist said.

In the end, many broadcasters are left without the financial resources or talent needed to produce original journalism.

KN Govindacharya has approached the Delhi HC to implead the Ministry of Information and Broadcasting in an ongoing petition ‘seeking removal of fake news and hate speech on social media’ [ANI]

Alaphia Zoyab, writing in Rest of World, raises the important question of social media platforms’ double standards when it comes to policy enforcement, in the context of Trump’s deplatforming v/s the treatment of a number of Indian politicians.

There’s an obviously cynical conclusion to make: Donald Trump has lost his political power, while Kapil Mishra is only gaining his. The way that Facebook and Twitter have treated these two politicians highlights the fact that the platforms exist to protect the powerful and their own profits over everything else.

…

The problem is not freedom of speech— that is sacrosanct. The problem is that, in the pursuit of profit, social media giants will amplify incendiary voices, giving them the freedom to reach more followers.

…

Social media algorithms are the automated editors that decide what you see in your feeds. They are built on massive volumes of data that platforms extract by surveilling everything their users do online. These algorithms are built to maximize our attention and keep us clicking and scrolling so that people can be shown more ads. This addictive design has not been programmed to discern what is true or what is false, what is harmful or what is not.

And that’s the perfect segue into the next section.

Big Tech and Model Watch

On the effectiveness of behavioural ads, based on a week-long study by researchers at the University of Minnesota, University of California, Irvine, and Carnegie Mellon University [Keach Hagey, WSJ]:

publishers only get about 4% more revenue for an ad impression that has a cookie enabled than for one that doesn’t.

…

“It is a huge finding in terms of the policy debate,” said Ashkan Soltani,

…

“All of these externalities with regard to the ad economy—the harm to privacy, the expansion of government surveillance, the ability to microtarget and drive divisive content—were often justified to industry because of this ‘huge’ value to publishers,” Mr. Soltani said.

A study by a researcher at Forrester claimed that firms like Adidas, Birchbox, Best Buy and Clorox that participated in the Facebook ads boycott saw minimal impact to their revenues. Given the business model of these companies [sales rather than advertising], this may seem unrelated, but I believe the conclusion the research points towards is that most ads end up targeting cases where a sale was likely to happen anyway.

2 articles (ok, 1 is an opinion piece) on how brand-safety tech that prevents advertisers from placing ads on certain pages which may have undesirable/sensitive content is actually resulting in cutting funds to legitimate media outlets.

Ben Parker for The New Humanitarian, based on research by Krzysztof Franaszek whose findings indicated that “mainstream brands don’t just avoid mention of violence, misery, and pornography in ad placement, but also of racial groups, religions, and sexual orientations.”

Augustine Fou, for Forbes, makes the case that brand-safety tech inadvertently funds websites carrying misinformation and disinformation in some cases, and that if a brand believes in retargeting then the publisher shouldn’t necessarily matter (I am not sure these are necessarily mutually exclusive).

Taboola (remember them from Edition 28) is merging with ION Acquisition Corp in a deal that values it at $2.6 billion.

Facebook’s ‘not Facebook’s, just Oversight Board’ oversight board published its decisions in 5 cases. It overturned 4 of them. The ruling on the case from India (2020-007-FB-FBR), which it picked up after the initial batch of 6 cases, is due in a few days.

It also announced its next set of cases as I was writing this edition. This time there are just 2. One, as we know, pertains to Donald Trump’s deplatforming. The second is a case from the Netherlands, where Facebook had removed content under its Hate Speech Policy.

Twitter announced Birdwatch - which will let users add contextual notes. But these notes won’t affect Twitter’s recommendations in any way. For now, this pilot will be running on a separate site (not available in India). There are also concerns about Brigading and user-safety as Ben Collins and Brandy Zadrozny call out.

Twitter also announced that academic researchers could get full access to its APIs.

YouTube claims to have taken down over 500,000 videos for COVID-19 misinformation since Feb 2020. The post also noted that these policies had been expanded in October to cover COVID-19 vaccines, but there were no numbers on takedowns in this (sub?)category.

In October, we expanded our COVID-19 medical misinformation policy on YouTube to remove content about vaccines that contradicts consensus from health authorities, such as the Centers for Disease Control or the WHO

A report by Jeremy B. Merrill for The Markup states that Google and Facebook ran ads for far-right militia sites, while Amazon listed such merchandise despite a ban.

Everything is a Content Moderation Problem

No doubt you’ve been following the GameStop and r/WallStreetBets shenanigans already. In the off-chance you’re not, Kyle Orland has written the complete moron’s guide to it. I didn’t name it! But I did have to read it.

Now, this whole GameStop thing is supposed to be a David (retail investors) v/s Goliath (Wall Street hedge funds) story, powered by a ‘Robinhood’ no less (an app that lets people trade for free). I won’t claim any expertise on short-selling, day-trading, etc. But it is fascinating how this has partly become a content moderation issue.

I borrowed that framing from this article by Issie Lapowsky, who argued that it was a content moderation problem for Reddit. And she ends with the clincher that the professional worrier in me had been thinking about from the moment I heard of it:

But one thing that seems obvious from the last decade or more on the internet is that the same tools that can be used to build mass movements — be they political or financial — can be used by bad actors to manipulate the masses later on. If and when that happens, Reddit will have few excuses for not being prepared.

If you want to get hooked on a stream of smart tech policy folks talking about GameStop and Robinhood, check out this custom search based on one of my Twitter lists (feel free to play around with the filters). One of my favourites so far:

Robinhood playing the same Silicon Valley "free" trick. Saying it helps people by letting them buy and sell for free, when in fact it sells peoples' order flow. https://t.co/29UKDe51yc

Robinhood CEO Vlad Tenev defends the company's decision to limit trading on some symbols following the Gamestop stock chaos, saying they must "prudently manage the risk and the deposit requirements." "It's about us complying with the financial and clearing house deposits..." https://t.co/CaMJRXS0fo - Cuomo Prime Time @CuomoPrimeTime

Douglass Mackey was arrested on ‘federal charges of election interference stemming from allegations of a voter disinformation campaign during the 2016 election’ [Brandy Zadrozny - NBC News]. This may just be the first time that the U.S. has arrested anyone for disinformation, no? I didn’t come across any instances when I was tracking state responses to information disorder.

Jacob Mchangama, for Lawfare, contrasts government-mandated content moderation timelines with the time taken by various domestic legal authorities to decide. I am going to include two tweets by Daphne Keller here too.

How long should platforms spend deciding if users' online expression is illegal? Lawmakers keep coming up with answers like "24 hours" or "7 days." Meanwhile, courts spend hundreds of days on the same questions. Here is some great data from @JMchangama lawfareblog.com/rushing-judgme….

The spectrum looks something like this. The % of "easy" claims will vary a lot by platform, legal issue, national law, etc. But for claims other than (c) and CSAM I'd very roughly posit 30% easy or easy-ish calls. I'd LOVE to see other people's informed guesses on that %age! 2/

Studies/Analyses

A report by The Wilson Center [Malign Creativity: How Gender, Sex, and Lies are Weaponized Against Women Online].

In an analysis of online conversations about 13 female politicians across six social media platforms, totaling over 336,000 pieces of abusive content shared by over 190,000 users over a two-month period, the report defines, quantifies, and evaluates the use of online gendered and sexualized disinformation campaigns against women in politics and beyond

Related: Alexandra Pavliuc, one of the report’s co-authors, wrote in The Conversation that 78% of this was directed at Kamala Harris. “That equates to four abusive posts a minute over the course of our two-month study period”

A study by Nadia M. Brashier, Gordon Pennycook, Adam J. Berinsky, and David G. Rand [Timing matters when correcting fake news] found that debunking was more effective than labeling and prebunking.

Providing fact-checks after headlines (debunking) improved subsequent truth discernment more than providing the same information during (labeling) or before (prebunking) exposure.

To explain this:

These results provide insight into the continued influence effect. If misinformation persists because people refuse to “update” beliefs initially (16), prebunking should outperform debunking; readers know from the outset that news is false, so no updating is needed. We found the opposite pattern, which instead supports the concurrent storage hypothesis that people retain both misinformation and its correction (17); but over time, the correction fades from memory (e.g., ref. 18). Thus, the key challenge is making corrections memorable. Debunking was more effective than labeling, emphasizing the power of feedback in boosting memory.

Sonal Gupta for The Quint [How Queerphobia & Misinformation Stifle LGBTQ+ Safe Spaces Online]

Archit Lohani undertakes a comparative analysis of some global efforts to deal with hate speech and disinformation.

Adrienne Goldstein [Social Media Engagement with Deceptive Sites Reached Record Highs in 2020] found that

“On Twitter, shares by verified accounts of content from deceptive sites reached an all-time high in the fourth quarter of 2020.”

“On Facebook, there was a decline in interactions with all types of sites—including reputable and deceptive sites—in the fourth quarter of 2020, but interactions with deceptive sites were higher than in previous years, and more than two times higher than in the run-up to the 2016 election.”