Of (Un)equal Democratisation, My Fake is better than your fake, Prebunk

MisDisMal-Information Edition 24

What is this? This newsletter aims to track information disorder largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive, etc. who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition 24 of MisDisMal-Information

Note: MisDisMal-Information will be on a break until 20th November.

Social Media democratised the information ecosystem but some are still more equal than others

We’ve heard this often:

Social Media has democratised content creation/sharing

It has disintermediated the traditional intermediary in the information ecosystem, namely the ‘mainstream’ media (or the ‘traditional’ media - whatever moniker you choose to use)

This is true, partially. Yes, anyone “can” create content - and we have seen ample instances of events/news that may not have been picked up by the ‘traditional’ media unless they were already amplified on ‘social media’. Depending on the particular instance and our own political beliefs, we tend to view this as either a good or a bad thing, shifting from event to event. We should recognise that this is, ultimately, ambivalent.

I want to highlight 2 particular incidents from last week, both featuring the parliament proceedings in neighbouring Pakistan.

1) When MPs were shouting ‘Voting, Voting’, we heard ‘Modi, Modi’ (Insert joke about votes going to a particular party irrespective of which button is pressed). See this round-up by Newslaundry.

2) One particular MP seems to have claimed that ‘Pulwama’ was a success for the current establishment. He has since ‘clarified/back-tracked’, depending on which publication you read.

In both cases, we saw multiple media outlets rushing to cover the story without, seemingly, asking basic questions such as ‘why?’.

Despite whatever challenges their business models are facing, these outlets still retain an ability to influence conversations in the public sphere, in other words, “setting the agenda”. And at the risk of sounding like a broken record, in our bid to hold platforms accountable - we cannot overlook the need for systemic changes. (Here’s a thought experiment: If we were to shut down all social networks with more than, say, 5 million users, would you expect the tone of TV news coverage to shift significantly?)

But then, this is hardly a monolith and now has many more participants than just the ‘old school’ media houses. Which is why, the recent announcements about the formation of:

Indian Digital Media Association (IDMA) - “the country’s largest conglomeration of digital media platforms and one that puts the nation’s interest above everything else. IDMA is a pro-nation, Indian-owned, Indian-edited and Indian-controlled digital news association.” Including the likes of OpIndia, Republic, NewsX, Goa Chronicle, OTV, Sunday Guardian, InKhabar.

Digipub News India Foundation to “underline the fact that pursuits and interests of legacy media may not always be the same as that of digital media – especially in regards to regulation, business models, technology and structures”. Including the likes of Alt News, Article 14, Boomlive, Cobrapost, HW News, Newsclick, Newslaundry, Scroll, News Minute, Quint, and Wire.

are potentially significant, in that they now seem to formalise the 3 distinct groups (imperfect labels for now) in the digital news space:

The old-school kind

The right-leaning kind

The ‘liberal-ish’ kind

And that the current information ecosystem hasn’t completely disintermediated anyone, so much as shifted who these information intermediaries are. Note: I am referring to people/entities that create/share content, not the platforms, when I speak of intermediaries here.

The ‘traditional/mainstream’ media outlets are still information intermediaries, they just have a lot more competition than they did before - what we call ‘influencers’ now. And this does shift the incentive structure, something that I won’t quite be able to explore in this edition.

My Fake is better than your fake

I am sure you’ve seen some version of this argument.

“So what if it is fake? x.y.z is true”

First, let’s head over to Israel

Lawmaker Osnat Mark of Prime Minister Benjamin Netanyahu’s party cited on Tuesday a fake Facebook post in which a prominent anti-corruption movement called on anti-government protests to equip themselves with pepper spray and stun guns against the police at demonstrations.

The post had originally been cited by the police, but major media outlets reported it was false. The police later admitted that they had erred. At first, Mark said that “it doesn’t matter if it’s false.” She later conceded that it was and retracted her statement, without offering an apology.

Now, let’s talk about Sonu Sood,

From a News18 post on Oct 26th:

On Sunday, Sonu responded to a Twitter user’s cry for help as their son had to undergo an open heart surgery. Netizens raised suspicion as Sonu’s Twitter handle was tagged in the original post that was made out.

Earlier in the same post:

However, a recent social media post by Sonu led many to believe that his charity work during the pandemic is nothing more than a PR gig of his team. After this recent episode came to light, Sonu was dubbed by many a ‘scam artist’ too.

This is the post in question:

And Sonu Sood did respond to one of the tweets claiming that it was all a PR ruse:

P.S. The account was created on Oct 18th, based on the information I pulled from Twitter’s API.

Going into a purely hypothetical situation now. Let’s assume that this, and some other instances on Twitter were indeed ‘managed’ to highlight them (Let me reiterate, I am not suggesting that this is actually what happened, I have no evidence to make any such claim).

Does that change how we view these situations?

Should it? - as long as people are being helped in the bargain?

How does it affect the information ecosystem? Is it pollution in a sense? (I am not necessarily implying a negative connotation here)

And, the difficult question: if we’re ok with this (since we believe people are being helped in the process) - is there a way to prevent justification-creep? (i.e. others justifying using similar forms of ‘management’ but without the accompanying philanthropy or worse - outright adverse outcomes)

Pre-bunking

Over the last few weeks, there’s a good chance you’ve come across the term ‘Pre-bunking’. And if you hadn’t, I am almost certain you did after Twitter announced it would be using ‘pre-bunks’ last Monday.

Pre-bunking is essentially:

placing messages at the top of users’ feeds to pre-emptively debunk false information

A broad measure, being applied in the narrow (relatively, if you consider that there is a whole rest of the world out there too) context of the U.S. Elections. Facebook is doing something similar, though it hasn’t called them pre-bunks.

For example, when polls close, we will run a notification at the top of Facebook and Instagram and apply labels to candidates’ posts directing people to the Voting Information Center for more information about the vote-counting process. But, if a candidate or party declares premature victory before a race is called by major media outlets, we will add more specific information in the notifications that counting is still in progress and no winner has been determined.

This is certainly interesting, in that it could prime someone about the broader context. It may not, however, work against localised claims such as x.y.z is happening here, OR, a.b.c has done this, etc.

There has already been some study in this area:

The thread by Emily Vraga (paper wasn’t open access) asserts that:

Logic-based corrections can work when they come before the post containing misleading information.

Fact-based correction works only after the misleading post.

So, will pre-bunks work in this election context?

I’d like to say, time will tell - but I don’t think we’ll know for sure considering the number of different moving parts there are.

This section is not about the Section 230 hearing

Some of the most insightful posts/comments were not about the specific hearing itself. (If you want to, you can spend 3+ hours watching the whole thing)

The one post that you should read is by Zeynep Tufekci. It isn’t about this hearing per se, but about such hearings in general.

We should stop asking questions of these men and start giving them answers. The only way to do this is to make these questions into what they actually are: political questions. That’s where we have to debate everything from the dominance of few social media companies over the public sphere to the problem of regulating attention in an age of information glut.

…

There is no avoiding this dilemma. I don’t want to hear another apology, another ‘we will do better’ promise from any CEO. The real problem is that these men testifying today have emerged as unelected, unaccountable referees of our public sphere. Until we address that, until we take back our power as the public, we will be left with more rounds from the World Wrestling Federation.

Daphne Keller’s thread on ‘Regulators’ hearing appeals on content moderation decisions. I’ve only embedded the last tweet

One of the notable parts of the hearing was Ted Cruz going after Jack Dorsey following Twitter’s decision to block the NY Post URLs + freeze their account until they deleted the tweets that ran afoul of their policy. I was under the impression that the action was taken on the basis of the personal/sensitive information policy, but this hearing made it clear that it was blocked on the hacked materials policy. Something that Twitter has amended since then. So - Jack Dorsey was pushed about why the NY Post’s account continues to be suspended. He didn’t have a better response than this: since it was a prior enforcement action, all they need to do is delete that tweet. They can then re-post the same content again - which would not be a violation of the current (post amendment) policy. And then, this happened:

I guess Will Oremus was right when he wrote:

“For YouTube, Facebook and the rest, if a decision becomes too controversial, change it”

The questions did make one thing clear, for example (heavily paraphrased): “If you act against Donald Trump’s posts then why don’t you act as forcefully against other world leaders”. This will get harder for platforms moving forward. And the more they act - the more we can expect vociferous calls from politicians, states and society for them to up the ante. Something Rohan Seth and I had called out in a research document back in June.

Wicked Problems

Shape Shifting

Back in edition 16, we looked at how disinformation actors shape-shift and evolve. More evidence of that continues to come our way.

Cade Metz in NY Times about a disinformation campaign that is now using good old-fashioned text messaging, since social media platforms do take action every now and then (not always, as we all know). At this point, there seems to be no easy way to get a sense of the scale of this. One data point that the article does mention: 2.6 billion ‘political’ text messages.

The Independent on the anti-vax movement’s ‘rebranding’ in the COVID-19 era:

Historically, the anti-vaccine community has been known for its concerns around vaccine safety and the debunked theory that vaccines cause autism. Broniatowski and researchers found, though, that civil liberties have emerged as a common narrative among vaccine refusal pages on Facebook, including those who also supported alternative medicine and conspiracy theories about the pharmaceutical industry and billionaire philanthropist Bill Gates.

There are differences between the last Disinformation World Cup (2016) and this one (2020).

“One thing that gets lost is disinformation and online media manipulation in 2020 does not look the way it looked in 2008 and even 2016,” said Zarine Kharazian, an assistant editor at the Atlantic Council’s Digital Forensics Lab. “And what we’ve actually seen is detection has gotten a lot better.”

Is this thing still (QAn)On?

Big Tech’s enforcement actions against QAnon continue

Google’s shopping results now don’t show any results for QAnon searches. *I checked a few more variants such as ‘queue anon’, ‘cue anon’, ‘kew anon’ - none of which seemed to return any results. ‘Que Anon’ did.*

Kim Lyons, in The Verge - Patreon will now remove accounts that promote QAnon content. As the tentacles of action against conspiracy theories spread (or dominoes keep falling, as Evelyn Douek refers to it) - any platform dealing with user generated content will need to figure out how to deal with them - especially if they take action against one.

Now combine expanding enforcement actions and shape-shifting. I don’t know where this ends. But here’s a glimpse of how it is going (insert how it started, how it is going meme) - Rachel Greenspan writes about Facebook choosing to limit hashtags such as ‘Save our Children’ which QAnon co-opted. To set some context, there has been detailed reporting by reporters like Brandy Zadrozny and Ben Collins documenting how this hashtag was being used to mobilise people for protests in many cities.

YouTube, meanwhile, is being sued by a group of channel owners who were de-platformed after YouTube updated its policies “to prohibit content that targets an individual or group with conspiracy theories that have been used to justify real-world violence” - and followed through with some enforcement.

Meanwhile in India

LiveLaw reports that a PIL in the Supreme Court wants the Union government to enable criminal prosecution of “persons involved in spreading hate and fake news through social media.” It also asks for separate laws for regulating social media platforms and essentially stripping away intermediary protections. And a mechanism for the “automatic removal of hate speeches and fake news within a short time frame”, as well as having an ‘expert investigating officer’ for each case that is ultimately registered using these laws. The PIL was in response to content posted by @ArminNavabi which was “against Hindu goddess and using derogatory terms.”

And, in other court-related developments, former RSS ideologue K N Govindacharya told the Delhi HC that mechanisms put in place by Google and Twitter were inadequate.

The submission by Govindacharya has been made in his rejoinder to the replies of Google and Twitter who have opposed his plea seeking directions to the Centre and social media platforms, including Facebook, to ensure removal of fake news and hate speech circulated on online media as well as disclosure of their designated officers in India. Twitter and Google had contended that they have no physical presence in the country, but have appointed designated officers as mandated under the law to liaise with the government and investigating agencies.

A survey by LocalCircles indicates that a little over 60% of those who responded would be in no hurry to get a COVID-19 vaccine should it be available in 2021.

Sixty one per cent of 8,312 respondents said they are “skeptical about the Covid-19 vaccine and will not rush to take it in 2021 even if it is available”. Only 12% respondents said to “get vaccinated and go back to living pre-Covid lifestyle”, while 25% said they will “get vaccinated but still won’t go back to pre-Covid lifestyle”, and 10% said they “won’t take it at all in 2021”.

Related, a blog on TOI tries to address the “What’s the harm?” question surrounding natural remedies - or what the post refers to as pseudoscience.

Now that you’ve heard me bemoan the general lack of questioning, I think it is only fair that we talk about the one question that does get asked, especially in context of herbal supplements etc: “What’s the harm?”. I do hope that everyone who ask this, spare a moment and follow through. Because if you do, I bet you’ll find that more often than not, there IS harm! In the last few months, there have been regular news reports of health complications from taking too much of vitamin or herbal supplements.

A term frequently tossed around on Ayurveda products and herbal supplements is “natural”. Just because something is natural does not mean it’s good for you. Arsenic is natural, as is death.

Ananya Bharadwaj, for ThePrint, with a round-up of journalists across 18 states and UTs facing police action “even for tweets”. I counted 53 people. And Dipanjan Sinha writes in Newslaundry about an increase in attacks on journalists in Tripura.

In Kolkata, the police initiated legal action against a post claiming that a 48-hour lockdown is impending. Obviously, this post supposedly went ‘viral’.

Some general Hall of Shame stuff:

OpIndia happened to interview the Union Information and Broadcasting Minister, Prakash Javadekar (many thoughts on this and what it represents, but probably outside the scope of this edition). Obviously, among other things, they covered ‘fake news’.

On the matter of prevalence and furtherance of fake news by not just self-proclaimed fact-checkers and established media organisations, Prakash Javadekar said the debunking of fake news is being robustly done by the fact-checking arm of the Press Information Bureau(PIB).

He also added that media organisations, both the local as well as foreign organisations, are officially communicated through PIB if they are found peddling distorted interpretation of news.

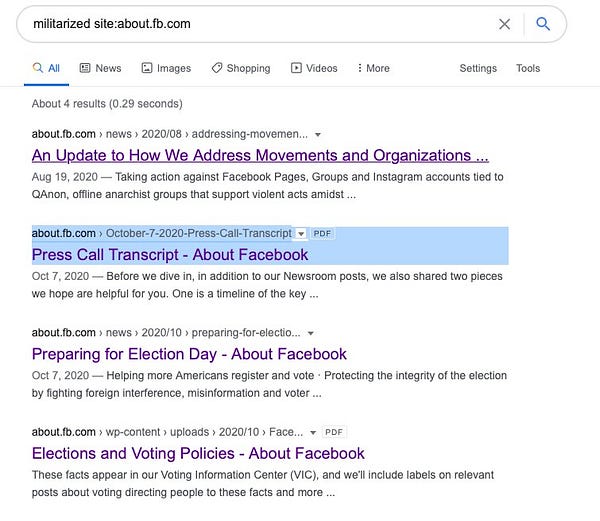

Shweta Desai, this time, goes into the ‘world that killed the Tanishq Ad’. Going through this post once again brought forth a question that has been on my mind a lot lately - what parameters does Facebook look at to determine the militarised aspect of a ‘militarized social movement’ - which can be considered grounds for policy enforcement of some sort.

Around the world

Follow-up from Edition 21 - Nicaragua has passed a law criminalising “what government considers fake news”. The 4-year prison term still applies.

Reuters reports that Nigeria is considering regulation of Social Media “just a week after protesters spread images and videos of a deadly shooting using Twitter, Instagram and Facebook.”

Martin Chulov, in The Guardian about the disinformation campaigns against the White Helmets in Syria and their impact on one of its co-founders.

YouTube apparently has more ads than ad slots in the run-up to the U.S. elections. The NY Times reports that on Election Day, the Trump campaign will feature prominently on its homepage thanks to… ads…

It is the conclusion of a yearlong tug of war between the two presidential campaigns for the ad space on YouTube’s home page, the front door to the internet’s second-most visited website. The Biden campaign secured the so-called masthead on the days after the presidential debates and the Republican convention. But it was the Trump campaign that landed the coveted ad property for the most critical days of the election cycle, starting Sunday and going through Election Day. How Google allocates the home page on important election dates has become a source of tension between the company and the Democratic National Committee.

Wikipedia is preparing for Election Day information disorder.

Myanmar elections are happening in early November too. The Economist reports (potential paywall) that Facebook, which (as per the article) is the primary source of news for 2 out of 5 users, once again seems to be struggling:

As the country gears up for an election on November 8th, independent social-media monitors have recorded growing volumes of hate speech and disinformation—such as claims that Aung San Suu Kyi, Myanmar’s de facto leader, has died of covid. On October 8th Facebook announced that it had removed a popular network of accounts it says were run by the army, which promoted the view that the Rohingya do not belong in Myanmar.

But supporters of the National League for Democracy, the party of Ms Suu Kyi, are also targeting Muslims and pushing falsehoods in an effort to convince ethnic minorities to vote for their party, according to Myat Thu of Myanmar Tech Accountability, a monitoring firm. The targets of bigotry now encompass anyone who is not “pure Bamar”, the ethnic majority, says another analyst who monitors Burmese social media, and asked not to be named.

Staying with Myanmar, as part of its Coordinated Inauthentic Behaviour report on October 27th, Facebook did remove “9 Facebook accounts, 8 Pages, 2 Groups and 2 Instagram accounts” which “originated in Myanmar and focused on domestic audiences.”

This network posted primarily in Burmese about current events in Rakhine state in Myanmar, including posts in support of the Arakan Army and criticism of Tatmadaw, Myanmar’s Armed forces. This activity did not appear to be directly focused on the November elections in Myanmar.

The previous Economist article does point out that Facebook’s actions in Myanmar are limited to Burmese and do not cover the “country’s ethnic-minority languages, spoken by a third of the population”.

David Cloud and Shashank Bengali write about Facebook’s actions in Vietnam, where it is playing a part in curbing dissent.

Studies/Analyses

A study by Richard Rogers asserts that the scale of Facebook’s “Fake News” problem depends on how narrowly or broadly the term is interpreted.

If one applies a stricter definition of ‘fake news’ such as only imposter news and conspiracy sites (thereby removing hyperpartisan sites as in Silverman’s definition), mainstream sources outperform ‘fake’ ones by a much greater proportion.

For context

The findings are made on the basis of Facebook user engagement of the top 200 stories returned for queries for candidates and social issues. Based on existing labelling sites, the stories and by extension the sources are classified along a spectrum from more to less problematic and partisan.

A paper by the team at Tattle Civic Technologies based on “factcheck-worthy” posts in Hindi. An encouraging insight - that 62% of posts contained a verifiable claim. They describe check-worthy claims as “1. statistical/numerical claims. 2. Descriptions of real work events or places about noteworthy individuals. 3. Other factual claims such as cures and nutritional advice.”

Not a study per se, but based on CrowdTangle data, the Myanmar Tech Accountability Network (MMTAN) created a set of Election Dashboards to monitor election-related social media activity.

Politico worked with the Institute for Strategic Dialogue, a London-based thinktank that studies extremism online, to “analyze which online voices were loudest and which messaging was most widespread around the Black Lives Matter movement and the potential for voter fraud in November’s election.” In their analysis of more than 2 million Facebook, Instagram, Twitter, Reddit, and 4Chan posts, the researchers found that

a small number of conservative users routinely outpace their liberal rivals and traditional news outlets in driving the online conversation — amplifying their impact a little more than a week before Election Day. They contradict the prevailing political rhetoric from some Republican lawmakers that conservative voices are censored online — indicating that instead, right-leaning talking points continue to shape the worldviews of millions of U.S. voters.

A nine-month study by the progressive nonprofit Media Matters, using CrowdTangle data, found both that partisan content (left and right) did better than non-partisan content and that “right-leaning pages consistently earned more average weekly interactions than either left-leaning or ideologically nonaligned pages. […] Between January 1 and September 30, right-leaning Facebook pages tallied more than 6 billion interactions (reactions, comments, shares), or 43% of total interactions earned by pages posting about American political news, despite accounting for only 26% of posts.”