What is this? This newsletter aims to track information disorder largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive, etc. who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition 21 of MisDisMal-Information

Conspiracies, Rumour and Humour

Just a joke, or not just a joke? Framing counts

From 28th September - 2nd October, EU Disinfo Lab has been hosting a virtual conference on Disinformation (yes, yes, I know everyone is). Wednesday featured Emmanuel Choquette who spoke about humour, free speech and hate speech. It will eventually be posted online, but I want to focus on 3 key points he made. I’m listing them in the reverse order.

1) Humour, in the context of discourse, is not necessarily neutral. In fact, it shares similar characteristics (Slide included).

2) It does have mediated effects, as he demonstrated with an experiment that consisted of exposing people to pointed humour consisting of racial stereotypes.

3) He also referenced a study he carried out between 2006 and 2018, building on [trigger language warning] work by Lanita Jacobs-Huey, which indicated that certain ethnic groups were more likely to be targeted by humour/jokes than others.

So, no, this doesn’t mean we have to stop joking around (phew). But we do have to realise that in certain contexts it may not ‘just be’ joking around.

And in some cases, humour can also be used as a vehicle to counter disinformation. Taiwan’s Digital Minister, Audrey Tang, spoke about this in a conversation moderated by Joan Donovan. The underlying intention is to counter the rage and distrust that situations like pandemics create, summed up in the phrase ‘Humour over Rumour’ and the claim that ‘Humour has a greater R0’ value. Than what? That wasn’t very clear. Worth noting is that while these efforts seemingly worked well in the context of medical information, it remains to be seen how effective they can be at countering political disinformation. As I covered in edition 2 (What me-me worry), humour does work well for the propagation of political disinformation.

Tin-foil pyramids

I am going to try to get through this edition without mentioning QAnon (crap!). But that still leaves us plenty of things to talk about pertaining to conspiracy theories.

TikTok user @Tofology has come up with a really interesting inverted pyramid (tin-foil hat?) to classify conspiracy theories, starting from those grounded in reality at the bottom and moving further away from reality as you climb higher (speculation, leaving reality, science denial and past the anti-semitic point of no return). Somewhere along the upward journey (into despair), it also includes harm. Now, for those of us in India, TikTok is still blocked, but fortunately she did post it on Twitter as well.

And another Twitter user was helpful enough to illustrate the pyramid as an image. The examples are mostly U.S. centric, but with a little modification, it can be made more location neutral. I have to admit, I am confused about the ‘harm’ aspect though, because those near the bottom (based in reality) can fall at different places on this spectrum.

Catherine Stihler, the chief executive of Creative Commons, writes about defeating conspiracy theories through government transparency. Now, I don’t know if that will defeat them (and to be fair, the headline was probably chosen by the publication). The concluding sentences raise an extremely pertinent issue:

There is an urgent need to address humanity’s greatest global challenges through collaboration and accessing information.

It’s time to unlock knowledge for everyone, everywhere.

This creates a tension between the need to pay for quality information/knowledge (since it has high costs of production) and the need to ensure this knowledge is disseminated evenly. The pandemic has made it painfully evident that public knowledge (I realise the term is doing some heavy-lifting here), like public health, should not be exclusive.

Nathan Allebach has a long read (~60 minutes), Conspiracy Theories in America. Two sections in particular stood out to me:

Declining Institutions and Distrusting Experts

People need agreed-upon information for a democracy to function. When someone handpicks data they want to believe from counter authorities and demand others trust it, while simultaneously dismissing expert consensus on that very data, it’s a massive problem.

Experts and institutions should not be immune from criticism for cases of corruption, costly mistakes, and the like, but the public response should be accountability and solution-based, rather than based in paranoia and populism. Scientists, researchers, journalists, and experts generally haven’t been the most effective at communicating messages over the years.

If people distrust expert consensus on an issue, they’ll always find a source to justify their beliefs, which is how pseudoscience and conspiracy theories gain momentum, and if people feel lied to by institutions, it creates a vacuum for these exploitative forces.

From Extremely Online To Extremists

As expected, this is U.S. centric, but there are some aspects which apply across contexts:

The cross-pollination of views across different platforms.

“The culture wars around feminism, LGBTQ, and Islam in particular became proxies for reactionaries to rally around online.”

“Trolling online was once contained to a small community, but now opened to millions of people. Extremists learned that if they could make a splash, people would react, then journalists would be pressured to cover it, which would amplify the message and accelerate distrust in those media sources. They gamed the system.”

A matter of Frames

I’m going to use this to segue to my next point. In my mind, these points about the dual use of humour and the amplification of conspiracies relate to how things are ‘framed’. And this idea of framing, perhaps, applies just as much to what we would refer to as traditional or mainstream media. A few recent events got me thinking about this even more:

An English language national daily in India using a hate-inspired hashtag, which implies a conspiracy involving marriage, in its social media updates.

A legal reporting outfit using a different hate-inspired hashtag to report on court proceedings.

This editorial by the NY Times editorial board calling on social media platforms to have a clear, transparent, coordinated plan to deal with a scenario in which one candidate (we know which one) claims an illegitimate victory.

Now, it is good to push platforms to have a clear plan so that crucial information flows related to important events are not affected by arbitrary decision making. But, as these 2 tweets show - this conversation is not complete without involving the original intermediaries of information and their role in framing issues.

In an editorial for Telegraph, Sevanti Ninan writes about the business model underpinning news (television news in particular):

The first takeaway from this box-office logic is that self-regulation will not make a dent in the practices of tabloid news TV. Why would they want to self-regulate when they have to compete to sell viewers to advertisers? Serious fines that hurt their revenues are more likely to dim their enthusiasm for re-enacting an actress’s death in a bathtub, or reconstructing an actor’s suicide or conducting a media trial of his girlfriend and her family.

Wait, wait, I am not giving platforms a free-pass here. I am only making the point that in an information ecosystem where incentives are skewed, this isn’t a question of just getting platforms to ‘heel’.

And in the same vein, it’s not just the information intermediaries who are responsible.

Sumedha Raikar-Mhatre has a very interesting piece on crowd psychology in Mid-day:

It is interesting to note that since subtlety of words doesn't work with crowds, mass leaders feed them with black-and-white, often loud and figurative, rhetoric. Provocative imagery is the choice for all seasons. Rulers, past and present, manipulate the crowd's capacity to derive energy from images and symbols, like a roti, a pool of blood, slain soldiers, and underprivileged children.

But Prateek, won’t critical thinking and healthy skepticism solve everything?

Not quite. Danah Boyd has argued before that even that can be weaponised (sigh, what can’t?). Jack Falinski also includes this quote in a recent article.

How should we approach getting our information?

Assistant Professor Dustin Carnahan said he too believes healthy skepticism is a way to combat rogue information.

“I think especially when it comes to the source of information it’s always important to be skeptical,” Carnahan said. “I think there’s also the flip side, right? Being skeptical of everything is a problem because then we don’t have the resources — the cognitive resources — to think critically and skeptically about everything we read and see.”

Bot-Ching Democracy

I don’t know about you, but for all its flaws, I quite prefer living in a democratic society.

An article adapted from Sinan Aral’s book, The Hype Machine, asserts that ‘bots’ and people are in a ‘symbiotic relationship’ of sorts when it comes to the propagation of information disorder. Humans spread false information which they perceive as novel, and which also evokes surprise and disgust. The piece concludes:

To win the war against fake news, we can't rely only on defeating the bots. We have to win the hearts and minds of the humans too.

Marietje Schaake believes democracies need to take power back from the private governance of big-tech (I’m paraphrasing):

Every democratic country in the world faces the same challenge, but none can defuse it alone. We need a global democratic alliance to set norms, rules, and guidelines for technology companies and to agree on protocols for cross-border digital activities including election interference, cyberwar, and online trade. Citizens are better represented when a coalition of their governments—rather than a handful of corporate executives—define the terms of governance, and when checks, balances, and oversight mechanisms are in place.

This disparity between the public and private sectors is spiraling out of control. There’s an information gap, a talent gap, and a compute gap. Together, these add up to a power and accountability gap. An entire layer of control of our daily lives thus exists without democratic legitimacy and with little oversight.

The laissez-faire approach of democratic governments, and their reluctance to rein in private companies at home, also plays out on the international stage. While democratic governments have largely allowed companies to govern, authoritarian governments have taken to shaping norms through international fora. This unfortunate shift coincides with a trend of democratic decline worldwide, as large democracies like India, Turkey, and Brazil have become more authoritarian. Without deliberate and immediate efforts by democratic governments to win back agency, corporate and authoritarian governance models will erode democracy everywhere.

And the rather sobering observation that:

The reality, however, is that there are only two dominant systems of technology governance: the privatized one … and an authoritarian one.

She proposes a coalition that could:

adopt a shared definition of freedom of expression for social-media companies to follow.

limit the practice of microtargeting political ads on social media: it could, for example, forbid companies from allowing advertisers to tailor and target ads on the basis of someone’s religion, ethnicity, sexual orientation, or collected personal data.

adopt standards and methods of oversight for the digital operations of elections and campaigns.

Joan Donovan proposes 6 strategies for a civil society response to misinformation and hate speech. Note how hate speech and misinformation are effectively bundled together. If you’ve read previous editions of this newsletter, then you know that I have spent a lot of time talking about hate as well.

1) Well functioning connected communities.

2) Truth sandwich: Rebuttals of memetic/pithy information disorder slogans should be in the form of fact, fallacy, fact.

3) Prebunking: Anticipating disinformation narratives and keeping responses ready.

4) Distributed Debunking: A coordinated response to proliferate the truth once a disinformation artefact has broken out.

5) Localise the context of a debunk as far as possible.

6) Humour over Rumour: <scroll up>

Meanwhile in India

Even by the hallowed standards of 2020, it has been ‘some week’ here in India. Unfortunately, the information disorder train keeps chugging along, and so we must too.

In Kolkata, one person was arrested for making claims about an impending lockdown in the North 24 Parganas district.

Mamata Banerjee was quoted as telling senior police officers:

"These days problems are created by spreading fake news through social networking platforms. I ask the SPs, ICs to play a proactive role. People are spreading lies to create trouble. Once you come to know about it block them instantly.”

"These days mental terrorism is much more devastating than physical terrorism.. We have to stop this at any cost,"

Always eager to engage in some good old fashioned moral policing, a Residents Welfare Association claimed it had been asked by the police to track bachelors and spinsters staying alone… because… drugs. It is with some sense of irony that I am linking to TimesNow as the source for this story.

Schools in Bhopal are staging a silent mass protest against ‘misinformation on social media about court orders on educational institutions and safety of schools and teachers’.

The Delhi HC ordered Twitter to “take down and/or block/suspend” the URLs of 16 tweets by a former India Today employee and provide ‘basic subscriber information’ to the media house ‘within 48 hours’.

After the passage of the Farm bills, BJP state units are taking to state-level efforts to counter ‘misinformation’ about the bills. West Bengal, Haryana, Rajasthan, Karnataka, Punjab.

Himanshi Dahiya, writing for TheQuint, goes beyond the W-T-F trio and looks into Telegram as a platform for fuelling Hate. Akriti Gaur, in Protego Press, makes a necessary point about the need for well thought out steps as we look to deal with hate speech on social media platforms in India.

However, it is crucial that any legislation of this nature is carefully thought out and balances public interest with constitutionally protected rights of free speech and privacy. This caveat arises from the fact that similar instances in the past have spurred the Indian Government to propose hasty and ill-thought regulations.

And since we’re on the topic of platforms - especially messengers where conversations seem to be plastered on TV Screens and just about everywhere else - Sushovan Sircar on what the leaks mean.

In a sneak peek of what we can expect when a vaccine comes out, ThePrint’s video feature on the impact of ‘lies and half-truths’ on Punjab’s struggles with COVID-19 features people confidently saying that ‘they haven’t seen COVID’.

Googly Eyes at self-reliance

I’m not sure what’s up with Google, but they decided to clarify a policy stating that digital goods will be liable for the 30% app-store tax from next year. Manish Singh writes for TechCrunch that Indian startups are looking at an alternative to Google’s monopoly. There is talk of [paraphrased] ‘foreign companies’ controlling the destinies of Indian apps. Some have called for an app store for India.

We’ve been here before, sort of. Airtel, Vodafone and many others tried their hand at Indian app stores in the early 2010s. They fizzled out over time, but this is 2020. 🤷♂️

This is very much an ongoing story and we’ll have to keep an eye on it in the weeks/months to come.

Given all the noise about anti-trust and the app store policy backlash against Apple and Google, it seems odd that Google would pick another battle at this point. There is of course a case to argue about whether it is another battle or not, but at the very least, it is a new front in India. It isn’t like India has recently demonstrated both an ability and willingness to block services to make a point - oh wait! And it’s also not like the rhetoric of self-reliance/cyber-sovereignty finds purchase both amongst the state and Indian entrepreneurs - oh wait, again! Just a few days ago, the Economic Times ran an Op-ed by Vivek Wadhwa arguing for a forced sale of Facebook India.

Google’s lock-in is pretty powerful at this point. It is going to take more than nationalist rhetoric alone to break this. What kind of actions will people lobby for? That’s one thing that worries me.

I can make no arguments against the idea of an alternative app-store; some already exist. But depending on how successful it is, Aggregation Theory would still apply. And there are no guarantees that this new mythical entity will not act in a self-serving manner. I guess what matters is who this ‘self’ that gets served is.

This Op-ed in TOI argues:

Moreover, if data is the new oil, then Indian citizens should have first right over it and their right to privacy should be protected. Thus, their data cannot be the sovereign property of the Indian state or Indian companies either.

I am docking it 5 points for still saying ‘data is the new oil’.

Around the world in information operations

Twitter took down, wait for it, 130 accounts that originated in Iran which ‘were attempting to disrupt public conversation around the first Presidential Debate’.

DFRLab launched a tool - Foreign Interference Attribution Tracker, an interactive database of alleged influence operations - relevant to the 2020 U.S. elections. Hopefully it will expand beyond that scope, because it is currently a challenge to track these operations across multiple information sources. Oh, and don’t worry, the aRep Global operation that was disclosed by Facebook earlier this year finds mention. I’d include a screenshot, but they’ve used an ‘anti-national’ map.

Facebook disclosed the removal of 3 networks originating in Russia for engaging in Coordinated Inauthentic Behaviour (CIB).

214 Facebook users, 35 Pages, 18 Groups and 34 Instagram accounts. Focussed primarily on Syria and Ukraine. Also included Turkey, Japan, Armenia, Georgia, Belarus, and Moldova.

1 Page, 5 Facebook accounts, 1 Facebook Group and 3 Instagram accounts. Primarily focused on Turkey and Europe.

23 Facebook accounts, 6 Pages, and 8 Instagram accounts. Focused on global audiences and Belarus.

Graphika Labs published a report titled GRU and the Minions based on 1.

Most of the clusters in the takedown operated across multiple platforms.

discovered related accounts on Twitter, YouTube, Medium, Tumblr, Reddit, Telegram, Pinterest, Wordpress, Blogspot and a range of other blogging sites.

None of the clusters built a viral following. The largest group on Facebook, which posted in English on the Syrian conflict, had 6,500 members; the largest page, which posted in Russian about political and military news, had 3,100 followers.

Another Graphika Labs report (3rd in less than 10 days if anyone is keeping count) detailed Russian operations concentrated on alt-right platforms such as Gab and Parler. They called this one Step into My Parler. I would have called it Parler Tricks based on what I’ve read about the quality of the operation.

Graphika contends that it is possibly the other half of the operation which targeted left-leaning/left-wing networks. “Taken together, NAEBC and PeaceData echoed the IRA’s approach to targeting U.S. audiences in 2016-2017: they pushed users toward both ends of the political spectrum with divisive and hyper-partisan content.”

“NAEBC’s various assets attracted around 3,000 followers on Gab and 14,000 on Parler, but in each case they were following substantially more accounts than followed them in return: 11,000 on Gab, 22,000 on Parler.”

From the Reuters article about this operation. Hat-tip @JaneLytv

The website’s own name, however, is a pun on a Russian expletive meaning to deceive or “screw over.”

🤦♂️

In the New York Times, Maggie Astor writes that a video released by Project Veritas was part of a ‘coordinated campaign’:

“It’s a great example of what a coordinated disinformation campaign looks like: pre-seeding the ground and then simultaneously hitting from a bunch of different accounts at once,” Mr. Stamos said.

Many of the same accounts that had shared promotional tweets also shared the video as soon as it was released, moving it quickly into Twitter’s trending topics alongside The Times’s tax investigation.

Now, this is possibly unrelated to the coordinated campaign bit, but Tulsi Gabbard tweeted that the video was evidence to call for ‘banning ballot harvesting’. But that’s not why this is featured here. Twitter account @conspirator0 analysed the accounts that were retweeting this. Most were pro-Trump. Because it is Tulsi Gabbard, some were Indian. A few were prominent (see 3 and 11).

Last week, Ben Nimmo (another Graphika Labs entry) published a paper that sought to classify information operations by scale. The 6 point scale works like this:

Category 1: “exist on a single platform, and their messaging does not spread beyond the community at the insertion point”

Category 2: “either spread beyond the insertion point but stay on one platform, or feature insertion points on multiple platforms, but do not spread beyond them.”

Category 3: “insertion points and breakout moments on multiple platforms, but do not spread onto mainstream media.”

Category 4: “break out of the social media sphere entirely and are reported by the mainstream media, either as embedded posts or as reports”

Category 5: “beyond mainstream media reporting, if celebrities amplify their messages — especially if they explicitly endorse them.”

Category 6: “triggers a policy response or some other form of concrete action, or if it includes a call for violence.”
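To make the scale a little more concrete, here is a rough sketch of how the six categories quoted above could be turned into a decision rule. This is only my reading of the quoted definitions, not code from the paper: the `Operation` class, its field names and the `breakout_category` function are all hypothetical, and the real scale involves analyst judgement that a handful of booleans cannot capture.

```python
from dataclasses import dataclass


@dataclass
class Operation:
    """Hypothetical summary of an information operation (field names are assumptions)."""
    platforms: int                     # number of platforms with insertion points
    broke_out: bool                    # spread beyond the community at the insertion point
    mainstream_media: bool = False     # reported or embedded by mainstream media
    celebrity_amplified: bool = False  # amplified or endorsed by celebrities
    policy_or_violence: bool = False   # policy response, concrete action, or call for violence


def breakout_category(op: Operation) -> int:
    """Return a category from 1 to 6, checking the highest category first."""
    if op.policy_or_violence:
        return 6
    if op.celebrity_amplified:
        return 5
    if op.mainstream_media:
        return 4
    multi_platform = op.platforms > 1
    if multi_platform and op.broke_out:
        return 3
    if multi_platform or op.broke_out:
        return 2
    return 1


# Example: several platforms, some breakout, but no mainstream media pickup
example = Operation(platforms=4, broke_out=True)
category = breakout_category(example)
```

Checking the highest category first reflects the idea that an operation is placed by the furthest point its messaging reached, so a single-platform operation that triggers a policy response would still land in Category 6 under this sketch.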

Still Spinning

Bangladesh accused Myanmar of carrying out a disinformation campaign after the latter accused the former of supporting militant groups.

Nicaragua has proposed a law that could make ‘fake news’ on social media an offence carrying a 4 year prison sentence.

The Guardian has compiled and published information on the people who have so far been arrested under Hong Kong’s National Security Law.

Studies/Analysis

The Election Integrity Partnership analysed narratives around ‘mail dumping’ in the U.S. They identified 5 techniques:

Falsely assigning intent

Exaggerating impact

Falsely framing the date

Altering locale

Strategic amplification

As former practitioner and disinformation scholar Lawrence (Ladislav) Bittman explained, the best disinformation is often built around a true or plausible core. But, as we see in these cases, it is shaped and amplified in misleading ways to achieve political aims.

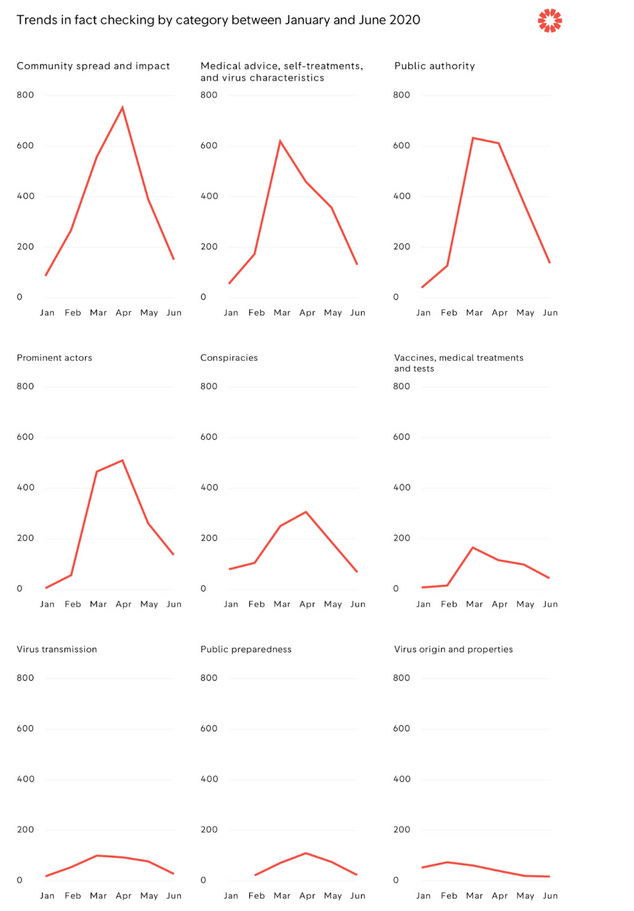

Bethan John, for FirstDraft, on what they learned after analysing 9,722 fact-checks. Even across categories, they tend to follow the overall trend, with minor variations on when the peak occurred.

Shorenstein Center’s Misinformation Review published research on the spread of political misinformation on subcultural platforms (Reddit and 4chan).

Surprisingly, it found that users on these platforms were better at avoiding sharing ‘low-quality information’. To clarify, from what I understood, low quality was defined as a function of the news source - not the content itself. So while the sources were mainstream news sources - I’m not sure that it ranked the actual articles themselves. Researchers found a low percentage of ‘pink slime’ sources (imposter news sources pushing mainly conservative viewpoints).

YouTube was the most shared site on 4chan (9 times higher than Twitter in second place), while on Reddit it was the most popular once auto-posting moderator bots which link to internal Reddit content were excluded.

Remember Zombie Information Disorder from Edition 3 (using Wayback Machine archives)? Well, here’s a study about it.

Understanding these manipulation tactics that use sources from web archives reveals something vexing about information practices during pandemics—the desire to access reliable information even after it has been moderated and fact-checked, for some individuals, will give health misinformation and conspiracy theories more traction because it has been labeled as specious content by platforms.

A comprehensive report on Countering Digital Disinformation while respecting freedom of expression.

I will wind-up this section with 2 publications by EU Disinfo Lab

And this one, which I thought was an interesting direction to take: How COVID-19 conspiracists and extremists are using crowd funding platforms.