Of So(S)cial Media, Kindness of the crowds, 100 ways to address criticism and Oops! We did it again

MisDisMal-Information Edition 38

What is this? MisDisMal-Information (Misinformation, Disinformation and Malinformation) aims to track information disorder and the information ecosystem largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive, etc., who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition 38 of MisDisMal-Information

Things in India haven't changed significantly since the last edition a little over two weeks ago. If anything, official case numbers are even higher now, and things will be this way for some time to come. I hope you, your families/friends are safe.

So(S)cial Media and Kindness of the crowds

In 37, we looked at some characteristics of social media feeds now inundated with SOS calls.

Without Context (or Context-less) : Disparate pieces of information need to be pieced together. But you don't know how one fits in with another.

Evolving : As more information comes through, individual pieces of content are consolidated into threads or lists, then shared documents with all combinations of nesting possible.

Flattened : Amid so many SOS calls tagging different people, queries that would have different levels of urgency (how do I get from A to B for work in a curfew v/s which hospital has beds or oxygen supply) get clubbed and consumed together.

Latency : How long has it been since this particular piece of information was put together? Is it still relevant? Is it still accurate?

Integrity : Just because it says 'verified' in some form, typically in caps, as a hashtag or both; or says that they heard it from a doctor - does that mean it is true?

Provenance : Where has a particular piece of content come from? Yes, you can see who posted it, but where did they get it from? How many people have seen it before you? How many have acted on it? What happened when they acted on it?

And the question of low-trust environments and their fragility.

The community (I'm using the term loosely) has also evolved its own set of norms. Around the time edition 37 went out, Varun Grover had posted a thread about clarity while asking for help (providing information - this has further evolved to using a fairly exhaustive form now), and best practices while offering help (verifying leads, etc.).

But these efforts have run into some problems:

Scammers and black operators are using this as an opportunity to profit off the vulnerable. This thread by Sugandh Rakha in late April explained how some of them worked. IndiaToday reported on a few of them too.

Disturbing reports have emerged of calls from individuals self-identifying as police officers, asking volunteers not to post SOS messages and unverified leads. And in at least one case in UP, a person posting an oxygen requirement was charged with spreading misleading information online. - (Nilesh Christopher - RestOfWorld)

Resource depletion: Something I missed pointing out last week. The more efficient these mechanisms get, the faster resources are allocated - and depleted. Until the bottleneck that is supply or availability of healthcare resources/infrastructure is addressed, this will be a huge challenge.

And then, there are instances of people just being obnoxious.

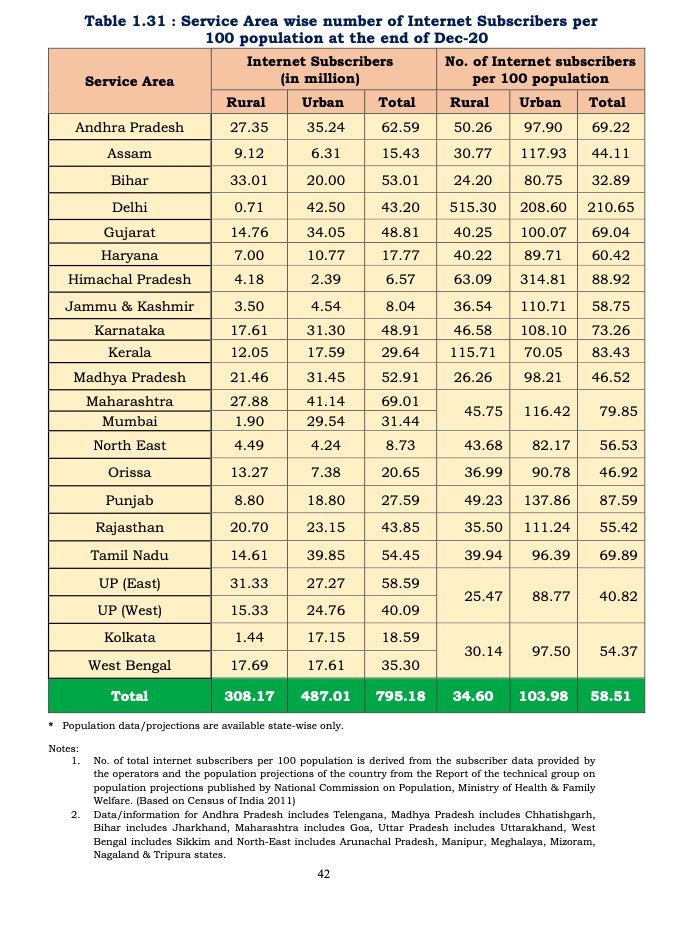

Another major shortcoming, of course, is inequity.

Here's a look at the number of internet subscribers for every 100 people from TRAI's performance indicators report - Page 42

Two stories bring this out.

In The epistemology of live blogging, Donald Matheson and Karin Wahl-Jorgensen highlighted 5 main characteristics of live news blogs. There seem to be some parallels.

(1) produce a fragmentary narrative that (2) reflects particular moments in time, (3) curate an array of textual objects from a range of information sources to produce ‘networked balance’, (4) gain coherence from an often informal authorial voice or voices and (5) generate claims to knowledge of events which are simultaneously dynamic and fragile.

I also want to course-correct on something I said in the last edition

These volunteer driven information flows, however, remain fragile. Ideally, you would want 'official' or 'authoritative' sources conveying this information, but these hierarchical models of communication just don't work in the instant information environment we have today. Even daily update frequencies on bed/ICU/oxygen availability may not be good enough.

When I wrote that line (highlighted), I had in mind situations like natural disasters. I don't think I engaged enough with the more fundamental question of should we be in this position at all?

And now, nearly three weeks later, should we still be here?

The future of internet speech

Given the circumstances, you'd think we wouldn't have the bandwidth for too many other things. Yet, we somehow managed to embroil ourselves in various controversies about not-so-trivial matters like the future of free speech on the internet.

100 ways to address criticism

On 24th April (it was a Saturday evening), Medianama reported that Twitter had complied with an order by the Union Government to withhold ~50 tweets (critical of how the government has handled the second wave) in India. Through the weekend and into the following week, Twitter would continue to get criticised for complying (look at the tweets section on this Techmeme page). Simultaneously, it also emerged that more platforms had received these or similar orders. On Sunday, Deeksha Bharadwaj (HindustanTimes) reported that 100 posts across Facebook, Twitter and Instagram were targeted. On Monday, a report by Pankaj Doval (TOI) included YouTube too. We know these companies got orders only because a spokesperson for the government seems to have named them. Neither of these platforms has confirmed whether they received orders, nor if/how they complied.

I wrote about what this means for the power dynamics between states, platforms and citizens/civil society for TheWire:

Expanding on legal scholar Jack Balkin’s model for speech regulation, there are ‘3C’s’ available (cooperation, cooption and confrontation) for companies in their interaction with state power. Apart from Twitter’s seemingly short-lived dalliance with confrontation in February 2021, technology platforms have mostly chosen the cooperation and cooption options in India (in contrast to their posturing in the west).

This is particularly evident in their reaction to the recent Intermediary Guidelines and Digital Media Ethics Code. We’ll ask for transparency, but what we’re likely to get is ‘transparency theatre’ – ranging from inscrutable reports, to a deluge of information which, as communications scholar Sun-ha Hong argues, ‘won’t save us’.

Reports allege that the most recent Twitter posts were flagged because they were misleading. But, at the time of writing, it isn’t clear exactly which law(s) were allegedly violated. We can demand that social media platforms are more transparent, but the current legal regime dealing with ‘blocking’ (Section 69A of the IT ACT) place no such obligations on the government.

Twitter should be criticised for complying, yet, I also think it deserves (some? very little?) credit for disclosing their actions through the Lumen Database - which is a voluntary mechanism. This is significantly better than the (absence of) responses we get from Facebook (including Instagram) and YouTube.

Oops, we did it again

Even as this was unfolding, we were hit with another free speech related controversy. Facebook had temporarily restricted posts with the hashtag 'ResignModi' from showing up in search results. You could still post using it, and the restriction would not have affected any posts in your newsfeed. They eventually claimed it was an error and lifted the restrictions. And that's likely all we're ever going to hear about it.

Given the political context (platforms had recently received takedown orders, and WSJ had reported on alleged closeness between the BJP and some members of Facebook's staff), it wasn't surprising that people suspected it was a deliberate act, perhaps taken at the behest of the Union government.

I don't think this was the case for 2 reasons.

Precedent: We have been here many times before. Hashtags like 'sikh', 'blacklivesmatters', 'endSARS', to name just a few, have run into various technical glitches. Occam's Razor implies that a simpler explanation (someone making a mistake, a system behaving unexpectedly) is more likely to be true. I included more details, along with the explanations Facebook offered in a post I wrote for Medianama.

Optics: If our mental model of how Facebook operates is of a company that weighs its actions (heavily, among many other things) based on potential PR fallout, then the disincentives to do this would be pretty strong. Unlike restricting individual posts, restricting hashtag searches is likely to impact more users and generally be more visible (it depends to some extent on posts/users affected).

The deeper issue here is that Facebook offers little explanation past its 'Oops!' and 'Look! We fixed it!' kind of responses. And that isn't acceptable anymore. As I argued in the Medianama piece:

Let’s accept that these were mistakes for now. What happens next? Silence, until the next time. Then, rinse and repeat. Technological systems are complex, and people make mistakes, let’s accept that too. Though when they can have political consequences (even without political intent), the responsibility cannot end with answers that offer no further understanding of what happened. Especially if it is a pattern that keeps repeating.

Returning to the ‘ResignModi’ case, there’s a significant chance that this too was the result of ‘community reporting’ just like the ‘sikh’ and ‘sikhism’ hashtags. But reading between the lines of Facebook’s response in those instances suggests that anywhere between one and an unknown number of reports were sufficient to trigger whatever combination of manual and/or automated processes that can lead to a hashtag being temporarily hidden. Note the number of unknowns there.

...

Facebook — this applies to other networks too, but Facebook is by far the largest in India — needs to put forward more meaningful explanations in such cases. Ones that amount to more than ‘Oops!’ or ‘Look! We fixed it!’. There are, after all, no secret blocking rules stopping it from explaining its own mistakes. These explanations don’t have to be immediate. Issues can be complex, requiring detailed analysis. Set a definite timeline, and deliver. No doubt, this already happens for internal purposes. And then, actually show progress. Reduce the trust deficit, don’t feed it.

Run out of Twitter

I do apologise for using the already-overused run out pun on this one. You probably know where this is going.

But, some context first.

On the back of distressing news of post-poll violence in West Bengal comes even more distressing news that the events are being distorted with a communal intent. (Violence, but not communal - Newslaundry)

As with any highly polarising news story, there will be a lot of information that triggers us coming our way. Already resource-crunched fact-checking organisations are overwhelmed too, as this quote in a story by Himadri Ghosh (TheWire) suggests:

Reporters from a top fact-checking website, who asked not to be named, told The Wire, “There is a flood of disinformation and fake news unleashed by BJP handles. We don’t have the resources to go through all of it because of its sheer scale.”

So, extra caution is warranted when engaging with this story. And we'll have to watch this closely over the coming days.

Ok, now back to an offshoot of this situation where we know what happened.

Twitter has permanently suspended Kangana Ranaut, citing its 'Hateful Conduct' policy (HindustanTimes). Nikhil Pahwa has a great thread on some of the policy implications of this.

It is going to be interesting to see:

If more platforms follow, assuming the nature of content doesn't change?

How much more pressure there will be on platforms to suspend other users (potentially even politicians) who engage in hateful rhetoric?

What, if any, changes this will introduce to the must-carry v/s must-remove debate in India? I covered this in edition 36.

In contrast, the Koo-founders of Koo seemed happy to welcome her to Koo. Weren't they apolitical/neutral?

Exhibit A: Koo app is apolitical platform, we have no political lineage, says co-founder

Exhibit B: Social coup: Twitterati deeply divided over Koo; co-founder says app is apolitical

Exhibit C: (scroll to the last question) How Koo became India’s Hindu nationalist–approved Twitter alternative

To be fair, they still could be, and could be using this purely as a growth opportunity. In which case, I have many thoughts. But that's not what this section is about.

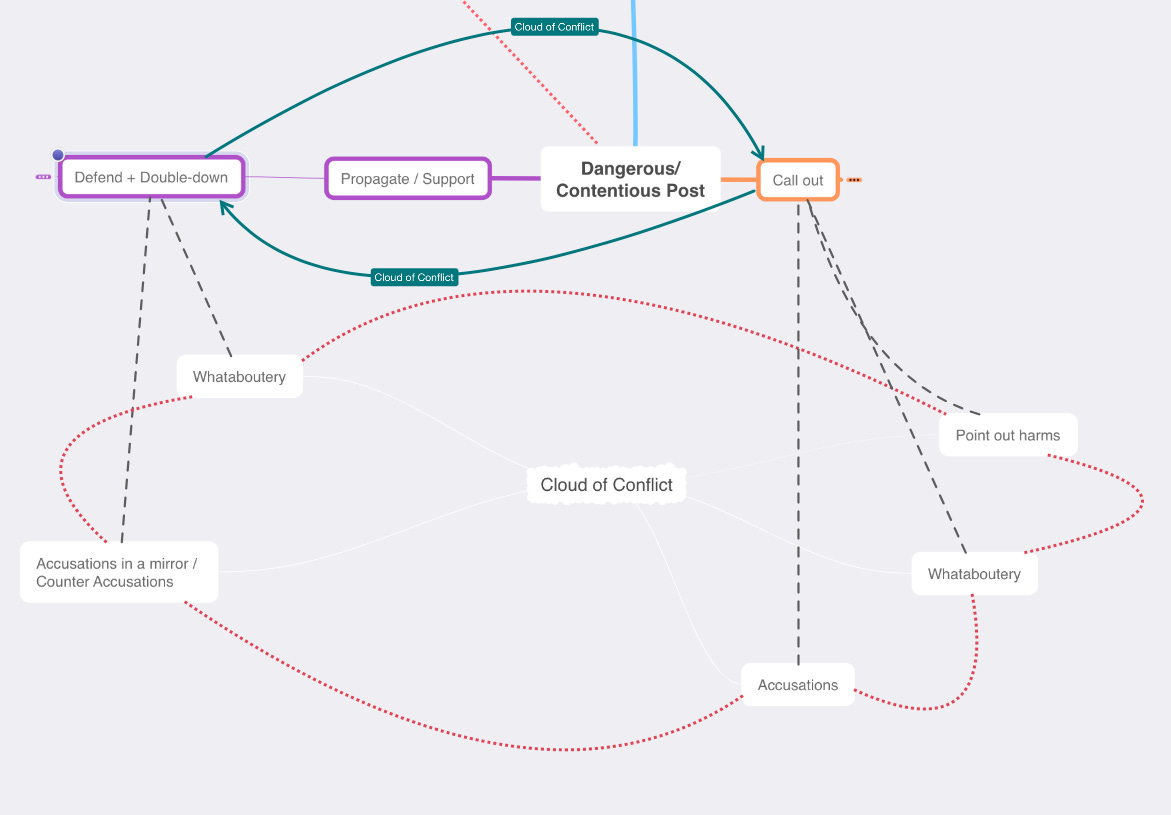

I also want to illustrate the pattern that plays out using the Anatomy of an Internet Conflict mind map I referenced in edition 26 and edition 16. Instead of throwing the whole graphic at you, I'll try to explain this using portions of it.

A few things first, in any such conflict:

Effects will be ambivalent.

An algorithmic reward will create parallel feedback loops, which may or may not have a tangible outcome.

The tension between taming the beast and feeding the monster.

Outcomes are what should matter to us.

Here's what happens. We have two opposing groups. Let's call them A and B. A member of group A posts something that can be dangerous/contentious/hateful (a lot more adjectives are possible, let's stay with these for now).

3 reactions are possible

Calling it out (mostly group B).

Supporting/Propagating (mostly group A).

Ignore (Everyone who doesn't do either of these. Could include A and B members who haven't got involved yet)

In reaction to Group B's 'calling out', Group A members will likely double down, creating a 'cloud of conflict' consisting of:

Pointing out harms (B)

Accusations (B)

Accusations in a mirror (A)

Whataboutery (A and B)

The result of B's calling out will be amplification. The same goes for A's defence and double-down reaction. Together, they create an 'algorithmic reward' on platforms.

What can the amplification result in?

B (orange)

create awareness

signal

recruit [inactive B members or outside] & create pressure [on A, platforms, law enforcement, private entities/persons]

All of them play off each other.

Pressure on platforms may have worked this time since there were many calls for suspending her account. It is always hard to know how much of a role this played, and we're typically left guessing.

Similarly, with A (purple)

create awareness

signal

recruit [inactive A members or outside] & counter-mobilise [claim victimisation, file away B methods for future use]

Again, they play off each other

What this does is create feedback loops that amplify each other.

This is already taking shape with the deplatforming.

In fact, even Twitter's act of deplatforming kicks off this whole cycle with that action at its centre. So instead of 'dangerous or contentious post', we could also label that box 'Anything'.

I realise I haven't been consistent with the sections - Meanwhile in India, Around the world, Big-Tech-and-Model-Watch and Studies/Analyses for some time. The truth is, there's an overwhelming amount happening each week on all these fronts, and I am trying to figure out how to include these sections without flooding the newsletter with links while also not missing out on big trends/themes—still a work-in-progress. I'll try a few different models over the next few editions.

For today, I'll just include an assortment of topics on which I've gathered some interesting links over the past few weeks.

The Sophie Zhang - Guardian Series

There's a good chance you've already read the one about Facebook not taking action against a network that seems to have included a BJP MP (Julia Carrie Wong, Hanna Ellis-Petersen - TheGuardian). I won't rehash the whole story here, but two things stood out to me.

Some of the networks in question were deemed to be below Facebook's self-defined threshold for Coordinated Inauthentic Behaviour (CIB). That's relevant because Facebook does monthly public disclosures about its actions against such networks. Yet, we don't know how it defines these thresholds. Look, I get they're not fixed because it is not like this activity follows a fixed threshold, but they certainly have some internal guidance. And something like this, politicians across party lines being propped up by inorganic activity, warrants some sort of public disclosure.

One of the networks in question shifted from boosting a 'Congress politician in Punjab' to supporting AAP. That's not surprising since there is an element of 'mercenariness' to this, but it also tells you how much we're being played.

The first article in the series included a graphic indicating how many days it took Facebook to act against CIB after a case was flagged. For India, that number was around 17 days, the 4th shortest duration. (Julia Carrie Wong - TheGuardian)

YouTube

Around a month ago, YouTube introduced a new statistic called 'violative view rate'. It also said this statistic is down 70% since 2017. But Becca Lewis (whose work you absolutely should follow) posted a Twitter thread about why YouTube just unveiling a shiny new statistic that we'll ‘ooh and aah’ over isn't much of a development by itself.

Tarleton Gillespie posted a few thoughts as well.

One thing that struck me was:

Two YouTubers are looking to contest London's mayoral elections (Joshua Zitser and Rachel E. Greenspan - Insider)

The 'xyz' industry

Kashmir Hill and Aaron Krolik on The Slander Industry (NYTimes)

Sara Fischer on The Professional Trolling Industry (Axios). Reminds me a bit of the BuzzfeedNews story on 'black PR' by Craig Silverman, Jane Lytvynenko and William Kung.

Regulation

Singapore invoked POFMA against opposition politicians' claims linking COVID-19 vaccination to a stroke (TheStar).

The ministry said earlier this week that it "instructed" the government office responsible for implementing the 2019 Protection from Online Falsehoods and Manipulation Act "to issue correction directions" to the Singapore People's Party (SPP) and leader Goh Meng Seng.

The order covered Goh's and the SPP's posts "implying that Covid-19 vaccination had caused or substantially contributed to" a case of stroke and the separate death of an 81-year-old.

EDRi on the European Parliament's approval of regulations to govern 'terrorist' content (TERREG) online.

The procedure for the second reading excluded elected representatives from the final decision over this human rights intrusive legislation. It deprived EU citizens from seeing if the Members of the European Parliament, the only democratically elected body of the EU would have accepted a 1-hour removal deadline for content, forcing platforms to use content filtering, and empowering state authorities to enable censorship.

For more context:

In this post, Anna Magzal explains why a final vote didn't happen.

(Tangentially related) Eliska Priskova (AccessNow) writes about how EU Member States are undermining the Digital Services Act.

Florida is planning legislation that could fine social media platforms for deplatforming politicians. Account suspensions will be limited to 14 days. (Cody Godwin - BBC)

P.S. You’ll notice that I haven’t included 2 Facebook oversight decisions in this edition. One that relates to India, and the other pertaining to Donald Trump’s suspension. There are 2 reasons for that. First - I haven’t been able to go through them in detail, yet. Second - I am unsure of how much space I want to devote to individual decisions.