Of Polarisation, propaganda, backfire and participatory disinformation

MisDisMal-Information Edition 39

What is this? MisDisMal-Information (Misinformation, Disinformation and Malinformation) aims to track information disorder and the information ecosystem largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive, etc., who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition 39 of MisDisMal-Information

Polarisation, propaganda, magic and backfire

There's a high probability that you've seen at least one of these 2 tables over the last few days.

The first one appeared in edition 126 of Anticipating the Unintended. The second one was posted by the BJP's official Twitter handle.

This isn't surprising. Well, it is surprising that the original touched enough nerves that the BJP felt like it had to respond in kind. What isn't surprising is that an attempt to set one kind of narrative received a response that set an opposing narrative - by talking past the original. Now, you can argue that the original table itself was an attempt to set counter-narratives - which were themselves a counter to narratives about the state of the COVID-19 response. And that's the point I am trying to make (I realise I am repeating myself from past editions). We're all locked in this dance (does that make us discourse dancers?)

There are many tangents we can go down from here - most involve clutching your forehead and then wringing it vigorously - how social media has supercharged the performative aspects of politics, how polarisation is tearing us apart, there's no escaping propaganda, attempts to fact-check will backfire, people don't care about the truth, etc.

In Boston Review, C. Thi Nguyen compares theories pitting polarisation and propaganda at the centre of democratic dysfunction (again, note that this is not specifically written for the Indian context). I am going to quote heavily in this section:

Systemic polarization, as it is usually told, is a basically symmetrical story. Polarization arises from a social dynamic that afflicts almost everybody. The social forces at play—social mobility, online media bubbles, algorithmic filtering—are pervasive, and their effect is nearly universal.

...

On the other hand, the propaganda story is usually told asymmetrically: one side is stuck in the propaganda machine, the other side fighting against it. It is certainly possible to tell the propaganda story about both sides, but symmetry isn’t baked into its core.

Look, I think many of us will opine that this probably isn't an either-or scenario, and both will overlap (with several other factors as well), but let's play along. And, if it isn't evident, we're going somewhere in between tangents 2 and 3 (how polarisation is tearing us apart, there's no escaping propaganda). Back to the point, he draws on 2 books:

Overdoing Democracy: Why We Must Put Politics in Its Place (2019) by Robert B. Talisse

Network Propaganda: Manipulation, Disinformation, and Radicalization in American Politics (2018) by Yochai Benkler, Robert Faris, and Hal Roberts

Overdoing Democracy leans heavily here on empirical research regarding group polarization, especially as it has been formulated by Sunstein. (Talisse renames the phenomenon “belief polarization,” but I will stick with the original and standard terminology.) Group polarization “besets individuals who talk only or mainly to others who share their fundamental commitments,” Talisse explains, and leads them to “embrace a more extreme version of their initial opinion.” Gathering in like-mindedness enclaves increases our moral and political certainty.

And in the other corner.

According to Benkler, Faris, and Roberts, a host of factors went into creating the political landscape around the election of Trump, but the dominant factor was propaganda. For these authors, propaganda means the intentional spread of false or misleading information for the sake of political power. And in their analysis, the most influential source of propaganda isn’t the fancy new stuff—bots or Russian troll farms—but the old familiar stuff: propaganda in mainstream news outlets.

A key element of this account is an effect the authors call the “propaganda feedback loop.” Inside the loop, media outlets stop trying to present truths and to fact-check their fellow outlets. Instead, these outlets are out to confirm their followers’ worldview. And the more time they spend in the loop, the more these followers get used to the experience of constant confirmation and grow intolerant of any challenges to their belief system. At the same time, the loop attracts political elites who are willing to align themselves with the loop’s prevailing belief system, in exchange for a share of those followers. The result is a “self-reinforcing feedback loop...

Now, you know I love a good self-reinforcing feedback loop, but let's set that aside for today.

There are 2 kinds of polarisation referenced:

Political polarization, he says, measures the distance between political opponents. The further apart the various elements—the party planks, the commitments of the member of the party—the greater the political polarization. Group polarization, on the other hand, is a process whereby like-minded enclaves increase the extremity of belief.

Ok, so 1 self-contained feedback loop (group) and one feedback loop across groups (political). Sorry, I couldn't resist that.

There's a lot of nuance in the original article, and I am doing it some disservice by simplifying the argument in the next few paragraphs.

Essentially, this is pitting 'both sides are acting irrationally' and 'one side is acting irrationally' arguments against each other.

Or, said another way, the first argument is that extreme ends of the spectrum (extreme not necessarily equivalent to extremist) are squeezing out the centre, and that this is a net-bad. We're going into 'culture wars' territory, but not quite there.

The opposing argument is that one side is behaving in a particular manner because it has no incentives to self-correct. Oh wait, we are pretty much in 'culture wars' territory.

But here's the interesting bit from the piece. At least for me, because I am somewhere in the middle here. I do see some basic behaviours/dynamics common to different sides of the political spectrum. But that's very different from saying that messages are the same, or that the consequences arising from those behaviours are the same. They are not. When one side is routinely using dangerous/fear speech and actual violence, over and above cognitive biases and logical fallacies, they will not be.

Imagine a population composed mostly of likeminded centrists, who all believe in the goods of moderation and civility. The group polarization effect could take hold of them, pushing their degree of confidence in their beliefs up beyond their evidence. They might become excessively confident in their moderate beliefs, unwilling to consider the truth of any extreme position. Such group polarization would produce the opposite of political polarization—but that is not always for the best.

... the term “group polarization” is misleading. The phrase conjures an image of a movement toward two (or more) poles of extreme belief, but group polarization can afflict centrists, too

Can there be an extreme centrist or a militant moderate? Nguyen seems to think so:

But group polarization can happen when members of any like-minded group increase their confidence in their beliefs, or acquire beliefs with more extreme content. In this latter sense, it is possible for there to be extreme centrists.

Nguyen concludes:

There is no particular reason to treat the symmetrical view as the right opening assumption, nor to place the burden of proof on those who seek more asymmetric explanations. The moderate call for peace and friendship does not magically escape from the charge of groupthink. Civility is not a default. And leaping to accept, with total confidence, a story of symmetrical irrationality, without sufficient evidence—especially when there are other competing accounts, themselves with significant evidential support—itself bears the mark of motivated reasoning.

For context, Nguyen also pleads guilty to being a 'lefty', so make of that what you will (I don't mean 'pleads guilty' in the sense that it is a crime, of course). But those lines are worth paying attention to, particularly when the status quo continues to heap misery on the disadvantaged. And I say this as a conflict-averse person who leans to the centre (wait, can you lean centre?)

Homework thought experiment: Is an 'extreme centrist' more likely to drift to the left or the right?

Ok, let's proceed to tangents 4 and 5 (attempts to fact-check will backfire, people don't care about the truth).

Brendan Nyhan, one of the authors of the article that brought us the term 'backfire effect', writes in a Colloquium Paper:

To the contrary, an emerging research consensus finds that corrective information is typically at least somewhat effective at increasing belief accuracy when received by respondents. However, the research that I review suggests that the accuracy-increasing effects of corrective information like fact checks often do not last or accumulate; instead, they frequently seem to decay or be overwhelmed by cues from elites and the media promoting more congenial but less accurate claims. As a result, misperceptions typically persist in public opinion for years after they have been debunked. Given these realities, the primary challenge for scientific communication is not to prevent backfire effects but instead, to understand how to target corrective information better and to make it more effective.

And, Tim Harford writes about what magic can teach us about misinformation [Financial Times - paywall].

Aside: He makes some other interesting references in the article (both of which will seem familiar):

Dead cat strategy

If a dinner party conversation turns awkward, simply toss a dead cat on to the table. People will be outraged but you will succeed in changing the subject.

Firehose of falsehood

barrage ordinary citizens with a stream of lies, inducing a state of learnt helplessness where people shrug and assume nothing is true. The lies don’t need to make sense. What matters is the volume — enough to overwhelm the capabilities of fact-checkers, enough to consume the oxygen of the news cycle.

Back to the point, he starts with 2 explanations for why people accept and spread misleading information. First, people cannot differentiate between facts and falsehoods. Second, people know, but they don't care. Note that this excludes bad-faith engagement, which is also a huge part of the problem.

He then references a Nature study by Gordon Pennycook, Ziv Epstein, Mohsen Mosleh and others which surfaces a contradiction. When asked which headlines were accurate, most people got it right. When asked which headlines they would share, the behaviour seemed to be a function of the political beliefs held, i.e. people shared what their beliefs aligned with - without considering accuracy (also, note, these people weren't specifically asked to determine accuracy). Why the contradiction?

Harford's answer is distraction (basically, a lack of attention), which is also what a magician relies on. He references a study by David Rand and Gordon Pennycook (Lazy, not biased):

This suggests that we share fake news not because of malice or ineptitude, but because of impulse and inattention.

Incidentally, both these studies also form the core of Max Fisher’s article in the New York Times - ‘Belonging Is Stronger Than Facts’: The Age of Misinformation, which lists 3 reasons for people being prone to misinformation:

First, and perhaps most important, is when conditions in society make people feel a greater need for what social scientists call ingrouping — a belief that their social identity is a source of strength and superiority, and that other groups can be blamed for their problems.

…

The second driver of the misinformation era is the emergence of high-profile political figures who encourage their followers to indulge their desire for identity-affirming misinformation.

…

Then there is the third factor — a shift to social media, which is a powerful outlet for composers of disinformation, a pervasive vector for misinformation itself and a multiplier of the other risk factors.

Yikes

Participatory Disinformation

Let’s talk about India’s COVID vaccination strategy. Ok, not the exact strategy, per se. But some of the information flows around it. It has been hard to miss attempts to pin ‘vaccine hesitancy’ on the opposition and the media, whether it is:

Tweets from Hardeep Singh Puri (Twitter search)

Amit Malviya (link)

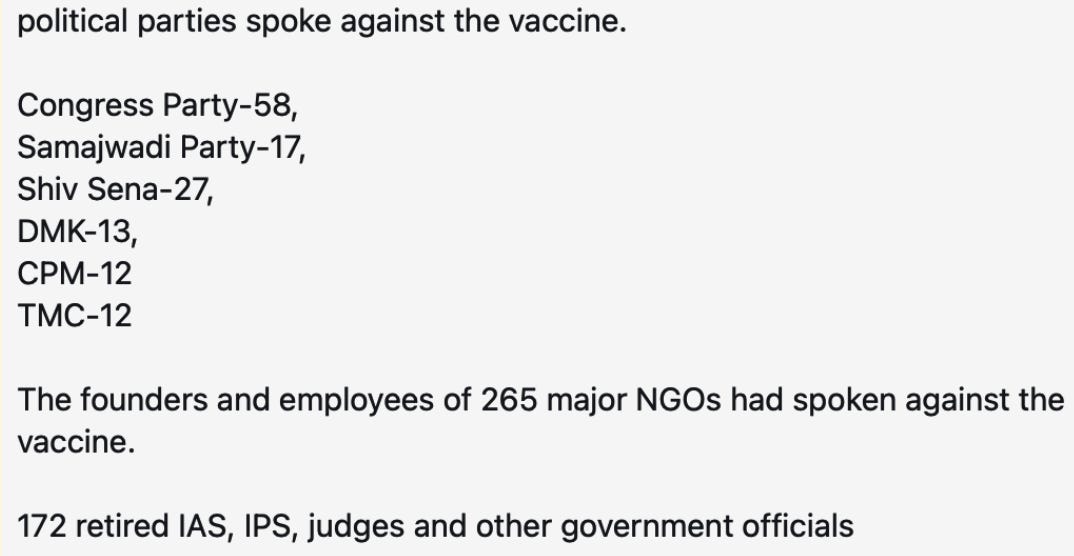

Or various other Twitter handles throwing around numbers of how many articles were published ‘against the vaccine’ (Twitter search). For example, Indian Express ran 182 articles ‘against the vaccine’. I traced these to a thread (archive link) that was posted around 1 AM on 11th May. I also ran a quick Hoaxy search for “Indian Express” AND 182. The 2 tweets at the centre of the biggest 2 clusters received a combined ~1K retweets and 1.5K likes. Not a lot. Not yet, anyway.

It remains to be seen whether the version with these specific numbers will be picked up by larger influencers in the right-wing ecosystem.

But this reminded me of what Kate Starbird calls ‘Participatory Disinformation’.

To paraphrase, political elites, influencers, audiences and even inorganic activity feed off each other for content, signals, etc.

P.S. In Edition 27, I had warned about the need to situate criticism/questions carefully to guard against potential weaponisation and appropriation in the future. We are here. The dead cat is being readied for the dinner table.

Again, I will add that the responsibility should not have been on the people posing questions in good faith. Transparency around Covaxin trials, instead of ‘trust us’, would have avoided a lot of this.

Meanwhile in India

Priyam Nayak, Joe Ondrak, Ishaana Aiyanna and Ilma Hasan on narratives around the post-poll violence in West Bengal [Logically]. Among other things, they observe an import of rhetoric similar to 'Stop the Steal', implying that the elections were unfair. Platforms have had to enforce their Election-Integrity-based policies even more strictly after the U.S. Presidential election. Will that translate to India? And if yes, what happens when you claim the Election Commission is favouring the BJP? Unfortunately, this seems like a question we'll get to sooner rather than later.

A detailed profile of the AltNews team and the context surrounding how it came together, the pressures they face today, etc. [Sonia Faleiro - RestofWorld]

Telecom operators had to deny that any 5G trials were ongoing, as well as any links between 5G and India's second COVID wave [TNIE]. Just another example of how information disorder cross-pollinates.

YouTube has (inconsistently) taken down videos of DIY oxygen solutions on the grounds that they were dangerous [Shamani Joshi - Vice World News].

Denialism and espousing miracle cures during the 2nd wave. [Rahul Bhatia - The New Yorker]

Remember that GOM report which wanted to 'neutralise' criticism (to be fair, we can't say for sure whether the word was used as 'disarm' or 'destroy')? An RTI response suggests “Action taken on the GOM report is still continuing” [Anisha Dutta - Hindustan Times]

A post of IFF's community suggested that Instagram was removing stories related to COVID-relief work. Instagram acknowledged an issue with stories and even put out a slightly more detailed update than usual, indicating that it had impacted posts raising awareness about the situation in Israel (more on that later). But no mention of what happened in India. To my knowledge, Facebook has not acknowledged this yet, even though it seems like it was related.

Around the world

A proposed bill in Michigan would require fact-checking organisations to file a $1M bond and would fine them in case of 'wrongful conduct'. [Beth Leblanc, Craig Mauger - Detroit News]

El Salvador's President has seized the country's official social media accounts rather dramatically. [Alex González Ormerod - Rest of World]

In the small hours of Saturday, May 1, more than 12 hours before a single Nuevas Ideas representative had been officially sworn in, images on the Legislative Assembly’s social media accounts switched to feature imagery that resembled the design and color of Bukele’s own insignia. The same happened at the local level, as hundreds of bukelista municipalities awoke to find their official seals had been graphically aligned with the former advertising executive-turned-president’s look and messaging.

Ukraine's President Volodymyr Zelensky has approved a 'Center for Countering Disinformation' [Yevgeny Matyushenko - UNIAN]

The Center shall analyze and monitor events and phenomena in the information domain, the state of information security, and Ukraine's presence in the global information space.

Also, the CCD shall identify and research the existing and projected threats to the information security of Ukraine, factors affecting their buildup, as well as predict their implications for national interests.

Ariel Saramandi [Rest Of World] on Mauritius' new social media law, which could land people in prison for 'annoyance'.

Anyone in Mauritius who sends a message via the internet that causes or could cause an “annoyance” could end up being sentenced to up to ten years in prison. Anyone can file a complaint and seek damages for a post, share, or even a like that “is likely to cause or causes annoyance, humiliation, inconvenience, distress, or anxiety.”

The UK announced its much-anticipated Online Safety Bill.

Open Rights Group called the proposals 'Kafkaesque':

“This is a flawed approach which makes no attempt to bring law breakers to justice. Instead it tries to put the problem solely on the shoulders of platforms. But Facebook is not the police and does not operate prisons.

“It also pushes for lawful content, including in private messages sent through these platforms, to be monitored and scoured for alleged risks. The idea that private messages should be routinely checked and examined is extraordinary.”

'Hostage-taking laws' are being passed in many countries [Vittoria Elliott - Rest of World]

Jason Pielemeier, policy director of the Global Network Initiative, an organization that promotes free expression online, has nicknamed the regulations “hostage-taking laws,” because they could be used to detain workers from social media platforms if they refuse to take government orders. “I think it’s less likely about threatening to shoot someone,” Pielemeier said. “It’s more about ‘We’re going to take their liberty away.’”

Big Tech/Model Watch

Facebook continues to make mistakes or content moderation decisions with significant political impact. This time, Palestinian users were affected by at least 2 known errors/mistakes - the story removal issue referenced earlier and, as Ryan Mac reports, the miscategorisation of the Al-Aqsa mosque as being associated with “violence or dangerous organizations.”

Ernie Piper [Logically] includes examples from other platforms as well. This is absurd.

Laquesha Bailey on Instagram's Infographic Activism. How much of this is down to Instagram's design choices?

A common theme across infographic activism is its propensity for presenting unsourced and unverifiable information. Regarding citations, these graphics tend to do one of three things. They don’t highlight where they sourced the data from at all, which is reprehensible because we should all endeavour to give credit where credit is due. In any other space, biting off the ideas of another creator and repeating their words verbatim is considered plagiarism, but in the cogs of the infographic machine, it’s perfectly acceptable.

Do you remember 'social media companies all starting to look the same' from Edition 26? Well, here's another one about creator economy features.

Studies/Analyses

Mitali Mukherjee, Aditi Ratho, Shruti Jain - Unsocial Media: Inclusion, Representation, and Safety for Women on Social Networking Platforms [ORF]

Cynthia Khoo - Deplatforming Misogyny [LEAF]

Heidi J.S. Tworek - Fighting Hate with Speech Law: Media and German Visions of Democracy

This article examines why German politicians turned to law as a way to combat the rise of the far right. I explore how NetzDG represented German political understandings of the relationship between freedom of expression and democracy and argue that NetzDG followed a longer historical pattern of German attempts to use media law to raise Germany's profile on the international stage. The article examines the irony that NetzDG was meant to defend democracy in Germany, but may have unintentionally undermined it elsewhere, as authoritarian regimes like Russia seized upon the law to justify their own curtailments of free expression. Finally, I explain the difficulties of measuring whether NetzDG has achieved its goals and showcase a few other approaches to the problems of information in democracies.

While experiences with correction are generally unrelated to misperceptions about COVID-19, those who correct others have higher COVID-19 misperceptions.