Of Not Quite Shadowbans, Content Moderation Issues, Transparency, FIR-ing line 3 and more

The Information Ecologist 59

What is this? Surprise, surprise. This publication no longer goes by the name MisDisMal-Information. After 52 editions (and the 52nd edition, which was centred around the theme of expanding beyond the true/false frame), it felt like it was time the name reflected that vision, too.

The Information Ecologist (About page) will try to explore the dynamics of the Information Ecosystem (mainly) from the perspective of Technology Policy in India (but not always). It will look at topics and themes such as misinformation, disinformation, performative politics, professional incentives, speech and more.

Welcome to The Information Ecologist 59

Yes, I’ve addressed this image in the new about page.

Note: You’ll notice that this edition is coming in later than the usual Tuesday morning (in India) slot. That’s because I tested positive for COVID-19 over the weekend and couldn’t write this up on Monday. This is also not quite a usual edition in that it is just a series of 4-5 short blurbs.

In this edition:

Since this is not quite a regular edition, I thought it would make sense to look at things that are not quite what they seem/claim to be:

Not quite a shadowban (but not quite nothing either)

Not quite a content moderation issue

Not quite transparency

Not quite overrun with misinformation

FIR-ing line 3

Not quite …

… A Shadowban (but not quite nothing either)

Last week, news emerged that Rahul Gandhi had written to Twitter’s CEO back in December about an observation that the follower count of his account had not increased since August 2021 [The Indian Express]. That timeline is notable since it was when his account was temporarily locked following a complaint by the National Commission for Protection of Child Rights (NCPCR) to the Delhi Police for revealing the identity of a sexual assault victim (by posting a picture with the family) [Roobina Mongia - NDTV].

Now, Twitter expectedly played the equivalent of ‘nothing to see here’ and pointed to a policy about zero-tolerance for platform manipulation and spam (see Indian Express story linked earlier). However, some analyses do suggest that it isn’t quite as simple as that.

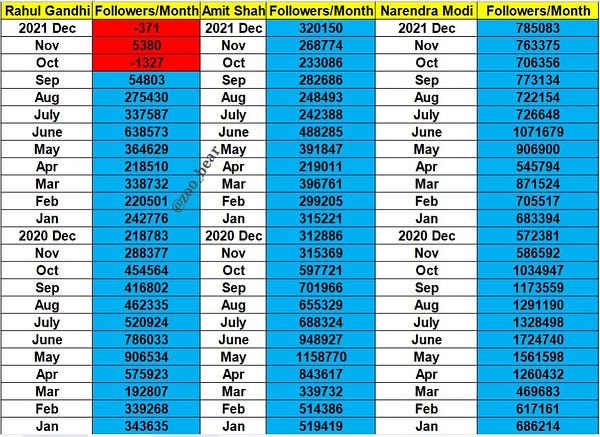

Here’s one by Mohammed Zubair of AltNews, which indicates a sharp drop in new followers/month for Rahul Gandhi v/s Amit Shah and Narendra Modi (see the whole thread).

Here’s a report by the Wall Street Journal citing data from Emplifi and Social Blade to say that “he gained an average of nearly 400,000 new users a month in the first seven months of 2021. That plummeted to an average of fewer than 2,500 monthly from September to December” [Shan Li and Salvador Rodriguez - WSJ (potential paywall)]. Worth pointing out that they also reference a shadowban.

Mr. Gandhi’s letter didn’t explain how exactly he believed his follower numbers were restricted. Technology experts say it is possible to reduce an account’s influence—without outright banning it or deleting content—through a practice known as “shadow banning,” which can include removing accounts from recommended lists and limiting visibility of posts.

The term shadowban, when traditionally used, is accompanied by allegations that the visibility/reach of content has been reduced/limited in some significant way.

That’s not quite what appears to have happened here, as an analysis by Joyojeet Pal and Anmol Panda showed that Rahul Gandhi’s account still received more median weekly retweets than other politicians in their control group (“Twitter handles of Narendra Modi, Amit Shah, Shashi Tharoor, Yogi Adityanath, and Akhilesh Yadav”). There was a drop, though, so one can’t make a clear-cut argument about ‘shadowbanning’ in the reduced reach sense (see the whole thread/post linked in the thread).

But what these analyses make clear is that something strange happened here. One can’t really say whether it was deliberate or not. Perhaps some sort of controls kick in once an account is locked, but that’s only a guess. Only Twitter can tell us what actually happened here. Maybe this is something for their META team to look into?

… A Content Moderation Issue

I wonder if the Substack people are thankful to the Spotify people for the Joe Rogan Experience. Why? Well, last week started off with the content moderation conversation focusing on Substack thanks to a study by the Center for Countering Digital Hate which claimed that it was making “millions of dollars off anti-vaccine disinformation” [Elizabeth Dwoskin - The Washington Post], but by the time you were Out on the Weekend, *most* of the attention seemed to have shifted to Spotify, Joe Rogan, Neil Young and Joni Mitchell. The cast of characters involved is still evolving. E.g., as I was writing this, Dwayne Johnson found his way into it, so I’m not going to do a full summary. There are also many threads to pull on here, and I’ll just stick to one: Substack and Spotify framing these as ‘content moderation’ and ‘censorship’ problems. And sure, there’s an element of content moderation here. As Tarleton Gillespie has pointed out, content moderation is the essence of what many of these platforms do.

Yet, this is not just a content moderation issue - and many intelligent people have been pointing that out.

(There were a lot more, but my bookmarking process seems to have failed me, and I can’t find them :( )

Now, I’m not necessarily suggesting that Substack should go forth and deplatform thousands of newsletters, or that Spotify can solve misinformation on podcasts - which is a significant challenge - complicated further by the fact that you can’t entirely block RSS feeds (people can just go and get that content elsewhere). But, the dynamics are significantly different when you’re paying the content creator for an exclusive contract or allowing them to raise revenue through subscriptions and taking a cut in the process. evelyn douek is spot (ify?) on when she suggests that Spotify is being disingenuous trying to pass off what is clearly an editorial decision as a content moderation problem. And so is Substack’s censorship defence, when it is not transparent about which writers it pursues with deals through Substack Pro (yes, yes, I know you’re reading this courtesy of Substack). And, for all its railing about the ‘ad model’, it does take a cut from many writers who are earning subscription revenues through their platform and have been deplatformed from a bunch of other platforms for various violations (hello, incentives 👋 ). When monetisation in the form of payments/raising money gets involved, it is not quite just a content moderation issue. Yes, I know this potentially widens the net to include fundraising services, which is pretty tricky, and a topic for another day. This was supposed to be a short blurb, remember?

P.S. I will confess I have never listened to Joe Rogan’s podcast nor read/subscribed to any of the writers for which Substack is getting criticised. My comments are not a judgement on their content but from the perspective of the standards that platforms should seek to follow when they enable monetisation.

… Transparency

Con-sparency / Convenient Transparency: In December 2021 and January 2022, the Government of India exercised emergency powers that it gave itself in the February 2021 IT Rules to take action against YouTube channels and websites from Pakistan. Somewhat uncharacteristically, these actions were accompanied by Press Releases including channel names, content examples and estimates of viewership (which was interesting, because I didn’t know what to make of global numbers in this context).

December Press Release: India dismantles Pakistani coordinated disinformation operation

January Press Release: India strikes hard on Pakistani Fake News Factories

The Press Release titles tell us why these were made public, because, very often, legal requests/content takedown requests are made non-transparently. The rules have confidentiality clauses baked in (anyone from the opposition want to take this up? No? I didn’t think so.) So, when we do find out, it is only because it is convenient for us to do so. In other words, convenient transparency, or con-sparency, or maybe, just a con.

Related: As I was writing this, a story came out about ‘tense and heated’ conversations between officials of the Ministry of Information and Broadcasting and executives of Google, Twitter and Facebook because they aren’t proactive enough about taking down ‘fake news’ [Aditya Kalra - Reuters]. MIB’s involvement is odd, as Apar points out:

Schrödinger’s Transparency: Sticking with the IT Rules 2021, Anandita Mishra and Krishnesh Bapat recently wrote an interesting column on BarAndBench about the monthly transparency reports from Significant Social Media Intermediaries that have been mandated by the rules [Bar And Bench].

… Overrun with misinformation

What is the prevalence of misinformation on social media? This is a great question, and I don’t know when we’ll be able to answer this in a meaningful way. But, in a recent interview, Kiran Garimela made some interesting observations on this subject. Essential to note: potentially low overall prevalence, but a significant shift when you narrow down to targeted/fear-speech-based content (emphasis added). [Karishma Mehrotra - Scroll.in]

We went to party offices in Uttar Pradesh and asked social media persons there to add us to WhatsApp groups, which some of them did. This allowed us to collect hundreds of groups that are internal BJP groups, not publicly accessible. We looked into these groups for misinformation, hate and partisanship.

One of our key findings is that the overall prevalence of misinformation or hate speech might not be that high. When people think of WhatsApp, they think of a cesspool of hate and misinformation. We don’t find that. It’s mostly uninteresting content of rally pictures or good morning messages or pictures of gods.

The main catch is, if you look at messages about Muslims, then there is a lot of misinformation and hate. Roughly 1% to 2% [of messages in general] are misinformation but if you condition on it being about Muslims, then the percentage just jumps 10 times, like 20%.
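To see how a low overall figure and a high targeted figure can sit side by side, here is a minimal sketch of the arithmetic. The message counts are hypothetical, picked only to land near the rough percentages in the quote; they are not from the study, and `prevalence` is just an illustrative helper.

```python
def prevalence(total, in_subset, misinfo_in_subset, misinfo_elsewhere):
    """Return (overall rate, rate within the subset) for misinformation."""
    overall = (misinfo_in_subset + misinfo_elsewhere) / total
    conditional = misinfo_in_subset / in_subset
    return overall, conditional


# Hypothetical counts (assumptions, not data from the interview or the study):
# 100,000 messages in total, 5,000 of them about the targeted group.
overall, conditional = prevalence(
    total=100_000,
    in_subset=5_000,
    misinfo_in_subset=1_000,  # misinformation within the targeted subset
    misinfo_elsewhere=500,    # misinformation in all other messages
)

print(f"Overall prevalence: {overall:.1%}")
print(f"Prevalence within the targeted subset: {conditional:.1%}")
```

With these made-up numbers, the subset is only a small slice of all messages, so even a high rate inside it barely moves the overall figure. That is the pattern the quote describes, though the real proportions may well differ.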

Somewhat related: A research note from mid-January argued that the overall prevalence of misinformation is low, and more effort should be dedicated to increasing trust in reliable news sources [Alberto Acerbi, Sacha Altay, Hugo Mercier - HKS Misinformation Review]. They are careful to point out that this may not be globally applicable and that it considers only news sources (not posts by individuals, group chats, memes).

studies concur that the base rate of online misinformation consumption in the United States and Europe is very low (~5%) (see Table 1)

….

Overall, these estimates suggest that online misinformation consumption is low in the global north, but this may not be the case in the global south (Narayanan et al., 2019)

…

Our model shows that under a wide range of realistic parameters, given the rarity of misinformation, the effect of fighting misinformation is bound to be minuscule, compared to the effect of fighting for a greater acceptance of reliable information (for a similar approach see Appendix G of Guess, Lerner, et al., 2020). This doesn’t mean that we should dismantle efforts to fight misinformation, since the current equilibrium, with its low prevalence of misinformation, is the outcome of these efforts. Instead, we argue that, at the margin, more efforts should be dedicated to increasing trust in reliable sources of information rather than in fighting misinformation

FIR-ing line 3

Some notes from my efforts to track arrests/cases/FIRs/threats related to posts on Social Media.

Here’s an external link to the tracker: Tracking Arrests / Cases / FIRs / Threat(s) / Detentions related to posts on Social Media (notion.site). The bits here are missing context, so I recommend clicking through to the tracker and the news stories themselves for more context.

Nearly 10 entries in the ‘it went viral’ bucket, which means that law enforcement took action after evidence of an event (typically a video) was widely shared on social media, as reported by the news article. The phrase often used is ‘went viral’. Anyway, the incidents ranged from disrespecting the national flag and assaulting a person for allegedly urinating in front of a cow to violating election code and COVID-19 norms, and more.

Among actions for posts: action against a BJP youth leader in Tamil Nadu for alleging that temples have been demolished since the current state government was elected; a “Youtuber and journalist” from Mumbai arrested by the Madhya Pradesh police for allegedly sharing an edited video of the state’s Chief Minister; accusations that the police in Kerala are registering false cases against Muslims for various kinds of social media posts; and a case in J&K for allegedly insulting the Prime Minister. Mumbai police also registered Rana Ayyub’s complaint about a photoshopped tweet alleging that she hated India and all Indians.

1 entry in the threats bucket: Chennai’s police commissioner warned of action against people who use social media to create ‘religious hatred or disturb public tranquillity’.