Of Turfwars, Memes and Book(ing) Cases, The Trenches and lots of Bee-tee-dubs

MisDisMal-Information Edition #4

What is this? This newsletter aims to track information disorder, largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive, etc., who already do some great work. It may periodically feature some of their fact-checks.

Welcome to Edition #4 of MisDisMal-Information.

Correction (25th May): This edition initially undercounted the percentage of anti-China and Islamophobic tweets. This has been updated.

YouTubers v/s TikTokers and the usual discourse

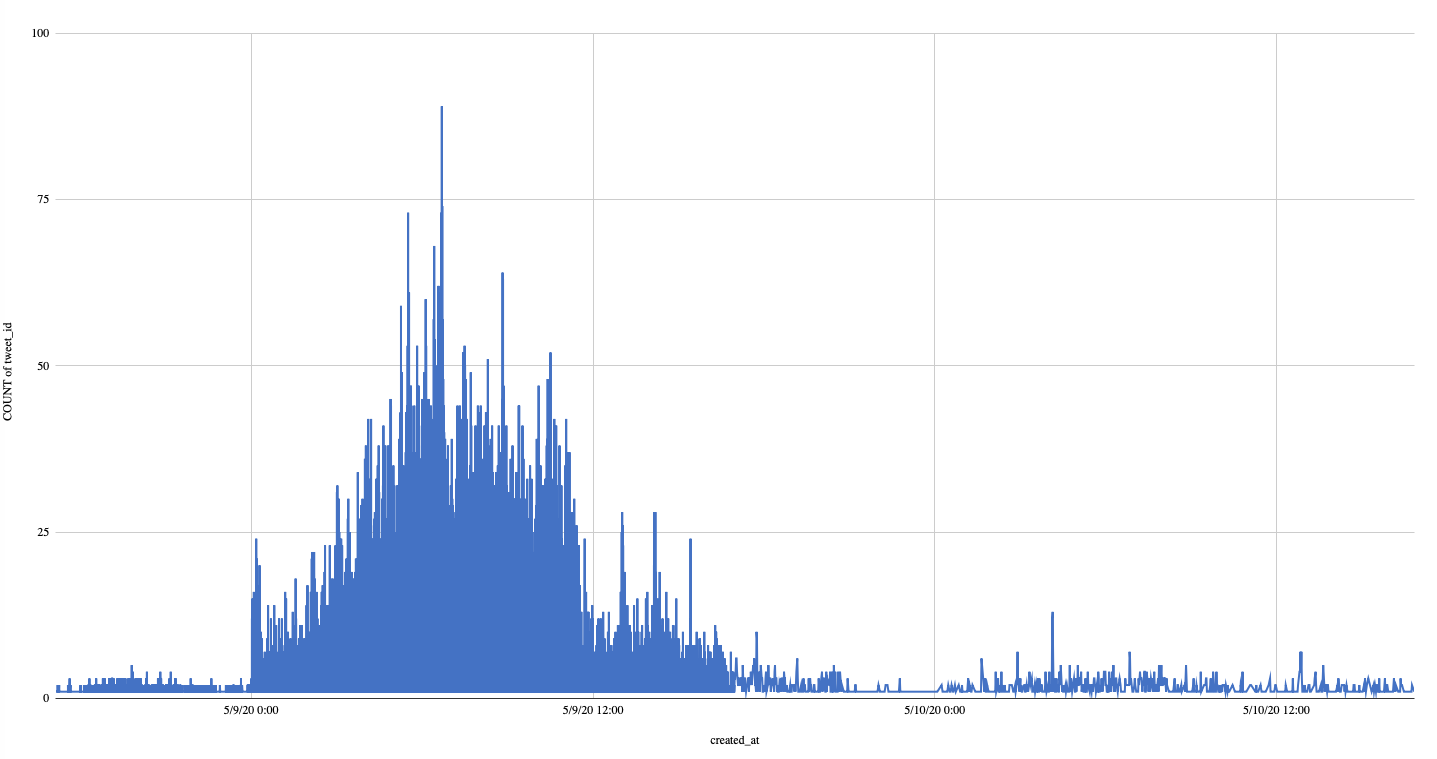

I must admit that I am probably too old to understand some of this, so I won't go into the whys or comment on the content. The gist of it is that a certain YouTuber roasted a TikToker (yes, these are words now, I checked) just as we were getting into the second week of May. Somewhere between this roast and 9th May, it turned into a call to ban TikTok in India, so much so that it was actually trending on Twitter. When I looked into this trend on twitter.com, I fully expected to find terms like 'cringe' or perhaps some casteist tweets in there. Why? Read this piece by Jyoti Yadav in ThePrint. But when I looked it up, aside from content directly related to this YouTube-TikTok turf war/feud, I also came across what looked like a decent amount of anti-China and Islamophobic content. Intrigued, I analysed about 50K tweets from this period.

It was interesting to see that nearly a third of the tweets came from accounts created in the last few months. The word 'cringe', though, was used in only 0.1% of the tweets. However, ~7.3% of the tweets contained religiously loaded terms like hindu, muslim, jihad, lovejihad, religion, culture etc., and another ~5.7% referred to china/chinese, revenge, virus, statements incorrectly attributed to TikTok's founder etc. I am deliberately not repeating or reposting any of that content here because I do not want to amplify it. But the fact that ~13% of the tweets were so charged is surprising to me. In my mind, that number is simultaneously higher and lower than I expected.

Why? Let me explain:

Higher, because this was largely a face-off between two types of content creators/platforms (on a third platform, no less). That such rhetoric made its way in just goes to show how the same event can drive many different narratives. I plan to see whether this emerges as a pattern as I analyse more events on Twitter.

Lower, because, based on where I saw some of this content positioned in search results on twitter.com, I went into the analysis expecting to find well over 15-20% of such content. Of course, since my search was largely textual, I could have missed images/videos that drove similar narratives without any accompanying text.
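For anyone curious about what a "largely textual" search looks like in practice, here is a minimal sketch of keyword-based tagging in Python. It is not the exact method used for the analysis above: the keyword lists are assumptions built only from the terms mentioned earlier, the function names `tokenize` and `category_shares` are hypothetical, and the sample tweets are made up. Each tweet is counted under the first category it matches, so the category shares are disjoint and can simply be added up (the way ~7.3% and ~5.7% add up to ~13%).

```python
from __future__ import annotations

import re
from collections import Counter

# Hypothetical keyword lists built from the terms mentioned above; the exact
# lists used in the original analysis are not published here.
CATEGORIES = {
    "religious": {"hindu", "muslim", "jihad", "lovejihad", "religion", "culture"},
    "china": {"china", "chinese", "revenge", "virus"},
}


def tokenize(text: str) -> set[str]:
    """Lower-case a tweet and return its alphanumeric word tokens.

    Hashtags such as '#lovejihad' reduce to the bare word 'lovejihad'.
    """
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def category_shares(tweets: list[str]) -> dict[str, float]:
    """Return the percentage of tweets that fall into each category.

    Each tweet is counted under the first category whose keywords it
    contains, so the categories are disjoint and their shares can be summed.
    """
    counts = Counter()
    for tweet in tweets:
        tokens = tokenize(tweet)
        for name, keywords in CATEGORIES.items():
            if tokens & keywords:  # at least one keyword appears in this tweet
                counts[name] += 1
                break  # stop at the first matching category
    total = len(tweets) or 1  # avoid dividing by zero on an empty input
    return {name: 100 * counts[name] / total for name in CATEGORIES}


# Made-up sample tweets, purely for illustration.
sample = [
    "Ban TikTok now #lovejihad",
    "This is about culture",
    "Chinese app, ban it",
    "What a roast",
]
print(category_shares(sample))  # {'religious': 50.0, 'china': 25.0}
```

Plain keyword matching like this carries the limitation mentioned above: it cannot see images or videos, and words like 'culture' or 'virus' also turn up in perfectly innocuous tweets, so the numbers it produces are rough indicators rather than exact counts.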

Oh, and there was a redux on 14th/15th May when said YouTuber's video was taken down by the platform. I did a quick analysis of 35K tweets: almost a quarter of them came from accounts created in May, in other words, barely two weeks before the event itself. And nearly 45% came from accounts created in the last few months.
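The account-age figures above are per-tweet shares: the percentage of tweets whose author's account was created after some cutoff date. A minimal Python sketch of that calculation follows, assuming you already have one account-creation date per tweet. The function name `share_from_new_accounts` and all the dates, including the year, are hypothetical and only for illustration.

```python
from __future__ import annotations

from datetime import date


def share_from_new_accounts(created_dates: list[date], cutoff: date) -> float:
    """Return the percentage of tweets whose author's account was created on or after `cutoff`.

    `created_dates` holds one account-creation date per tweet, so an account
    that tweets five times is counted five times, matching a per-tweet share.
    """
    if not created_dates:
        return 0.0
    recent = sum(1 for created in created_dates if created >= cutoff)
    return 100 * recent / len(created_dates)


# Hypothetical creation dates for the authors of four tweets.
created = [date(2020, 5, 3), date(2020, 5, 10), date(2020, 3, 20), date(2019, 11, 1)]
print(share_from_new_accounts(created, date(2020, 5, 1)))  # 50.0 (created in May)
print(share_from_new_accounts(created, date(2020, 2, 1)))  # 75.0 (created since February)
```

Counting per tweet rather than per account is a deliberate choice here: a handful of very active new accounts can push the share up a lot, which is worth keeping in mind when reading figures like "a quarter of the tweets".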

If you want to know more about the whole saga, you can read up here.

From Memes to Book(ing)-cases

Props to Mumbai Police's Twitter account for catching on to this scene from the first episode of Paatal Lok.

But it isn't all fun and games. There does appear to be a very clear pattern of cases being booked against individuals/organisations for 'fake news' or 'misinformation'.

In Goa, a 60-year-old man was arrested.

In Maharashtra, the State Cyber Department has lodged 366 cases: 155 related to WhatsApp, 143 to Facebook, 42 to YouTube, 16 to TikTok, 6 to tweets and 4 to Instagram content.

In Himachal Pradesh, 14 FIRs were registered against reporters.

In Dholpur, Rajasthan, the District Collector went to the extent of banning "posting all kinds of unverified news on all social media platforms, including YouTube, Facebook, Instagram, WhatsApp, Twitter, LinkedIn and Telegram, till further orders."

In Meghalaya, an FIR was filed against a news channel for reporting that a minister in the state had tested positive for COVID-19.

Shoaib Daniyal writes a piece in Scroll about how the Indian state is essentially weaponising the 'fake news' tag.

But, of course, not everyone is equal: after calling out an MP on Twitter, the Delhi Police went ahead and deleted the tweet.

From the trenches

Brandy Zadrozny of NBC News wrote a fascinating article featuring Claire Wardle, Kate Starbird and Joan Donovan, who have been working on information disorder for a long time (it wasn't always called that, though). I have been following their work for some time now (you would have seen them in previous editions too), and if you are watching this space, you really should be tracking the work they put out.

This quote from Joan Donovan is probably something you've already come across (emphasis mine):

"Information is extremely cheap to produce," she said. "That's why misinformation is a problem, because you don't need any evidence, you don't need any investigation, you don't need any methods to produce it. Knowledge is incredibly expensive to produce. Experts are sought after, and they aren't going to work for free. So platform companies can't rely on the idea that the web is something we build together."

If you hadn't, well:

Shweta Bhandral wrote an interesting piece titled Fact-Checker’s Life: Exposing Fake News and Communalism, Surviving Social Boycott, highlighting some stories from India.

~~Slaying~~ Flagging Zombie Information Disorder

In Edition 3, I touched upon how Wayback Machine archives are being used to give debunked misinformation a zombie afterlife. Well, now the Internet Archive is warning readers about such pages. Ok, maybe slaying is too optimistic, so I went back and re-titled this section. Doesn't have the same ring to it, though.

Email order Information Disorder

This is a few weeks old now, but Craig Silverman over at BuzzFeed wrote about 'Masks that seemed too good to be true'. It is largely a familiar tale: misleading claims to sell products etc. etc. What struck me was the targeting of 'conservative email lists' and 'conservative websites', and even the use of the term 'conservative email marketing'. It is almost as if people's beliefs are being used to peddle products to them. Gasp!

Oh, and he recently wrote about one of the most despicable types of scam imaginable, which is also profiting in COVID times: a pet scam.

Information Operations

Sarah Cook summarises some of the recent shifts in China's information operations in the China Media Bulletin. Meanwhile, China's ambassador to Canada claims that the country is a victim of coronavirus disinformation.

Joshua Brustein writes about the Global Engagement Center (what a harmless-sounding name) in The Tiny U.S. Agency Fighting Covid Conspiracy Theories Doesn’t Stand a Chance.

Harvard Kennedy School Misinformation Review on Italy as the COVID-19 disinformation battlefield

The Daily Beast debunks a report that claimed COVID-19 originated in a 'Wuhan Lab'. The report was produced by an American Department of Defense contractor.

Bee-Tee-Dubs

No, no, I haven't discovered my inner millennial voice (or is it a Boomer term?). This is the recurring section called Big Tech Watch (BTW). This edition will be a little heavy on Bee-Tee-Dubs.

I am going to break from my usual format of going company-wise.

First up is a study titled How search engines disseminate information about COVID-19 and why they should do better. The researchers looked at how search engines select and prioritise information related to COVID-19, what impact randomisation has on what we see, and how much results vary based on the language of the search query. A few notable and quotable quotes from it:

We identified large discrepancies in how different search engines disseminate information about the COVID-19 pandemic....For example, we found that some search algorithms potentially prioritize misleading sources of information, such as alternative media and social media content in the case of Yandex, while others prioritize authoritative sources (e.g., government-related pages), such as in the case of Google.

Your search engine determines what you see: We found large discrepancies in the search results between identical agents using different search engines....While differences in source selection are not necessarily a negative aspect, the complete lack of common resources between the search engines can result in substantial information discrepancies among their users, which is troubling during an emergency.

The search results you receive are randomized: We observed substantial differences in the search results received by the identical agents using the same search engine and browser.... One possible explanation for such randomization is that the search algorithms introduce a certain degree of “serendipity” into the way the results are selected to give different sources an opportunity to be seen.

Search engines prioritize different types of sources: ...Considering their ability to spread unverified information, these differences in the knowledge hierarchies constructed by the search engines are troubling. For some of the search engines, the top search results about the pandemic included social media (e.g., Reddit) or infotainment (e.g., HowStuffWorks). Such sources are generally less reliable than official information outlets or quality media. Moreover, in the case of Yandex, the top search results included alternative media (e.g., https://coronavirusleaks.com/), in which the reliability of information is questionable.

A study published in Nature goes into the differences in behaviour between pro-vaccination and anti-vaccination groups on Facebook.

Although smaller in overall size, anti-vaccination clusters manage to become highly entangled with undecided clusters in the main online network, whereas pro-vaccination clusters are more peripheral. Our theoretical framework reproduces the recent explosive growth in anti-vaccination views, and predicts that these views will dominate in a decade.

As if that thought isn't sobering enough, Kevin Roose asks you to Get Ready for a Vaccine Information War. And DFR Lab goes into why that Plandemic documentary just won't go away. Answer: '"censorship-free" alternatives to the major platforms'. Wait, what? Wasn't that supposed to be our way out of Big Tech's clutches?

A study in BMJ Global Health titled YouTube as a source of information on COVID-19: a pandemic of misinformation? looked into exactly that. Now, I had an issue with the way this study was covered. The researchers started with a pool of 150 videos and whittled that down to 69, of which about a quarter, with 62M views between them, had misleading content. For comparison, YouTube claims that one billion hours of content are watched on its platform every day, generating 'billions' of views. Meanwhile, some publications covered the study with headlines claiming that 1 in 4 videos on YouTube is factually inaccurate. Given where this study started, that's quite an extrapolation. Note that the researchers themselves make no such claim.

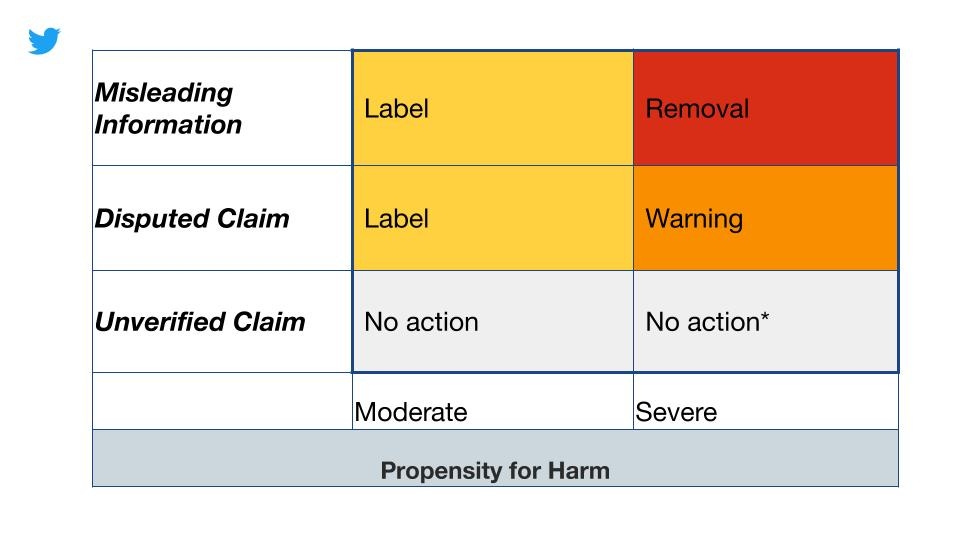

Twitter is updating its approach to misleading information and will start flagging tweets with different kinds of warning labels. This is all great in theory, but the trouble is in defining some of these terms. As much as policy wonks like to analyse things through frameworks, the real world doesn't like to conform to them.

In an episode of VergeCast, Alex Stamos talks about the role TikTok may play in the upcoming US presidential election. Rolling Stone has an article on COVID-19 conspiracies on the platform.

France wants online content providers to remove terrorist content and CSAM (child sexual abuse material) within an hour. That's not going to be easy.

Facebook apologised for its role in Sri Lanka's anti-Muslim riots in 2018. Also read this piece by RSIS on Islamophobia.