Of Oxygen, Amplification, You Are Under Arrest, Plandemic Returns and You Can’t Stop This.

MisDisMal-Information Edition 15

What is this? *This newsletter aims to track information disorder largely from an Indian perspective. It will also look at some global campaigns and research.*

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive etc. who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition 15 of MisDisMal-Information

Mask, No Mask and The Oxygen of Amplification

I can see what’s happening?

What?

And we don’t have a clue

Who??

We keep doing the same things, again and again. I don’t know what to do.

Huh?

I wish I could say this was a Hakuna Matata kind of situation, but it isn’t. There’s plenty to worry about. But before I send you down to the depths of despair, here’s a gif to soften the fall.

What on earth are you going on about, Prateek??

Fine, fine. I’ll come right to it. I am, of course, talking about…

Amplification

You would probably have guessed from the title of this section which incident I am talking about. No? Anti-mask protests are moving closer to home (well, I don’t know where you are, but closer to mine, anyway). I am going to avoid calling it an ‘Indian Anti-mask’ movement. There’s no need to make it sound bigger than it actually is.

What happened next? A bunch of people exclaimed on Twitter, many made fun of it. One publication ran a story repeating its claims in the title. Another did a story about such movements ‘gaining momentum’.

Now, before going further, I am going to digress and talk a bit about a 3-part series by Whitney Phillips called *The Oxygen of Amplification* (Data and Society, 2018), which was the outcome of a number of interviews/conversations she had with journalists about their role in covering alt-right movements and Trump’s first presidential campaign.

A couple of things really stood out to me. I will rely primarily on quoting, because I think the language she uses is very important. While a lot of these are in the context of reporters/journalists, many apply to us as social media users too.

What reporters covering “alt-right” antagonisms didn’t anticipate, however, was the impact this reporting would have on unintended audiences; … As the stories themselves and social media reactions to these stories ricocheted across and between online collectives, what was meant as trolling was reported (and reacted to) seriously, and what was meant seriously was reported (and reacted to) as trolling—all while those on the far-right fringes laughed and clapped.

Through reporters’ subsequent public commentary—commentary that fueled, and was fueled by, the public commentary of everyday social media participants— countless citizens were opened up to far-right extremists’ tried and true, even clichéd, manipulations. This outcome persisted even when the purpose of these articles and this commentary was to condemn or undermine the information being discussed.

However critically it might have been framed, however necessary it may have been to expose, coverage of these extremists and manipulators gifted participants with a level of visibility and legitimacy that even they could scarcely believe, as nationalist and supremacist ideology metastasized from culturally peripheral to culturally principal in just a few short months.

That is to say, any coverage results in amplification, in a sense.

She also points out structural issues in the media ecosystem (I’m paraphrasing here).

Reliance on analytics essentially incentivises clickbait-y content instead of deep reporting.

“The journalistic imperative” to cover what is perceived to be relevant to public interest. Attempting to ‘balance perspectives’ and essentially putting even harmful views on the same level as facts.

It is a job - people get assigned stories they may not want to do, may not be interested in, may not agree with.

Misconceptions about who the audience might be, and a lack of diversity.

I am not directly in the media ecosystem in India, but I suspect many of these would apply here as well. This is why I haven’t linked to the pieces/tweets I mentioned earlier. My intention isn’t to shame people.

Now, let’s get back to this anti-mask thing, or, for that matter, any political leader who makes an outrageous statement, or anyone spewing hate or engaging in targeted harassment.

The underlying dynamics are similar: the more coverage or purchase they get, the greater the incentive for others to flood the information ecosystem with similar content.

Does this mean such incidents should never be amplified, and should instead be swept under the rug? Sigh, of course not.

There is no shortcut to figuring out when to do this either. The tricky part is determining whether something needs coverage or not. I did say there were no Hakuna Matatas here, didn’t I?

Based on this series, I’ve also made a quick mind-map of what participants felt were the trade-offs between choosing to amplify something or not, along with recommendations on what to consider. I strongly recommend reading the entire series.

Of course, this wouldn’t be MisDisMal-Information if I didn’t immediately throw a contradiction at you. So let’s talk about Hindustani Bhau. Ok, let’s not. But the gist of it is this:

Man spew hate -> People report -> Platform say ‘Policy not violated’ -> People outrage -> Platform say ‘Ok, ok, Policy violated’ -> Man account suspended.

This is a story we’ve seen before.

But wait, turns out, his Facebook account as a ‘Public Figure’ is still around (as of 21 Aug evening, when I am writing this). So is his Twitter account (or at least a Twitter account purportedly managed by one of the people who also manage his Facebook account), where he doubled down on the same video (the one for which his Instagram account was taken down) by quote-tweeting Kunal Kamra (who has 1.6M followers), who had shared the video and flagged it to the Mumbai Police. You can’t make this up, and I really wish I were.

Which brings up another question - when one account is suspended by a platform, are other platforms obliged to follow suit? Heck, is the same company expected to follow suit on another platform? Or do we have to rely on outrage-cycles all the time? Because that’s a sign that ‘due-process’ isn’t working.

And now, a story that sums up most of this section.

Laura Loomer won a primary in Florida. Wait, Prateek. Who is she?

Excellent question.

She was de-platformed in 2018. It got a lot of coverage. She handcuffed herself to Twitter’s NY office. It got a lot of coverage. She gained influence, leveraged it and clearly capitalised on it.

If you read the Oxygen of Amplification series and some of the coverage around these events, the points in that mind map make a lot more sense.

Becca Lewis has an important thread on this, in the context of de-platforming.

Oh, and remember the anti-mask video? The guy who posted it has been de-platformed at least once before; he keeps coming back.

Meanwhile in India

The Facebook Saga

A lot of ink has been spilled, and even more pixels have been rendered, regarding this. I don’t think I can do it justice with a ‘round-up’, so I’ll keep this sub-section relatively short.

There are some serious underlying issues here, and it is good that the Parliamentary Standing Committee is looking into the allegations against Facebook. But there is also a very real risk of the Congress v/s BJP aspect of it taking attention away from some of the structural issues at play.

As people smarter than me have argued (the people I mention):

This is a function of how public policy roles are defined in many organisations. It is not limited to Facebook. Government Relations, Policy Definition, Policy Enforcement and Philanthropy roles need to be clearly separated.

But Prateek, why are you telling a private corporation how to run their operations?

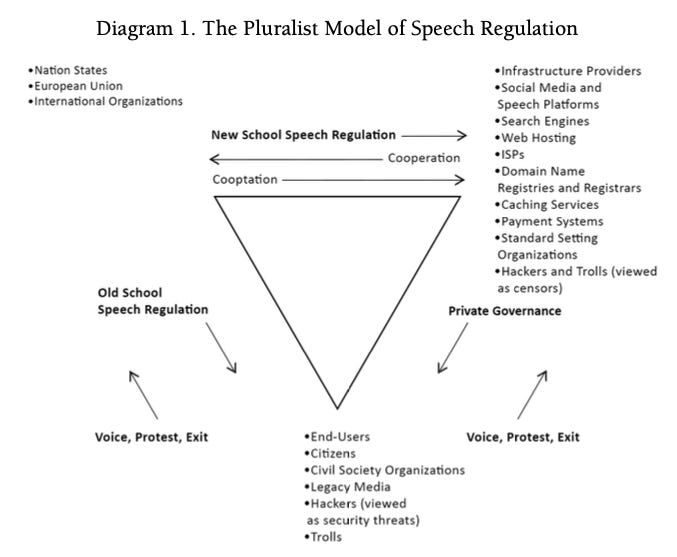

Ah, excellent question (you are on a roll today). To answer this, I want to draw on Jack Balkin’s “Free Speech in the Algorithmic Society”. In the paper, he talks about ‘private governance of speech’ and ‘New School’ speech regulation.

In the Pluralist Model of Speech Regulation he proposes, platforms belong in the upper right-hand corner of the inverted triangle.

The Algorithmic Society also changes the practical conditions of speech as well as the entities that control, limit, and censor speech. First, digital speech flows through an elaborate privately-owned infrastructure of communication. Today our practical ability to speak is subject to the decisions of private infrastructure owners, who govern the digital spaces in which people communicate with each other. This is the problem of private governance of speech.

Nation states, understanding this, have developed new techniques for speech regulation. In addition to targeting speakers directly, they now target the owners of private infrastructure, hoping to coerce or coopt them into regulating speech on the nation state’s behalf. This is the problem of “New School” speech regulation.

Since social media platforms are essentially ‘private bureaucracies’, how they choose to moderate content matters immensely. And, as we’ve established many times before, Content Moderation is Hard.

You are under arrest

Updated numbers from Maharashtra: the Cyber Department has ‘so far’ registered 601 cases for spreading ‘rumours and fake news on social media’, and 299 people have been arrested. The press release didn’t specify the exact period, though.

Facebook: 262 cases

WhatsApp: 219

TikTok: 28

Twitter: 19

Instagram: 5

Misusing audio clips + YouTube videos: 68
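As a quick sanity check on the press release, the per-platform figures above add up exactly to the 601 registered cases. A minimal Python sketch of that tally (the dictionary simply restates the numbers listed above; nothing else is assumed):

```python
# Per-platform case counts from the Maharashtra Cyber Department figures quoted above
cases = {
    "Facebook": 262,
    "WhatsApp": 219,
    "TikTok": 28,
    "Twitter": 19,
    "Instagram": 5,
    "Misusing audio clips + YouTube videos": 68,
}

total = sum(cases.values())
print(f"Total cases: {total}")  # should match the 601 cases in the press release

# Share of all registered cases attributed to each source, largest first
for platform, count in sorted(cases.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{platform}: {count} ({count / total:.1%})")
```

Facebook and WhatsApp together account for 481 of the 601 cases, roughly 80%.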

In Telangana, a man was arrested for ‘spreading fake news’ about the health of the Chief Minister (you’ll recall that this isn’t the first time something like this has happened). The interesting bit is this: the post was made in June, when the individual was in Saudi Arabia, and he was detained when he returned to India (Mumbai).

A journalist in Chhattisgarh was arrested for a ‘provocative’ Facebook post.

Prashant Kanojia was arrested by the UP Police (from Delhi) for a tweet.

In Kerala:

Opposition leader Ramesh Chennithala on Wednesday alleged that all news against the state government are being termed as fake news and media persons are subjected to cyber-attacks. Government is trying to eliminate unfavorable news through media censorship, he added.

Micro-aggression and micro-slander

The Financial Express carried an op-ed by Mohandas Pai and Anuraag Saxena in which they make a data-driven case that narratives are used to malign India. There was just one turn of phrase that “spoke to me”:

micro-aggression legitimises micro-slander.

Aparna Sanjay poses an interesting question in this piece for FeminismIndia - Should Feminists Be Worried About “Fake News” Circulation? *I wrote about Dangerous Speech targeted at women in Edition 11*

Salma Shah writes (non-https link) for TwoCircles about the role of the media in pushing negative stereotypes of Muslims in India.

Micro-aggression legitimises micro-slander and beyond.

Also, in India.

Geeta Seshu has a detailed piece in EPW Engage about the J&K Media Policy. She also makes broader points relevant to the rest of the country.

(T)he media industry would rather sack hundreds of journalists without adhering to basic legal provisions governing retrenchments (due process of law) than put pressure on the government to clear its dues. Also, the media industry has brazenly sought more subsidies from the government to bail it out.

Clearly, across the country, the relationship between the fourth estate and the government is unequal, with the balance tilted heavily in the favour of the government, which engages with the media selectively and only on its own terms. And, with the aggressive reworking of nationalism beyond the Kashmir Valley, there will be no prizes for guessing which tunes the piper is called to play.

I am not sure if this was meant to be a fact-check (if it was, it was an extremely poor one) but the MoHFW decided to call out ThePrint’s reporting regarding a proposal to review the marriage age for women.

Himanshi Dahiya in TheQuint on the tendency to prematurely declare that people have died. Bonus points for quoting a dialogue from The Newsroom.

“It’s a person. A doctor pronounces you’re dead. Not the news” - Don Keefer in ‘The Newsroom’

Who would’ve thought that these words by Thomas Sadoski from an episode of ‘The Newsroom’ aired in 2012 will be more than just relevant for journalists in India in 2020? But here we are, reminding ourselves of the guidelines that must be followed while reporting deaths as Indian media’s reportage on that front continues to be – well…callous.

Pune Police is running an awareness campaign to “break the chain of fake news”.

Plandemic Returns

If ever there was a sequel I didn’t want to see, it was one for Plandemic (didn’t the first one come out just last week or was it last year? What is time anymore? Ok, I’ll stop my meltdown here).

As Jane Lytvynenko puts it, this was a rare occasion when a disinformation project was announced/promoted in advance.

And as Casey Newton points out in his newsletter, apparently that’s the secret to platforms being able to contain its initial spread. Great, let’s just formalise a release schedule for information disorder.

Adi Robertson spells it out: LinkedIn seems to have been the first to act; Facebook prevented users from posting the URL, and Twitter added a warning label.

Of course, I wouldn’t declare any sort of victory over it yet.

You can’t stop this…

(Read the sub-heading as you would MC Hammer’s U Can’t Touch This)

There are some things you just can’t seem to do anything about.

Venkat Ananth on the Facebook Groups situation in India (along with an older thread that was reposted this week):

Remember section 1 of this edition? That was a long time ago, wasn’t it? Anyway, that mask video: Twitter supposedly took it down. Except it took me 1 search (a very obvious one) to find another version. *head meets table*

On Election Day, fake text messages and a video claimed that a candidate had dropped out. Pretty sure this would violate any platform’s version of election integrity rules. The trouble is, can they catch it in time? In this case, the candidate went on to win anyway.

Facebook put fact-checking labels on antivax posts… and got sued for its troubles.

Madness ensued after someone spotted mailboxes lying around in a yard.

Turns out, this was a place that refurbished them. But information ecosystems are so polluted that the worst interpretation was very easy to believe.

And the last entry in this section: The Washington Post ran a massive interstitial ad on its home page targeting Joe Biden. People were angry, but that’s the business model. Alex Stamos had a good point (I can only assume he was waiting to get this out of his system).

Around the world

CodaStory follows up on Egypt targeting female TikTok influencers.

In Belarus, Telegram is being used to organise protests/demonstrations.

“Only Telegram stayed online thanks to an anti-censorship tool developed by the Russian-born founder Pavel Durov on August 10 which allowed users to circumvent the government’s blocking of internet traffic.”

Something positive for a change: AfricaCheck’s 5-7 minute podcast with fact-checks has seen a 215% increase in subscriptions in the first half of this year. I also love the name “What’s Crap on WhatsApp”.

Bellingcat published the 2nd of its 3-part series on Prigozhin. A CNN team in CAR was due to publish a report when:

Prigozhin’s fake-news operation sought to preempt the damaging report. On 11 August, RIA FAN – a St. Petersburg-based “news agency” previously housed in the same building as the Internet Research Agency (“St. Petersburg Troll Factory”), controlled by Prigozhin, and sanctioned by the US over its spreading of disinformation, published a 15-minute video segment called a “special investigation into CNN’s activities in the CAR”. The slickly produced video, an attempted character assassination of the CNN journalists, included many spurious claims about the reporters.

Nina Jankowicz on the growing domestic disinformation threat in Poland and the importance of not neglecting it.

the PiS government has embraced the disinformation playbook, creating a state-sponsored propaganda network and employing inauthentic accounts to influence online political discourse. Across the Atlantic, the United States is fighting a similar battle, one where polarization is exploited and extended by not only foreign actors but domestic politicians as well. Both countries *neglect the homegrown elements at their own peril.*

After a coup in Mali, Euronews reports on the information disorder that was doing the rounds.

In New Zealand: Ximena Smith makes the case for country-wide Media Literacy Campaigns.

The U.S. Senate Intelligence Committee released a ~1000 page report on Russia.

One of the tweets in her thread mentioned a possible link to the GRU.

Big Tech Watch

Facebook published an update on how it handles ‘movements and organisations tied to violence’. This is, not surprisingly but disappointingly, a U.S.-only update. I should add that, presumably, the policy is meant to apply globally, though the actual enforcement may vary for any number of reasons. The measures include:

- Deplatform

- Limit appearance in recommendations

- Reduce ranking in news feed + prominence in search results

- Pause the Related Hashtags feature (yes, the same one that malfunctioned and was not surfacing content in certain cases; some of those happened to be Trump-related, so there was a lot of pushback)

- Prohibit ads and fundraising

They also stated that “any non-state actor or group that qualifies as a dangerous individual or organisation will be banned from our platform.”

Incidentally, this is what the policy page states.

No comment.

Also, Casey Newton has some news. Facebook is looking at getting internal eyes on posts that go beyond a certain virality threshold.

I’m with Pranav on this one.

TikTok announced an information hub that will set the record straight. MediaNama has the details. Oh, and this page is on the domain tiktokus.info - so it isn’t blocked in India.

Study Corner

A report by Avaaz (oddly enough, the URL is blocked on all connections I have access to) estimated that posts with misleading health information managed to get 3.8B views on Facebook. Another throwback to section 1: Content Moderation is hard.

I won’t re-hash the exec summary because there’s already a ton of that doing the rounds. Let me just go into 2 parts I found interesting.

A representation of political affiliations of the sources.

Section 4 - in which it suggests ways to ‘quarantine health misinformation’. Well done on that title.

Provide every user who has ‘seen’ misinformation with the fact-check. Currently this only happens if someone has ‘interacted’ with the post.

Downgrade posts and actors in news feeds. Facebook claims to do some of this already, but the report points out that it isn’t done at the scale required, and there is no transparency regarding thresholds.
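The gap between the first recommendation and current practice is easiest to see as sets: everyone who interacted with a post also saw it, but not the other way round. A minimal sketch with hypothetical user IDs; the data and the `users_to_notify` function are illustrative only, not taken from the Avaaz report or any Facebook API:

```python
# Hypothetical exposure log for one fact-checked post (illustrative data only)
viewed = {"u1", "u2", "u3", "u4", "u5", "u6"}  # users who saw the post in their feed
interacted = {"u2", "u5"}                       # users who liked, shared or commented

def users_to_notify(viewed, interacted, policy):
    """Return the set of users who would receive the fact-check under a given policy."""
    if policy == "interacted":  # current behaviour, as described in the report
        return set(interacted)
    if policy == "seen":        # the report's recommendation
        return viewed | interacted  # interacting implies having seen it
    raise ValueError(f"unknown policy: {policy}")

current = users_to_notify(viewed, interacted, "interacted")
proposed = users_to_notify(viewed, interacted, "seen")
print(f"Current policy reaches {len(current)} users; proposed reaches {len(proposed)}")
print("Missed under current policy:", sorted(proposed - current))
```

In this toy example, the "interacted" rule reaches 2 of the 6 people who actually saw the post.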

Tattle’s Blog has an interesting post on their approach to multimedia content.

A report published by HKS Misinformation Review observed that >80% of the information disorder sharing instances on WhatsApp in India happened after a fact-check had already occurred. This was, of course, restricted to images in their dataset, which relied on public WhatsApp groups.

This suggests that, if WhatsApp flagged the content as false at the time it was fact-checked, it could prevent a large fraction of shares of misinformation from occurring.
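The arithmetic behind that estimate is simple: for each image, count how many of its shares are timestamped after its first fact-check was published. A minimal sketch with made-up timestamps; the data layout and the `share_after_fact_check` function are assumptions for illustration, not the study’s dataset or code:

```python
from datetime import datetime

# Hypothetical data: when each image was first fact-checked...
fact_checked_at = {
    "img_a": datetime(2019, 4, 1),
    "img_b": datetime(2019, 4, 10),
}
# ...and when it was shared in public groups
shares = [
    ("img_a", datetime(2019, 3, 30)),
    ("img_a", datetime(2019, 4, 2)),
    ("img_a", datetime(2019, 4, 5)),
    ("img_b", datetime(2019, 4, 12)),
    ("img_b", datetime(2019, 4, 15)),
]

def share_after_fact_check(shares, fact_checked_at):
    """Fraction of shares (of fact-checked images) that happened after the first fact-check."""
    relevant = [(img, ts) for img, ts in shares if img in fact_checked_at]
    if not relevant:
        return 0.0
    late = sum(1 for img, ts in relevant if ts > fact_checked_at[img])
    return late / len(relevant)

print(f"{share_after_fact_check(shares, fact_checked_at):.0%} of shares came after a fact-check")
```

Shares in the "late" bucket are the ones a label applied at fact-check time could plausibly have reached; the study’s >80% figure is this kind of fraction computed over its real dataset.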