Of Information Operations, Ministries of Truth and Look, Am I your father?

MisDisMal-Information Edition 8

What is this? This newsletter aims to track information disorder largely from an Indian perspective. It will also look at some global campaigns and research.

What this is not? A fact-check newsletter. There are organisations like Altnews, Boomlive etc. who already do some great work. It may feature some of their fact-checks periodically.

Welcome to Edition #8 of MisDisMal-Information.

Information Operations #LadakhBorder and #WorldWar3

The New Indian Express ran a rather curious story claiming that 'hundreds of fake Twitter accounts from Pakistan' were spreading lies.

They have since updated the headline to read Ladakh standoff: Hundreds of fake Twitter accounts from Pakistan spread lies in Chinese garb.

It cited some research from Technisanct and quoted the CEO as saying

"As Chinese-Indian tensions started rising, we observed a huge growth in retweeting of pro-Chinese tweets. We identified multiple Pakistan operated handles that started to change their names and translate tweets into Chinese. Most of the accounts have a Pakistani flag and Chinese flag in their handles and a bio to create a feeling that Pakistan is highly backed by China,"

I am not entirely sure what this means, and I wasn't able to find a full report on the company's website. They do have sections for Case Studies and Press Releases, but neither mentioned this.

The Technisanct team used a platform called Twint and a trends map to gather information related to the discussion that happened in the aftermath of the Ladakh issue. After observing these Twitter accounts, its followers and past tweets, it was learnt that these are operated to propagate pro-Pakistan narratives. This is a strong organised activity working in a structured format creating Twitter groups and appointing administrators who get instructions from top-level individuals, he said.

Now, let's be clear, such events are opportune times for adversarial actors to run information operations. And this kind of impersonation is very common. We've seen the tactic deployed domestically as well as by foreign actors. What concerned me about the article was a lack of clarity around certain elements:

1) Is there a detailed/full report? I really think publications should start linking to full reports or insist on more clarity before running them.

2) How were 'fake' accounts classified?

3) How did they conclude that it was organised activity? Is the 'structured format' the only signal that was used to determine this?

4) Apart from impersonation, what other false information were they spreading? There were 1-2 anecdotal examples, but nothing else.

5) Is pro-Pakistan narrative the same as anti-India narrative? Depending on the context, that may or may not be the case. My point is that it just isn't clear.

This is an emotive subject and I am coming at this from an academic perspective. Many questions around 'coordinated' activity and 'fake' accounts are still open, so these terms shouldn't be bandied about loosely. And as Thomas Rid points out in Active Measures, overstating the impact of such operations is dangerous as well.

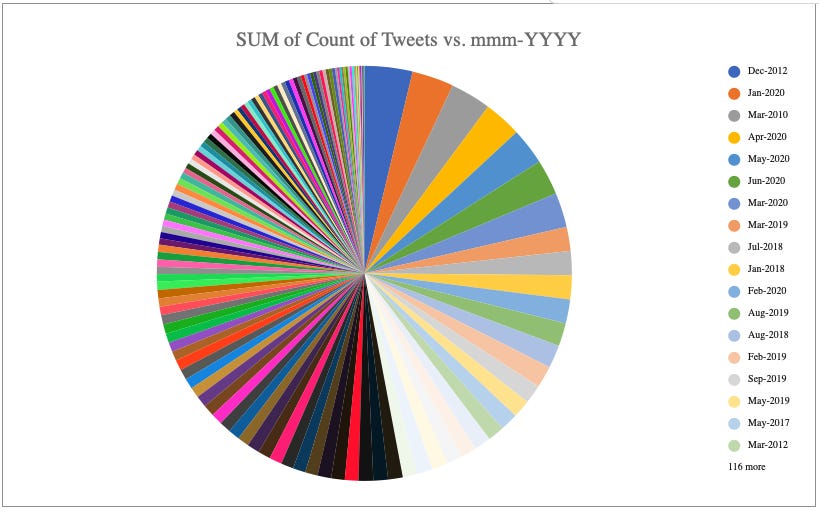

Anyway, I did pull tweets related to both these hashtags at around 12 PM on 20th June. Starting 18th June, there were a total of ~23K tweets across both hashtags (~17.5K on #LadakhBorder and ~5.5K on #WorldWar3). I ran the pull operation twice to double-check that this was all there was and, as it turns out, it was. This is certainly not a high number.

I also looked at a subset which came from handles that explicitly set the location as Pakistan (~1200 tweets). Some statistics:

Number of users who tweeted:

Full Dataset - ~18000

Subset - ~780

Total likes (as reported by Twitter's API):

Full Dataset - ~52000

Subset - ~3200
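For anyone who wants to reproduce this kind of summary on their own pull, here is a minimal sketch in Python. It is not the exact process used for the numbers above. It assumes each tweet is a dictionary, and the field names `username`, `likes` and `user_location` are hypothetical, so rename them to match whatever your collection tool exports.

```python
def summarise(tweets):
    """Count tweets, distinct users and total likes in a list of tweet dicts."""
    # Usernames are lower-cased so that 'News_A' and 'news_a' count as one user.
    users = {t["username"].lower() for t in tweets}
    likes = sum(t.get("likes", 0) for t in tweets)
    return {"tweets": len(tweets), "users": len(users), "likes": likes}


def location_subset(tweets, needle="pakistan"):
    """Keep tweets whose self-declared profile location mentions `needle`."""
    # Assumption: location is free text set by the user and may be missing.
    return [
        t for t in tweets
        if needle in (t.get("user_location") or "").lower()
    ]


# Tiny made-up sample, purely for illustration (not real data).
sample = [
    {"username": "news_a", "likes": 10, "user_location": "Lahore, Pakistan"},
    {"username": "News_A", "likes": 5, "user_location": "Lahore, Pakistan"},
    {"username": "user_b", "likes": 0, "user_location": "Delhi"},
    {"username": "user_c", "likes": 2, "user_location": None},
]

full_stats = summarise(sample)
subset_stats = summarise(location_subset(sample))
print(full_stats)
print(subset_stats)
```

Keep in mind that a self-declared location is a weak signal: anyone can type anything there, so a subset built this way says where accounts claim to be, not where they are operated from.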

Charts: Full Dataset; Subset; Full Dataset (count of tweets on the right axis); Subset (count of tweets on the right axis)

If you’ve been following similar analysis in this newsletter, there isn’t much that stands out in any of the 4 charts so far.

The only thing that struck me was that the accounts with the highest number of tweets in both datasets matched. When I looked into them, they both appeared to be handles that share news, nothing more. Since they are not related to an individual, I can include twitonomy links for both of them.

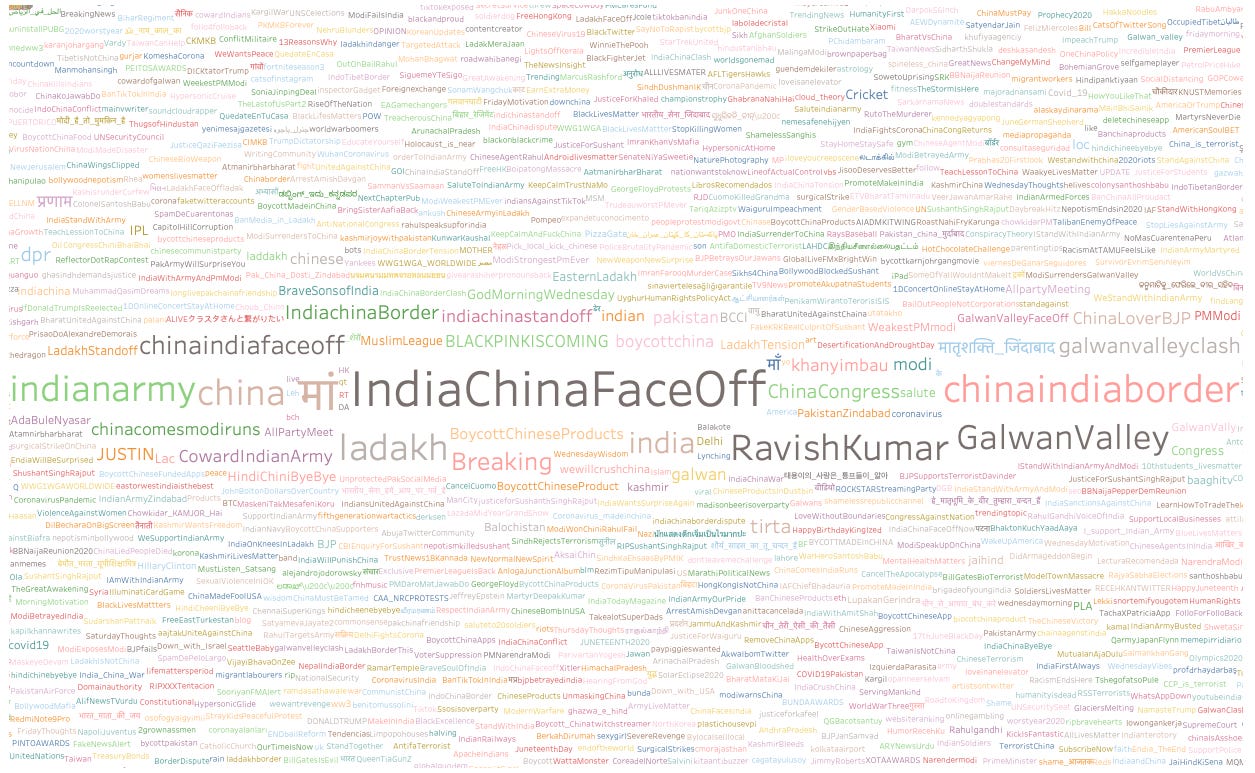

I also looked at the Tag Clouds (excluding #LadakhBorder and #WorldWar3).

It was unsurprising to see some tags disparaging the forces, especially in the subset.

Full Dataset (link to visualisation)

Subset (link to visualisation)
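The counts behind a tag cloud like these are simple to compute. Below is a rough sketch, assuming you have the raw tweet text as a list of strings. The regular expression is a simplification of how Twitter actually parses hashtags, and the function and variable names are my own for illustration.

```python
import re
from collections import Counter

# Simplified hashtag pattern: '#' followed by letters, digits or underscores.
HASHTAG_RE = re.compile(r"#(\w+)")

# The two hashtags used to collect the data are excluded, since every
# tweet contains at least one of them and they would swamp the cloud.
EXCLUDED = {"ladakhborder", "worldwar3"}


def tag_counts(texts, excluded=EXCLUDED):
    """Count hashtags across tweet texts, case-insensitively."""
    counts = Counter()
    for text in texts:
        for tag in HASHTAG_RE.findall(text):
            tag = tag.lower()
            if tag not in excluded:
                counts[tag] += 1
    return counts


# Made-up sample texts, purely for illustration.
sample_texts = [
    "Tensions rising #LadakhBorder #India",
    "#WorldWar3 trending again #india #China",
    "No tags here",
]

top_tags = tag_counts(sample_texts).most_common(2)
print(top_tags)
```

Running the same function over the full dataset and over the subset separately gives the two sets of counts to compare.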

Meanwhile, AFP Fact Check is reporting more disinformation on the India-China front. You can find all their stories tagged India here.

There were many instances of fake accounts impersonating various world leaders and tweeting their support for India. Divya Chandra has a good round-up on TheQuint.

Donald Trump

Melania Trump

Benjamin Netanyahu

Shinzo Abe

Angela Merkel

Carrie Lam

What's disturbing is that most of these were easy to pick up with some basic verification (also detailed in the article). Nevertheless, many of them were amplified by accounts with a large number of followers, who turned themselves into super-spreaders (if they weren't already) of information disorder.

This also leads me to a larger point about conduct on social media. By virtue of being on global platforms (with the exception of possibly Sharechat) - Indian conversations on the issue are on display for all to see. There is some level-headed conversation but there is also a lot of bluster.

China, on the other hand, is in a position where domestic activity (and by extension any domestic posturing) happens on a completely different set of social media platforms. You could certainly make the case that with multilingual usage, some of that applies to India as well. And while that is true to an extent, there is enough discourse in languages like English for people to consider it representative (whether that is true or not). Many Indian media outlets have also taken a jingoistic position, which is reflected in their social media activity as well. Eg.

External-facing Chinese media outlets seem to have adopted a different approach. They're largely sticking to the 'official story' with the odd passive-aggressive jab and a dash of positive spin. For example, the Indian restaurant story came out a day before a union minister in India called for banning Chinese food. Is our response that predictable?

We haven't seen the no-holds-barred wolf warrior diplomacy take aim at India just yet, but that doesn't mean it won't. News18 published a story about 'Chinese misinformation taking aim' at India. But at this point, there are only a handful of accounts making the rounds. I had speculated back in April about whether China would respond to the 'ChineseVirus19' activity on Twitter in India.

And PTI reported that Indian Embassy posts on Sina Weibo (China's Twitter equivalent) and WeChat were removed. (It isn't clear to me whether the Indian Embassy account on WeChat is listed as a China-based account or not. That doesn't justify censorship but it is important context nevertheless).

Meanwhile, on the domestic front, plenty of name-calling has started. Rahul Gandhi attempted to rename the Prime Minister 'Surender Modi', and then OpIndia attempted to find a hidden meaning behind why Rahul Gandhi's website is accessible in China but Narendra Modi's isn't. FWIW, BJP's and Amit Shah's websites are both accessible as per the same tool, which should tell you that such exercises tell you nothing. And just this morning, Dr. Manmohan Singh released a press statement which, among other things, stated that 'disinformation is no substitute for diplomacy or decisive leadership'.

Either way, between domestic and international narrative shaping, there is going to be a lot of activity on this front in the coming weeks.

Alt TikTok and K-pop Stan Twitter v/s The Trump Campaign

From a story that NYT ran:

TikTok users and fans of Korean pop music groups claimed to have registered potentially hundreds of thousands of tickets for Mr. Trump’s campaign rally as a prank. After the Trump campaign’s official account @TeamTrump posted a tweet asking supporters to register for free tickets using their phones on June 11, K-pop fan accounts began sharing the information with followers, encouraging them to register for the rally — and then not show.

Now, the rally did ultimately have low attendance, and there could be any number of reasons for that. And many are taking joy in the fact that the Trump campaign was left red-faced after claiming to have a record number of registrations.

But, as many users on Twitter have pointed out, there were no limits on how many people could register. And, for believers of alternative facts, a 'record' number of registrations is good enough.

This coordination by the 'Zoomers' was praised. You could almost hear 'the kids are alright' playing in the background.

Now, 24-36 hours later, there are other narratives emerging.

There are legitimate questions being raised about what defines "good" coordinated activity and "bad" coordinated activity. You know what happens when the 'other side' says "The villainy you teach me I will execute, and it shall go hard but I will better the instruction".

See these threads by Zeynep Tufekci and Evelyn Douek.

The other question that arises is how this ties in with the various Election Integrity policies that platforms have. Is an attempt to deny others participation in a political rally running afoul of platform policies as they are worded today? Will they not be pressured to make some changes? What does that mean for social media discourse in the medium to long term? Were all the users who registered from the US? If not, whose responsibility was it to ensure that people outside the US could not register: the Trump campaign? Platforms?

And obviously, there are attempts to spin this as something sinister because, you know, K-pop stan = Korean and TikTok = Chinese.

Hey Prateek - You said this newsletter was looking at the Indian Perspective, so why are you throwing random words at me?

Great question. I'll come to that. This debate between good coordinated activity and bad coordinated activity is far from settled. Heck, it hasn't been settled for almost a decade. See this nearly 10-year-old post on 'ddos as a form of civil disobedience'. And social media platforms/instant messengers are going to be forced to deal with this in some form or the other. We already have a lot of 'coordinated' activity that takes place across platforms in India. Subjectively, one can say that some groups are far more coordinated than others. And the only way ahead seems to be more coordination, not less. Eventually, we are going to ask the state/platforms/both to address this. At that point, it will likely be a calculus of whom we trust less. I'll stop short of calling it a non-choice, but it is certainly a bad decision to have to make. Also see this essay on Content Cartels by Evelyn Douek.

Literally, the ministry of truth

I&B Minister Prakash Javadekar announced that various 'fact check' units are being set up in states to fight 'fake news':

“With people having the right to get ‘right information’, the pattern of ‘fake news in the media’ would not be tolerated,” he added. The PIB’s fact-checking unit is mandated to identify ‘fake’ news so that it is refrained from ‘being spread’.

And in Assam, the government wants to take action against web portals and users spreading 'fake news'.

“It has come to the notice of the government that most of the times some news portals have been involved in disseminating fake, false and fabricated news,” an official release stated. “There is a huge possibility of these having an adverse impact on the upcoming generation,” it added.

Rights and Risks Analysis Group released a report saying 55 journalists have been booked/arrested for COVID-19 related coverage.

With 11 cases, Uttar Pradesh leads the list of states with maximum number of attacks on media persons, followed by six in Jammu and Kashmir, five in Himachal Pradesh, four each in Tamil Nadu, West Bengal, Odisha and Maharashtra, two each in Punjab, Delhi, Madhya Pradesh and Kerala, and one each in Andaman & Nicobar Islands, Arunachal Pradesh, Assam, Bihar, Chhattisgarh, Gujarat, Karnataka, Nagaland and Telangana.

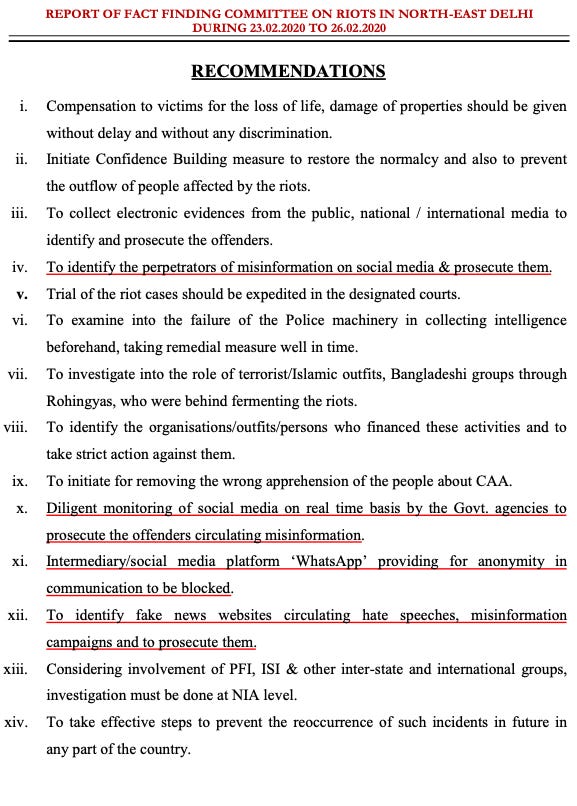

AltNews ran an extensive fact-check on a report that was submitted by an NGO named "Call for Justice". The report itself is quite a read. From the recommendations page, I have underlined the ones related to information disorder and social media. That's right: it recommends 'real time' monitoring and blocking services that provide anonymity.

Information Operations

Stanford Internet Observatory published a post on Twitter's June 2020 takedowns, involving accounts from China, Russia and Turkey.

Graphika released a report on a Russian information operation, Secondary Infektion:

The operation ran for 6 years, involved 2500 pieces of content across "300 platforms and web forums, from social media giants such as Facebook, Twitter, YouTube, and Reddit to niche discussion forums in Pakistan and Australia, providing an unprecedented view of the operation’s breadth and depth".

Content was posted in 7 languages.

Many accounts were abandoned after 1 post. Blogging forums were used more extensively than the likes of Reddit, Medium, Twitter, Quora, Facebook and YouTube.

Unsurprisingly, it targeted countries across Europe and North America.

Australia accused China of information operations as well as cyber attacks. China denied these claims:

At a press briefing in Beijing on Wednesday, the Chinese government spokesman Zhao Lijian accused Australian officials of undermining international efforts to combat the virus. “Australian officials have described the facts of discrimination and violence within their country as disinformation – but what about the feelings of those victims?” Zhao said.

Australia is also considering a task force to counter information operations under its Department of Foreign Affairs and Trade.

India and 12 other countries have started an initiative at the United Nations aimed at spreading fact-based content to counter misinformation on the coronavirus.

Rounding Up

Vivek Agnihotri is starting his own fact-checking show. No comments.

Quint on why Rohingya refugees are an easy target for information disorder. Remember the fact-finding report earlier in this edition.

An experimental Twitter account that duplicates Donald Trump's tweets has been suspended twice already. A similar experiment is running on Facebook as well, but I don’t have links.

LiveLaw has run 2 interesting essays on misinformation and paid news during elections that are worth reading.

Look, Am I Your father?

Something light to end this edition with -

Sunday was Father's Day, and there was some odd activity: assigning the title 'Father of the Nation' to current heads of state.

Of course, there's plenty of sarcasm too now if you actually run a search, but lucky for you I grabbed a few screenshots on Sunday. In India, it looked like it was driven by party loyalists trying to get some attention. As far as I can tell, no one took the bait (other than me, probably).