What is this? The Information Ecologist (About page) will try to explore the dynamics of the Information Ecosystem (mainly) from the perspective of Technology Policy in India (but not always). It will look at topics and themes such as misinformation, disinformation, performative politics, professional incentives, speech and more.

Disclaimer: This is usually a space where I write about nascent/work-in-progress thoughts, or raise questions that I think we should (also) be looking to answer.

Disclaimer 2: This is not a systematic, professional activity. On the contrary, if you look at my process, you will see how utterly un-systematic it is. Thanks!

Welcome to/(back.. from the dead?) The Information Ecologist 65

Yes, I’ve addressed this image on the new about page.

In this edition:

Information Ecology: Copycats, Copyr(A)Ights and unfair use:

NYTimes lawsuit against OpenAI, and questions of reproduction, copyright, fair use and how they confuse me.

Let’s get poli-tech-al: Panic! at the elections

So many elections, so many deepfakes: are we asking the right questions?

Warning: I’m borrowing the parallel narratives style of storytelling here. If you don’t like that, read the 1s first, and then the 2s.

1 -

Information Ecology: Copycats, Copyr(A)Ights and unfair use

NYTimes v/s OpenAI

Last week, the New York Times reported that …er… the New York Times was suing OpenAI and Microsoft for use of its copyrighted work [Michael M. Grynbaum, Ryan Mac - NYTimes (potential paywall)]. As per the article:

The Times is the first major American media organization to sue the companies, the creators of ChatGPT and other popular A.I. platforms, over copyright issues associated with its written works. The lawsuit, filed in Federal District Court in Manhattan, contends that millions of articles published by The Times were used to train automated chatbots that now compete with the news outlet as a source of reliable information.

The suit does not include an exact monetary demand. But it says the defendants should be held responsible for “billions of dollars in statutory and actual damages” related to the “unlawful copying and use of The Times’s uniquely valuable works.” It also calls for the companies to destroy any chatbot models and training data that use copyrighted material from The Times.

One part that got my attention was the claim about reproduction (and then not including source links):

The complaint cites several examples when a chatbot provided users with near-verbatim excerpts from Times articles that would otherwise require a paid subscription to view. It asserts that OpenAI and Microsoft placed particular emphasis on the use of Times journalism in training their A.I. programs because of the perceived reliability and accuracy of the material.

Now, if you’ve been around here (this Substack) before, then you might know that I have never had much sympathy for ‘media bargaining’-type arrangements (how’s that going, Canada?). But this (how generative AI systems acquire and use data for training; how they use that information; make things up along the way; sometimes ‘reproduce’; too many issues to list…) is, admittedly, not quite the same thing, though I’ve often struggled to explain to myself why I feel this way. I thought Benedict Evans put it quite well in his January 2, 2024 newsletter, highlighting the difference in scale (we’ll come back to this):

Meanwhile, the stock responses from AI people, that LLMs do not contain the training data, only patterns derived for it; that they’re not databases of facts; and that they don't need newspaper data in particular (which is a tiny fraction of a percent of the training corpus) are all true but insufficient. You’re still building what you claim will be a trillion dollar business on other people’s work. Equally, saying that ‘people learn like this too’ might perhaps be true in principle, but a difference in scale can be of itself be a difference in principle.

Specifically on reproduction, it was interesting to read what Mike Masnick over at Techdirt said about the prompts used to get those ‘reproduced outputs’. (I’ll add that it is unclear to me whether this applies to all the examples the NYTimes included, but it is still important, because a lot of the news coverage I read did not go into detail about the prompts and the role they could have played.) Quoting some parts in full [Techdirt]:

Now, the one element that _appears_ different in the Times’ lawsuit is that it has a bunch of exhibits that purport to prove how GPT regurgitates Times articles. Exhibit J is getting plenty of attention here, as the NY Times demonstrates how it was able to prompt ChatGPT in such a manner that it basically provided them with direct copies of NY Times articles.

At first glance that might look damning. But it’s a lot less damning when you look at the actual prompt in Exhibit J and realize what happened, and how generative AI actually works.

…

What the Times did is prompt GPT-4 by (1) giving it the URL of the story and then (2) “prompting” it by giving it the headline of the article and the first seven and a half paragraphs of the article, and asking it to continue.

…

If you actually understand how these systems work, the output looking very similar to the original NY Times piece is not so surprising. When you prompt a generative AI system like GPT, you’re giving it a bunch of parameters, which act as conditions and limits on its output. From those constraints, it’s trying to generate the most likely next part of the response. But, by providing it paragraphs upon paragraphs of these articles, the NY Times has effectively constrained GPT to the point that the most probabilistic responses is… very close to the NY Times’ original story.

In other words, by constraining GPT to effectively “recreate this article,” GPT has a very small data set to work off of, meaning that the highest likelihood outcome is going to sound remarkably like the original.

The gist is that the generative AI system was pretty much ‘constrained’ into churning out a near replica, because of the way it works and the nature of the prompts pointing it to the source content. I recommend reading the article in full; it makes some points I hadn’t even considered, such as how the NYTimes’ use of copyright in this manner might be opening itself up to copyright claims.
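To make the ‘constraint’ point a little more concrete, here is a toy sketch in Python. It is a hypothetical back-off n-gram model (it predicts the most frequent next word after the longest matching run of up to four preceding words), not how GPT-4 actually works, and the ‘articles’ are made up. Unlike an LLM, this toy literally stores word counts from its training text, so it overstates memorisation; the only thing it illustrates is that the more of one source you put into the prompt, the more the ‘most likely’ continuation becomes that source. The names `train`, `generate`, `ARTICLE` and `OTHERS` are all illustrative.

```python
from collections import Counter, defaultdict

MAX_ORDER = 4  # longest run of preceding words this toy model conditions on

def train(documents, max_order=MAX_ORDER):
    """Count which word follows each run of 1..max_order words, per document."""
    counts = defaultdict(Counter)
    for doc in documents:
        words = doc.split()
        for i in range(1, len(words)):
            for k in range(1, min(max_order, i) + 1):
                counts[tuple(words[i - k:i])][words[i]] += 1
    return counts

def generate(counts, prompt, steps=30, max_order=MAX_ORDER):
    """Greedily append the most frequent next word, backing off to shorter contexts."""
    out = prompt.split()
    for _ in range(steps):
        for k in range(min(max_order, len(out)), 0, -1):
            context = tuple(out[-k:])
            if context in counts:
                out.append(counts[context].most_common(1)[0][0])
                break
        else:  # no known context at any length, so stop generating
            break
    return " ".join(out)

# Made-up "training data": one target article plus two other stories.
ARTICLE = "the council voted on tuesday to fund the new library after months of debate"
OTHERS = [
    "the council voted on friday to delay the decision",
    "the council voted on friday to delay the decision again",
]
model = train([ARTICLE] + OTHERS)

# A short, generic prompt drifts toward the more common pattern:
print(generate(model, "the council voted on"))
# -> the council voted on friday to delay the decision again

# A prompt that already contains the article's opening words reproduces it:
print(generate(model, "the council voted on tuesday"))
# -> the council voted on tuesday to fund the new library after months of debate
```

Nothing in the second call ‘looks up’ the article by name; the prompt simply leaves only one likely path.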

Which brings me to my next point of confusion: is copyright the correct instrument here? This is something I am very unsure about, and frankly a little uncomfortable with. I don’t know, maybe I followed TorrentFreak for too many years 🤷♂️. Anyway, back in August 2023, Benedict Evans wrote about Generative AI and Intellectual Property [ben-evans.com]. In general terms, he used analogies of mimicry and art to make points about reproduction and substitutability.

If I ask Midjourney for an image in the style of a particular artist, some people consider this obvious and outright theft, but if you chat to the specialists at Christie’s or Sotheby’s, or wander the galleries of lower Manhattan or Mayfair, most people there will not only disagree but be perplexed by the premise - if you make an image ‘in the style of’ Cindy Sherman, you haven't stolen from her and no-one who values Cindy Sherman will consider your work a substitute.

In the context of news, he likens ChatGPT to having ‘infinite interns’:

I think most people understand that if I post a link to a news story on my Facebook feed and tell my friends to read it, it’s absurd for the newspaper to demand payment for this. A newspaper, indeed, doesn’t pay a restaurant a percentage when it writes a review. If I can ask ChatGPT to read ten newspaper websites and give me a summary of today’s headlines, or explain a big story to me, then suddenly the newspapers’ complaint becomes a lot more reasonable - now the tech company really is ‘using the news’ …

But just as <refers to analogy that I have not mentioned> ChatGPT would not be reproducing the content itself, and indeed I could ask an intern to read the papers for me and give a summary (I often describe AI as giving you infinite interns). That might be breaking the self-declared terms of service, but summaries (as opposed to extracts) are not generally considered to be covered by copyright.

That doesn’t mean there is no problem (recall the quote from his newsletter), because a step-change in scale is itself a change (told you we’d come back to this). The surveillance analogy really resonated with this digital rights person (me):

Rather, one way to think about this might be that AI makes practical at a massive scale things that were previously only possible on a small scale. This might be the difference between the police carrying wanted pictures in their pockets and the police putting face recognition cameras on every street corner - a difference in scale can be a difference in principle. What outcomes do we want? What do we want the law to be? What can it be? The law can change.

There’s another aspect that complicates this, as he asserts - the training data isn’t the model itself (some ‘stochastic parroting’ does take place, remember). Generative AI models don’t copy/pirate in the way ‘we normally use that word,’ says Evans:

But the training data is not the model. LLMs are not databases. They deduce or infer patterns in language by seeing vast quantities of text created by people - we write things that contain logic and structure, and LLMs look at that and infer patterns from it, but they don’t keep it. ChatGPT might have looked at a thousand stories from the New York Times, but it hasn’t kept them.

He then uses a fascinating analogy from Tim O’Reilly (and one from the data-oil series that makes sense) to make the point that if you want to block/obstruct OpenAI through individual action, it may not be effective. Aggregate action, though…:

Tim O’Reilly’s great phrase, data isn’t oil; data is sand. It’s only valuable in the aggregate of billions, and your novel or song or article is just one grain of dust in the Great Pyramid.

…

On the other hand, it doesn’t need your book or website in particular and doesn’t care what you in particular wrote about, but it does need ‘all’ the books and ‘all’ the websites. It would work if one company removed its content, but not if everyone did.

Great, so now I am confused about both reproduction and copyright. But it doesn’t end there. Intuitively, one is tempted to think that such use isn’t ‘fair’, especially if you also consider some of the NYTimes’ other claims about lack of attribution/no links, and even incorrect attribution/hallucination. Even with my lack of understanding/knowledge of copyright, intellectual property law, and ‘fair use’ jurisprudence, you can guess where this is going.

Ben Thompson [Stratechery] (potential paywall) cites the Authors Guild v/s Google case, where the decision (in Google’s favour) describes a four-part balancing test.

This summary invokes the four part balancing test for fair use; from the Stanford Library:

The only way to get a definitive answer on whether a particular use is a fair use is to have it resolved in federal court. Judges use four factors to resolve fair use disputes, as discussed in detail below. It’s important to understand that these factors are only guidelines that courts are free to adapt to particular situations on a case‑by‑case basis. In other words, a judge has a great deal of freedom when making a fair use determination, so the outcome in any given case can be hard to predict.

The four factors judges consider are:

The purpose and character of your use

The nature of the copyrighted work

The amount and substantiality of the portion taken, and

The effect of the use upon the potential market.

In my not-a-lawyer estimation, LLMs are clearly transformative (purpose and character); the nature of the New York Times’ work also works in OpenAI’s favor, as there is generally more allowance given to disseminating factual information than to fiction.

Now, it seems OpenAI has published a post in response (I haven’t read it yet, so I won’t offer an opinion on it), but at least one analysis (a Twitter thread by Cecilia Ziniti) suggests the post might be off the mark, despite OpenAI having a good ‘fair use’ case.

Alright, I’ve gone into why this case seemed different (to me, anyway). But I would be remiss if I didn’t mention some throughlines from publishers v/s social media + Google (maybe this is season 2 of that show?).

Meghna Bal lists 3 [The Secretariat] (I’m paraphrasing, read the original for her complete arguments):

NYT has not innovated technologically and is looking for a payout;

OpenAI has also, to some extent, benefitted from the work of news publishers - who themselves were slow to act and protect their interests;

While NYT is portraying itself as an institution serving public interest and casting OpenAI as a purveyor of low quality information, its ‘moral high ground’ is not firm since it is a profit-seeking enterprise.

We’re in the early days of this…

Let’s get Poli-tech-al: Panic! at the elections

Elections, deepfakes and amendments

— Somewhere between a commentary and rant on technology policy developments in India. This section is a work of fiction (not news/current affairs) because it assumes a reality where the executive troubles itself with things like accountability, transparency, due-process, etc.

You know those TV shows that did parallel timelines until that idea was run into the ground? Well, good, because this section runs parallel narratives with intersecting timelines (Hi, Tenet and Primer).

1 - In case you haven’t heard, 2024 is some kind of mega-election year with 80+ elections [Tiffany Hsu, Stuart A. Thompson, Steven Lee Myers - NYTimes]. And if that number doesn’t seem big and scary enough, here’s a nice graphic that almost certainly will (What is up with June, seriously?).

2 - In 64 - All I want for the New Year, I wrote about one of the greatest “will they/won’t they”s since Rahul / Anjali, Ross / Rachel, and Ted / Robin: will India’s Ministry of Electronics and Information Technology (MeitY) issue advisories or rules? We had left it at an “advisory” with a “side of threats” about deepfakes and synthetic content (Ok fine, it is not great at all). Well, some reporting from last week suggests that there will be more developments on that front [Ashish Aryan - Economic Times], [Aditi Agrawal - Hindustan Times]. It appears that MeitY is considering amending the IT Rules to define deepfakes and jam them into the vague, arbitrary proactive monitoring obligations which were themselves jammed into the IT Rules in October 2022.

1 - Until 2022, we were busy worrying about ‘disinformation’ in elections. Since 2023, we have been worrying about deepfake-powered disinformation in elections. See some opinion pieces which highlight the risks rather well (I have some quibbles with the framing, but that’s for another day):

Look, as I said in 64: Symptoms, diseases and bad medicines we don’t need, the underlying conditions are factors we cannot and should not ignore. One thing to consider: how significant, in this context, is the step-change aspect of generative AI that Benedict Evans referred to (see previous section)? Is it significant enough to hand (even) more discretion to the executive branch? Or to condone the use of poorly designed institutions that may act subjectively and selectively? I have strong reservations about this, very strong reservations. Again, there is a supply side and a demand side to this equation, and political actors loom large over the supply-side story. That’s where I think Rasmus Kleis Nielsen is so right in drawing our attention to the politicians [Financial Times] (potential paywall):

As a social scientist working on political communication, I have spent years in these debates — which continue to be remarkably disconnected from what we know from research. Academic findings repeatedly underline the actual impact of politics, while policy documents focus persistently on the possible impact of new technologies.

…

Of course there will be examples of AI-generated misinformation, bots, and deepfakes during various elections next year. But the key question is how politicians will be using these tools. A pro-Ron DeSantis political action committee has already used an AI version of Trump’s voice in a campaign ad. This is not some unnamed “malicious actor”, but a team working on behalf of the governor of a state with a population larger than all but five EU member states. We have seen examples of similar activity in elections in Argentina and New Zealand too.

In the Indian context, read this piece about the use of generative AI in the Telangana assembly elections [Karan Saini - Decode]. FWIW, as Saini points out, some of the more well-known models try to enforce some controls over what can be generated (this form of, er, ‘content moderation’, is obviously going to be porous), but there are ‘open source’ models (that still need some know-how) which can be used too.

While trying to verify the effectiveness of Image Creator’s safeguards, Decode discovered a prompt bypass that successfully generated images of Indian Prime Minister Narendra Modi.

Though Image Creator refused to generate images with the prompt “PMO India” or “Narendra Modi,” submitting the prompt “a respectful meeting between two influential leaders of India” consistently resulted in images being generated of Prime Minister Narendra Modi meeting with seemingly fictitious individuals.
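Why is this kind of ‘content moderation’ so porous? A deliberately naive sketch helps. Image Creator’s actual safeguards are not public and are very likely more sophisticated than this, so the blocklist below (`BLOCKED_TERMS` and `is_blocked` are hypothetical names) is purely an assumption: a filter that refuses prompts containing named terms, which a paraphrase that never names anyone walks straight past.

```python
import re

# Hypothetical blocklist for illustration only; real safeguards are not public.
BLOCKED_TERMS = ("narendra modi", "pmo india")

def is_blocked(prompt: str) -> bool:
    """Refuse a prompt if, after lower-casing and collapsing whitespace,
    it contains any blocked term."""
    normalised = re.sub(r"\s+", " ", prompt.lower()).strip()
    return any(term in normalised for term in BLOCKED_TERMS)

prompts = [
    "Narendra Modi",
    "PMO India",
    "a respectful meeting between two influential leaders of India",
]
for p in prompts:
    print("refused" if is_blocked(p) else "allowed", "|", p)
```

The first two prompts are refused and the third is allowed, even though, per Decode, it still produced images of the Prime Minister. Any filter that inspects the wording of a prompt has to guess at intent, and the person writing the prompt gets unlimited attempts.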

This is an adversarial space, and we’re not going to fix all of this, but you probably want to focus on the largest threat in the electoral context: political actors. Neither technology nor regulation can fix what political actors and sections of society insist on breaking.

No/little supply from political actors, no/little problem (What? I said this section is fictional). You can’t fault me for having high, high hopes (see what I did there?)

2 - There was a curious incident of the Bard in the day time on November 16th, 2023. A Twitter user (with over 100K followers) complained that Bard refused to summarise an article by OpIndia, on the grounds that it was a ‘website that has been repeatedly criticised for publishing false and misleading information’ (where is the lie?). But that wasn’t the curious bit. The curious bit was when a Union Minister quoted the tweet, saying something inscrutable about bias being a violation of platforms’ obligations under 3(1)(b). I’ve posted an image of 3(1)(b), and it has no direct reference to bias.

The IT Rules [meity.gov.in] do contain one reference to the term ‘bias’ in 4(4), but I am not sure that applies here. I am no lawyer, and I could be wrong, since I am relying on what some call a ‘plain reading’, so if you know which part under 3(1)(b) applies to bias, help a fellow human out?

And here’s a quote (unattributed, of course) from the Economic Times article I mentioned earlier:

The government is likely to amend the Information Technology (IT) Rules of 2021 and introduce rules for regulating artificial intelligence (AI) companies and generative AI models, according to people with knowledge of the matter.

The amendments are likely to mandate that platforms which use artificially intelligent algorithms or language models to train their machines are free from “bias” of any kind, they said.

Anyway, whatever form these amendments take, or whatever policy documents say, their stated objectives can be very different from intended outcomes.

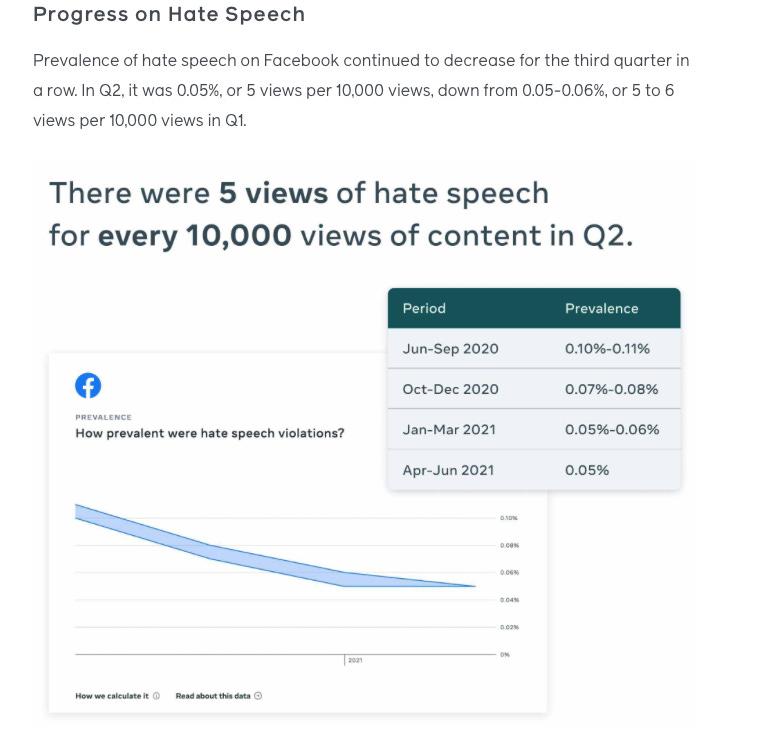

1 - The thing about disinformation, deepfakes specifically, and ‘harmful content’ [I have never been to a meeting attended by more than 1 person where everyone agreed on what this meant] is that it is easy enough to find egregious examples, but very hard to truly contextualise them (in terms of ALL ‘content’ everywhere). For example, until some point in 2021, Facebook liked to tell us that the prevalence of hate speech on its platform was 0.05% (I wasn’t able to find references to this metric from 2022/3).

That’s a nice, low-sounding number, but it doesn’t actually tell you anything meaningful if you’re trying to understand where, and in what contexts, people actually engage in it. And since, as far as I know, we have not historically quantified hate speech in this way, I can’t even be sure whether, at an aggregate level, this number is high, low or par for the course. I don’t know (I get that the ideal number is 0, but even this section is not that fictitious). But one thing this metric does do is give you a ‘denominator’ (not literally, but you can estimate one) in a conversation that’s often only about numerators (reductive version: I saw 50 pieces of content I did not like on this platform, therefore all content on this platform must be content I do not like). Ethan Zuckerman wrote about this in 2021 (also, with a non-reductive version of the numerator situation) [TheConversation]:

A widely publicized study from the anti-hate speech advocacy group Center for Countering Digital Hate titled Pandemic Profiteers showed that of 30 anti-vaccine Facebook groups examined, 12 anti-vaccine celebrities were responsible for 70% of the content circulated in these groups, and the three most prominent were responsible for nearly half. But again, it’s critical to ask about denominators: How many anti-vaccine groups are hosted on Facebook? And what percent of Facebook users encounter the sort of information shared in these groups?

Without information about denominators and distribution, the study reveals something interesting about these 30 anti-vaccine Facebook groups, but nothing about medical misinformation on Facebook as a whole.

Of course, platforms have historically been unhelpful on this front, and will likely continue to be that way. As Zuckerman says, in the same post:

Trying to understand misinformation by counting it, without considering denominators or distribution, is what happens when good intentions collide with poor tools. No social media platform makes it possible for researchers to accurately calculate how prominent a particular piece of content is across its platform.

But it is also incumbent on us to remember that such denominators exist, and to try not to have a numerators-only conversation about whatever ‘harm’ we are discussing, whether ‘emergent’ or ‘passé’.
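A back-of-the-envelope sketch of why the denominator matters. It assumes prevalence is measured as a share of content views (my reading of how Facebook framed its 0.05% figure, so treat that as an assumption), and every count below is made up. `prevalence` is a hypothetical helper, not any platform’s actual methodology.

```python
def prevalence(violating_views: int, total_views: int) -> float:
    """Fraction of all (sampled) content views that were of violating content."""
    if total_views <= 0:
        raise ValueError("total_views must be positive")
    return violating_views / total_views

# 0.05% is the same as 5 violating views in every 10,000 views.
assert abs(prevalence(5, 10_000) - 0.0005) < 1e-12

# The same numerator (50 views of content I did not like) against
# three made-up denominators.
for total in (100, 10_000, 1_000_000):
    print(f"50 of {total:,} views -> {prevalence(50, total):.4%}")
```

This prints 50.0000%, 0.5000% and 0.0050% for the three cases: an identical numerator, and three very different stories, depending entirely on a denominator that platforms rarely let anyone outside calculate.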

2 - The thing to come back to about MeitY’s attempts to amend the IT Rules, and its past advisories about deepfakes, is that they rely/build on the vaguely worded proactive monitoring obligations introduced by amendments to the IT Rules in October 2022 (I only repeat this because I can’t get over it), and are also stuck at the level of figurative numerators. Here is what 3(1)(b) said before the amendments:

(b) the rules and regulations, privacy policy or user agreement of the intermediary shall inform the user of its computer resource not to host, display, upload, modify, publish, transmit, store, update or share any information that (8 conditions)

And after (emphasis added and added):

(b) the intermediary shall inform its rules and regulations, privacy policy and user agreement to the user in English or any language specified in the Eighth Schedule to the Constitution in the language of his choice and shall make reasonable efforts to cause the user of its computer resource not to host, display, upload, modify, publish, transmit, store, update or share any information that (9 conditions (at the time))

It was never clear what ‘shall make reasonable efforts’ meant. Unfortunately, not many (if any) ‘intermediaries’ pushed back (publicly, anyway) against this significant change in their obligations. One can only assume that they expected it would not be seriously enforced. What is that saying about a gun being introduced in Act I of a play?

So it does confuse me that a lot of us disagreed with those particular amendments, and the way in which they were passed, but are somehow alright with them being used to threaten action against intermediaries because… we are confused, even panicking, about deepfakes? Oh, and intermediaries are far from being in the clear here - their meekness repeatedly comes back to bite all of us, with interest…

(There are a few more points I want to make, but Substack tells me I’m over some limit… so until next time…)